Gate Ventures: AI x Crypto from Beginner to Master (Part 2)

The previous article reviewed the development history of the AI industry and introduced the deep learning industry chain and the current state of the market in detail. This article goes on to examine the relationship between crypto and AI and surveys some noteworthy projects along the crypto industry value chain.

The Crypto x AI Relationship

Thanks to the development of ZK technology, blockchain has evolved toward the combined idea of decentralization plus trustlessness. Let’s go back to the beginning of blockchain, which was the Bitcoin chain. In Satoshi Nakamoto’s paper, Bitcoin: A Peer-to-Peer Electronic Cash System, he first described it as a trustless value-transfer system. Later, Vitalik et al. published the paper A Next-Generation Smart Contract and Decentralized Application Platform and launched a decentralized, trustless smart contract platform for value exchange.

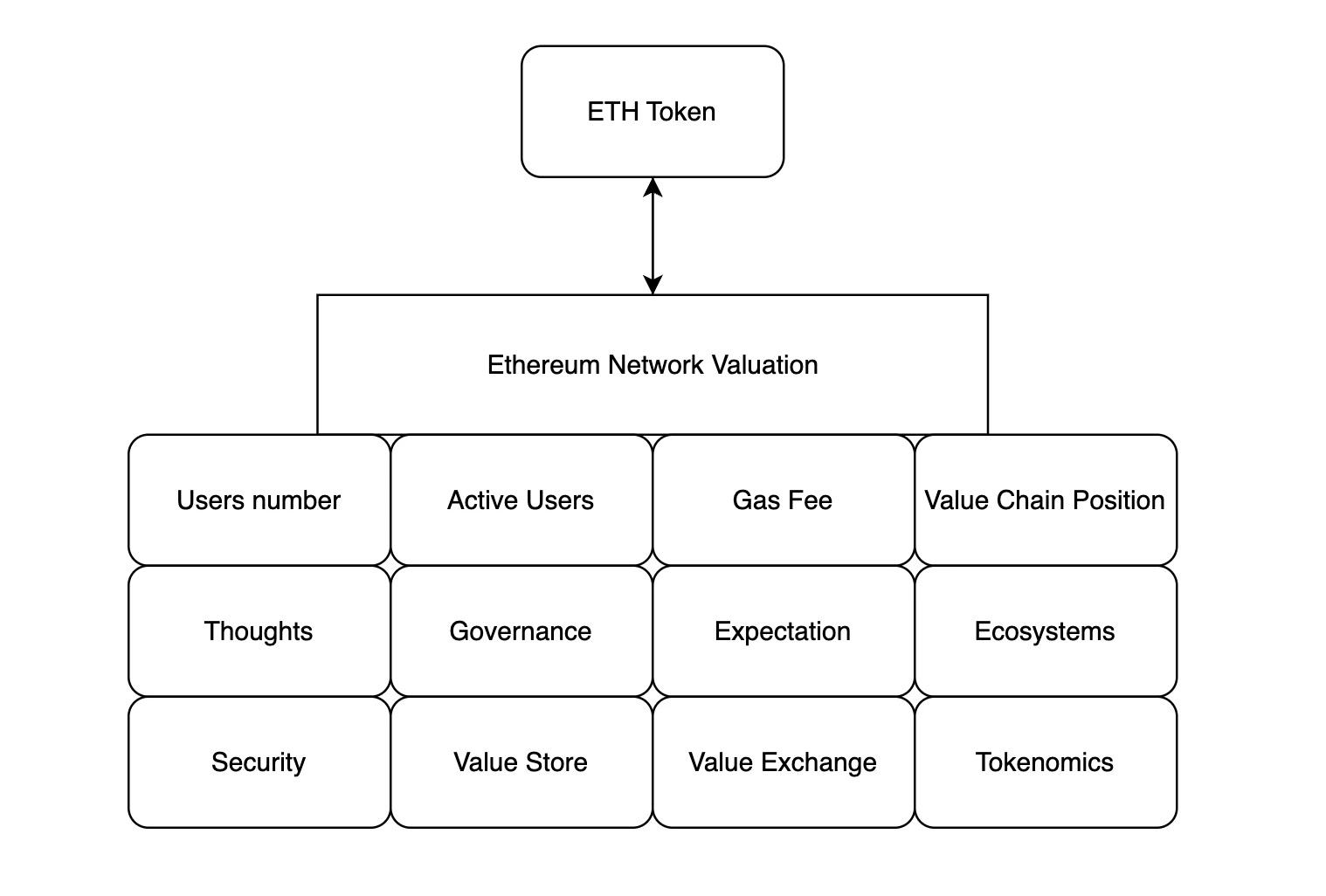

In essence, we believe that the entire blockchain network is a value network, and each transaction is a conversion of value based on the underlying tokens. Value here takes the form of tokens, and tokenomics is the set of rules that determines how that value is reflected in the token.

In the traditional Internet, value is settled on the basis of P/E and has one final form of expression, namely the stock price. All traffic, value, and influence feed into the company’s cash flow. This cash flow is the final expression of value: it is converted through P/E into the stock price and market capitalization.

The value of the network is determined by the price of the native token and multiple perspectives. Source: Gate Ventures

For the Ethereum network, however, ETH embodies the value of the network in multiple dimensions. It can earn stable cash flow through staking, and it also serves as a medium of exchange, a store of value, a consumable for network activity, and so on. In addition, it underpins the security layer through restaking and pays the gas fees of the Layer 2 ecosystem.

Tokenomics is very important because it determines the relative value of the ecosystem’s settlement asset (that is, the native token of the network). Although we cannot put a price on each dimension separately, the token price expresses this multi-dimensional value as a whole, and that value goes far beyond what corporate securities capture. Once a network is given a token and the token circulates, the result resembles Tencent’s Q coins, but with a limited supply and deflation and inflation mechanisms, representing the entire Tencent ecosystem. As a settlement asset it can also become a store of value and a source of yield. Such value is far greater than the value captured by a stock price, and the token is the ultimate embodiment of these multiple value dimensions.

Tokens are fascinating because they can assign value to a function or an idea. We use browsers, but we never consider the pricing of the underlying open source HTTP protocol. If a token were issued for it, its value would be reflected in transactions. Even a MEME coin and the humorous idea behind it have value. Token economics can empower any kind of innovation or creation, whether it is an idea or a physical object, and can put a value on almost anything in the world.

Tokens and blockchain technology, as a means of redefining and discovering value, are also crucial to any industry, including the AI industry. In the AI industry, issuing tokens can reshape the value of all aspects of the AI industry chain, which will encourage more people to take root in various segments of the AI industry, because the benefits they bring will become more significant, and it is not just cash flow that determines its current value. In addition, the synergy of tokens will increase the value of infrastructure, which will naturally lead to the formation of a fat protocol and thin application paradigm.

Secondly, all projects in the AI industry chain will gain capital appreciation, and this token can feed back to the ecosystem and promote the birth of a certain philosophical thought.

Token economics clearly has a positive impact on the industry. The immutable and trustless nature of blockchain technology also has practical significance for the AI industry, enabling applications that require trust. For example, users can allow their data to be used by a model while being assured that the model never sees the raw data, does not leak it, and returns the genuine inference result. When GPUs are in short supply, computing power can be allocated through the blockchain network. When GPUs are superseded by newer generations, idle GPUs can contribute computing power to the network and recover their surplus value. This is something that only a global value network can do.

In short, token economics can promote the reshaping and discovery of value, and decentralized ledgers can solve the trust problem and make value flow again on a global scale.

Crypto Industry Value Chain Project Overview

GPU Supply Side

Some projects in the GPU cloud computing market, Source: Gate Ventures

The above are the main participants in the GPU cloud computing market. The project with the largest market capitalization and the strongest fundamentals is Render, which launched in 2020. However, because public and transparent data is lacking, we cannot currently track the real-time state of its business. At present, the vast majority of workloads on Render are video rendering tasks rather than large-model tasks.

As an established DePIN business with real business volume, Render has indeed succeeded by riding the AI/DePIN wave, but the scenarios it serves differ from AI, so it does not strictly belong to the AI sector. Its video rendering business does meet real demand, which shows that the GPU cloud computing market can serve traditional rendering tasks as well as the training and inference of AI models. This reduces the risk of the GPU cloud market depending on a single source of demand.

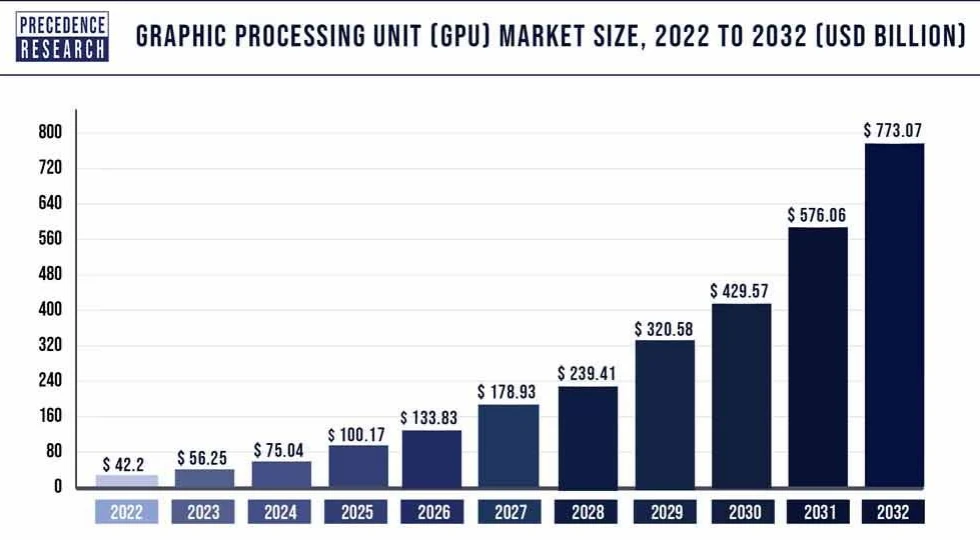

Global GPU computing power demand trend, Source: PRECEDENCE RESEARCH

In the Crypto AI industry chain, computing power supply is undoubtedly the most important link. According to industry forecasts, demand for GPU computing power will be approximately US$75 billion in 2024 and approximately US$773 billion by 2032, a compound annual growth rate (CAGR) of approximately 33.86%.
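As a quick sanity check on the forecast above, the growth rate can be recomputed from the two endpoint figures. This is a minimal sketch: the function name `cagr` is our own, and the only inputs are the US$75 billion and US$773 billion figures quoted in the text.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoint figures quoted in the text, in billions of US dollars.
demand_2024 = 75.0
demand_2032 = 773.0

rate = cagr(demand_2024, demand_2032, 2032 - 2024)
print(f"Implied CAGR 2024-2032: {rate:.2%}")
```

The result comes out very close to the 33.86% cited, so the three numbers in the forecast are consistent with each other.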

GPU iteration follows Moore’s Law (performance doubles every 18-24 months and the price halves), so demand for shared GPU computing power will become extremely high. As the GPU market expands rapidly, Moore’s Law will leave behind a large number of GPUs that are no longer the latest generation. These idle GPUs can continue to deliver value as long-tail computing power in a shared network. We are therefore optimistic about the long-term potential and practical utility of this track. Both small and medium-sized model workloads and traditional rendering workloads will generate relatively strong demand.

It is worth noting that many reports cite low prices as the main selling point of these products to illustrate the vast potential of on-chain GPU sharing and computing markets. We want to emphasize, however, that pricing in the cloud computing market depends not only on the GPU used but also on data transmission bandwidth, edge devices, supporting developer tools for AI hosting, and so on. With bandwidth, edge devices, and the rest held equal, token subsidies do allow lower prices, because part of the value is carried by the token and by network effects. That is a real price advantage, but it comes with the disadvantage of slow network data transmission, which slows down model development and rendering tasks.

Hardware Bandwidth

Some projects in the shared bandwidth track, Source: Gate Ventures

As we mentioned in the GPU supply side section, cloud computing vendors’ pricing depends not only on GPU chips but also on bandwidth, cooling systems, supporting AI development tools, and so on. In the AI industry chain section of the report, we also mentioned that, because of the parameter counts and data volumes of large models, data transmission significantly affects their training time. Bandwidth is therefore often the main bottleneck for large models, especially in on-chain cloud computing, where bandwidth and data exchange are slower and matter more because the work is shared among users all over the world. By contrast, cloud vendors such as Azure have built centralized HPC, which is easier to coordinate and to provision with bandwidth.
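To give a rough sense of why bandwidth dominates, the sketch below estimates how long it takes to move one full copy of a model’s weights over a link. All numbers are illustrative assumptions rather than measurements: a hypothetical 7-billion-parameter model stored as 16-bit values, a 0.1 Gbps consumer connection, and a 400 Gbps data-center interconnect. Protocol overhead and latency are ignored.

```python
def transfer_seconds(num_params: float, bytes_per_param: int, bandwidth_gbps: float) -> float:
    """Idealized time to send one copy of the weights over a link of the given speed."""
    total_bits = num_params * bytes_per_param * 8
    return total_bits / (bandwidth_gbps * 1e9)


# Hypothetical model: 7 billion parameters, 2 bytes each (16-bit values).
params = 7e9
bytes_per_param = 2

# Hypothetical links, chosen only to contrast a shared node with an HPC cluster.
links = [
    ("consumer connection (0.1 Gbps)", 0.1),
    ("cluster interconnect (400 Gbps)", 400.0),
]

for label, gbps in links:
    seconds = transfer_seconds(params, bytes_per_param, gbps)
    print(f"{label}: {seconds:,.2f} s per full weight transfer")
```

Under these assumptions the slower link takes minutes per transfer while the cluster interconnect takes a fraction of a second, and training typically involves exchanging data of this kind many times.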

Meson Network architecture diagram, Source: Meson

Taking Meson Network as an example, the future it envisions is one where users can easily exchange their spare bandwidth for tokens and those who need bandwidth can access global supply in the Meson market. Users can store data in their own databases, and other users can fetch data from the nearest users who hold it, thereby accelerating network data exchange and speeding up model training.

However, we believe that shared bandwidth is a pseudo-concept. In HPC, data is mainly stored on local nodes, whereas with shared bandwidth the data is stored some distance away (for example 1 km, 10 km, or 100 km). The latency introduced by this geographical distance is much higher than that of local storage, because it leads to frequent scheduling and allocation. This pseudo-demand is also why the market has not bought in. Meson Network’s latest financing round valued it at US$1 billion, but after listing on the exchange its FDV was only US$9.3 million, less than 1/10 of that valuation.

Data

As we have described in the deep learning industry chain, the number of parameters, computing power, and data of large models jointly affect the quality of large models. There are many market opportunities for data source companies and vector database providers, who will provide companies with various specific types of data services.

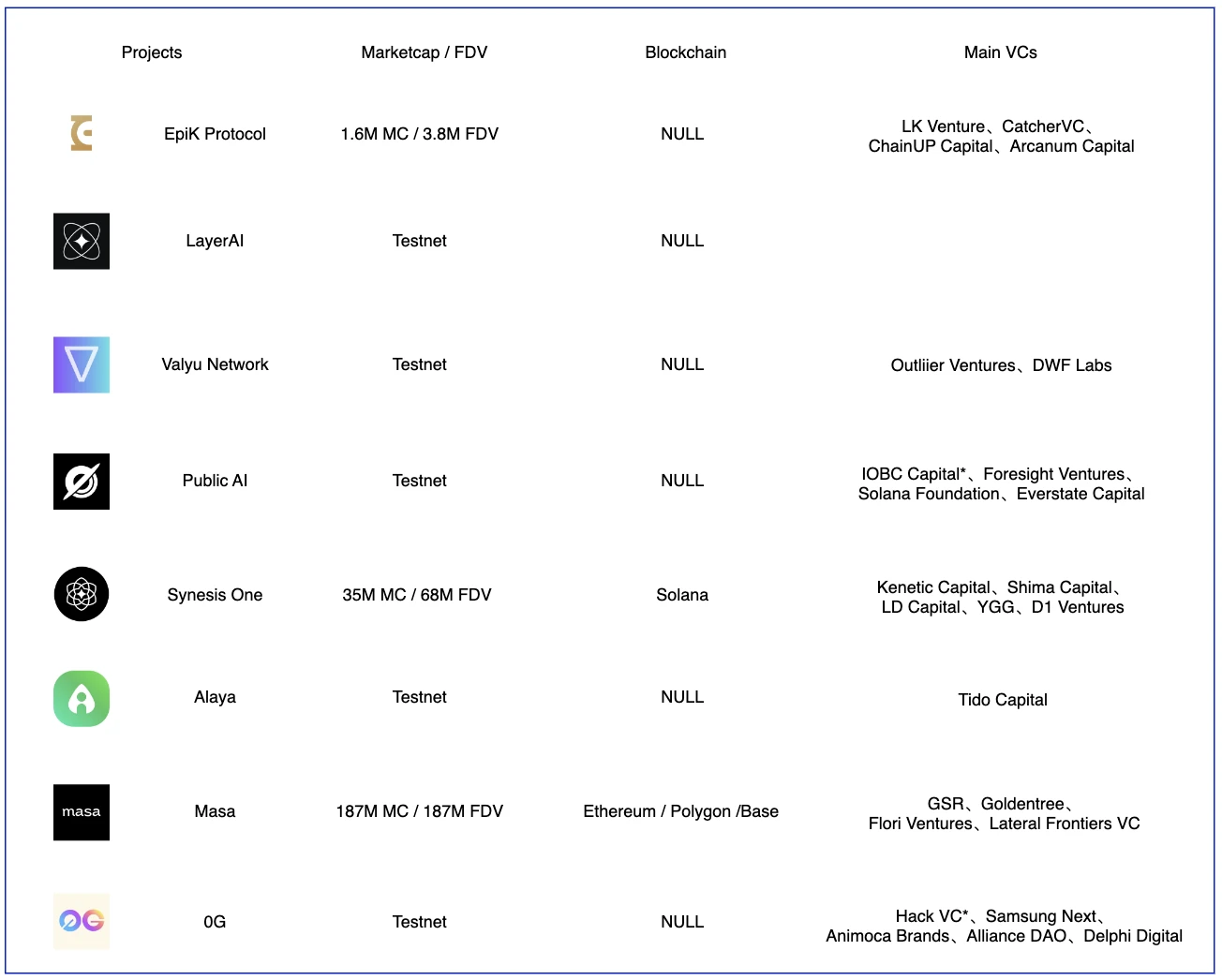

Some projects of AI data providers, Source: Gate Ventures

Currently launched projects include EpiK Protocol, Synesis One, Masa, and others. The difference is that EpiK Protocol and Synesis One collect data from public sources, whereas Masa is based on ZK technology and can collect private data, which is more user-friendly.

Compared with traditional Web2 data companies, the advantage of Web3 data providers lies on the data collection side, because individuals can contribute their own non-private data (and ZK technology can encourage users to contribute private data without leaking it). The project’s reach therefore becomes very wide: it is not limited to ToB but can price the data of any user. Any past data has value, and because of token economics, network value and token price are interdependent. Tokens issued at zero cost appreciate as the network’s value grows, and these tokens lower developers’ costs and are used to reward users, which gives users more motivation to contribute data.

We believe that this mechanism, which connects both Web2 and Web3 and gives almost anyone the opportunity to contribute their own data, can readily achieve partial mass adoption. On the data consumption side there are many kinds of models, so there is real supply and real demand, and users can participate with a few clicks online, so the barrier to entry is very low. The only thing to consider is privacy-preserving computation, so data providers in the ZK direction may have better prospects. Masa is a typical project of this kind.

ZKML

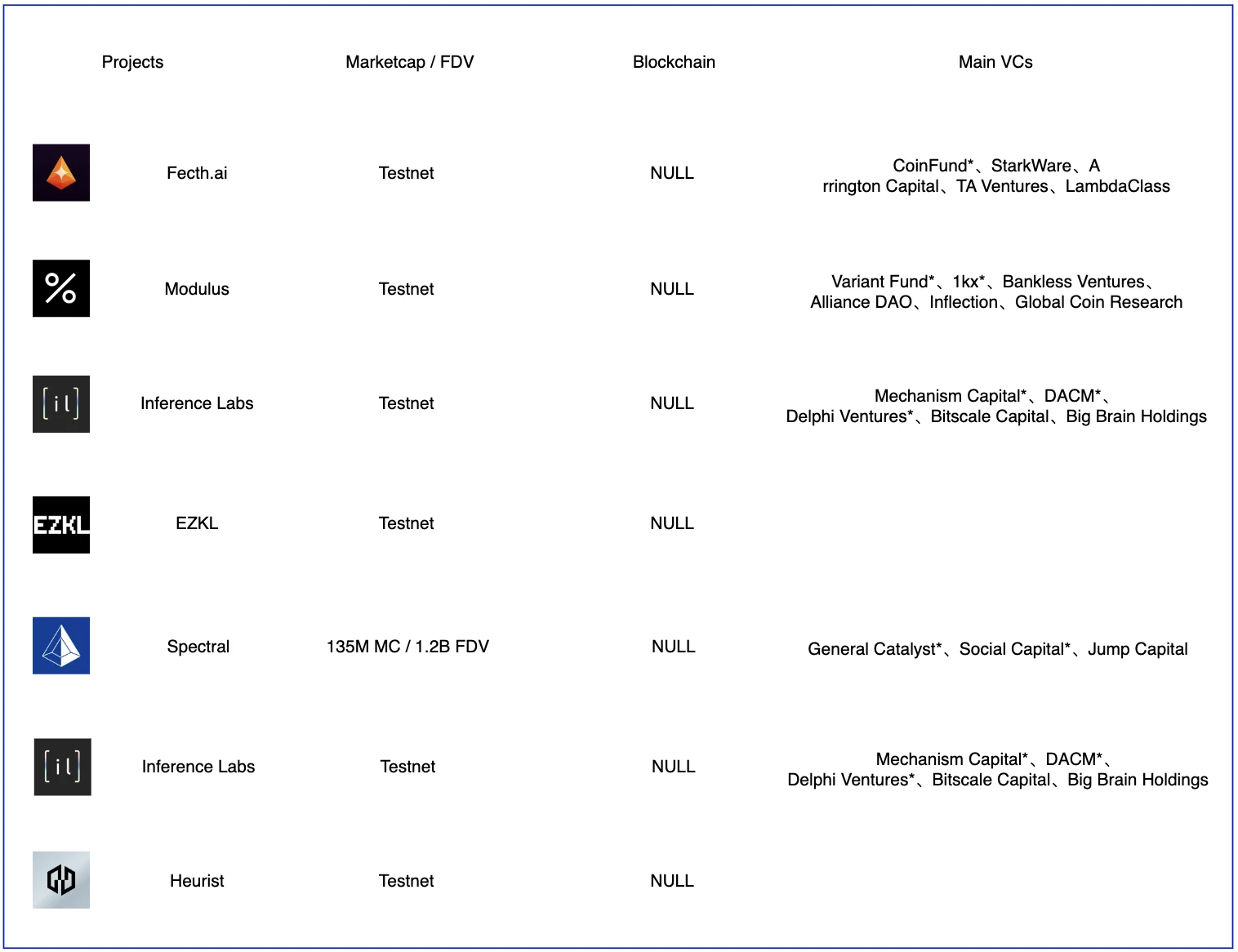

ZK Training / Inference Projects, Source: Gate Ventures

If data is to be used for privacy-preserving computation and training, the ZK solutions currently used in the industry apply homomorphic encryption to run inference on the data off-chain and then upload the results together with ZK proofs. This protects data privacy while keeping inference cheap and efficient. Running inference on-chain is not practical. This is also why investors in the ZKML track are generally of higher quality: the approach is consistent with business logic.

Besides the projects that focus on off-chain training and inference for artificial intelligence, there are also general-purpose ZK projects that offer Turing-complete ZK coprocessing and can produce ZK proofs for any off-chain computation and data. Projects such as Axiom, Risc Zero, and Ritual are also worth watching. Projects of this type have a wider application boundary and are therefore more forgiving for VCs.

AI Applications

AI x Crypto application landscape, Source: Foresight News

The situation for applications in blockchain is similar to that in the traditional AI industry: most activity is in building infrastructure. The upstream part of the industry chain is currently the most developed, while the downstream part, such as the application layer, is relatively weak.

AI + blockchain applications of this type are mostly traditional blockchain applications with automation and generalization capabilities added. For example, DeFi can execute the best trading and lending path based on the user’s intent. Applications of this type are called AI Agents. The most fundamental contribution of neural networks and deep learning to the software revolution is their generalization ability, which can adapt to the different needs of different groups of people and to data of different modalities.

We believe that this generalization capability will benefit AI Agents first. As a bridge between users and various applications, AI Agents can help users make complex on-chain decisions and choose the best path. Fetch.AI is a representative project (current market cap: US$2.1 billion). We use Fetch.AI to briefly describe how an AI Agent works.

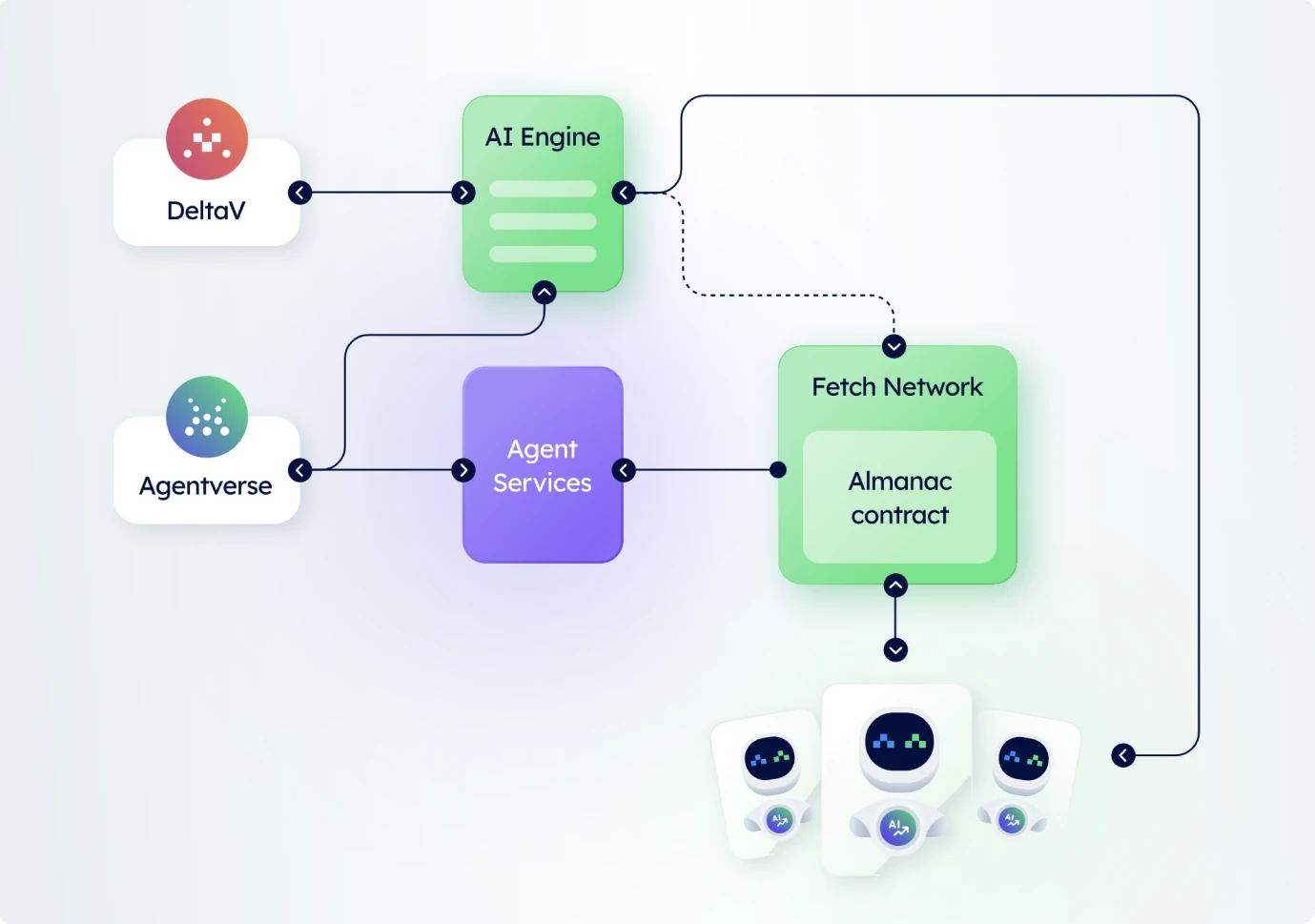

Fetch.AI architecture diagram, Source: Fetch.AI

The picture above is the architecture diagram of Fetch.AI. Fetch.AI defines an AI Agent as a self-running program on the blockchain network that can connect, search, and trade, and that can also be programmed to interact with other agents in the network. DeltaV is a platform for creating agents, and registered agents form an agent library called Agentverse. The AI Engine parses the user’s text and purpose, converts them into precise instructions that an agent can accept, and then finds the most suitable agent in Agentverse to execute those instructions. Any service can be registered as an agent, which forms an intent-guided, embeddable network. This network is well suited to embedding in applications such as Telegram, because every entry point leads to Agentverse, and any operation or idea typed into the chat box is executed on-chain by the corresponding agent. By connecting to a wide range of dApps, Agentverse can complete on-chain application interaction tasks. We believe that AI Agents have practical significance and meet a native need of the blockchain industry. The large model gives the application a brain, but the AI Agent gives the application hands.
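The register, parse, and dispatch flow described above can be illustrated with a toy intent router. This is a sketch under loud assumptions: every class and function name here (`Agent`, `AgentRegistry`, `handle`) is hypothetical and is not Fetch.AI’s API, and simple keyword overlap stands in for the language-model parsing that the AI Engine performs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Agent:
    """A registered service: a name, the intents it covers, and a handler."""
    name: str
    keywords: List[str]
    handler: Callable[[str], str]


class AgentRegistry:
    """Hypothetical stand-in for an agent library that services register with."""

    def __init__(self) -> None:
        self._agents: Dict[str, Agent] = {}

    def register(self, agent: Agent) -> None:
        self._agents[agent.name] = agent

    def best_match(self, request: str) -> Agent:
        # Keyword overlap is a placeholder for real intent parsing.
        words = set(request.lower().split())
        scored = [(len(words & set(a.keywords)), a) for a in self._agents.values()]
        score, agent = max(scored, key=lambda pair: pair[0])
        if score == 0:
            raise LookupError("no registered agent matches this request")
        return agent


def handle(registry: AgentRegistry, request: str) -> str:
    """Route a free-text request to the best-matching agent and run it."""
    return registry.best_match(request).handler(request)


registry = AgentRegistry()
registry.register(Agent("swap-agent", ["swap", "exchange", "trade"],
                        lambda r: f"swap-agent received: {r}"))
registry.register(Agent("lending-agent", ["lend", "borrow", "loan"],
                        lambda r: f"lending-agent received: {r}"))

print(handle(registry, "swap 10 USDC for ETH"))
```

In a real deployment the handler would build and submit on-chain transactions. Here it only echoes the request, so that the routing step stays visible.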

According to current market data, Fetch.AI has approximately 6,103 AI Agents online. Relative to this number of agents, the price may be overestimated, which suggests the market is willing to pay a high premium for its vision.

AI Public Chain

Public chains such as Tensor, Allora, Hypertensor, and AgentLayer are adaptive networks built specifically for AI models or agents. They form a link in the blockchain-native AI industry chain.

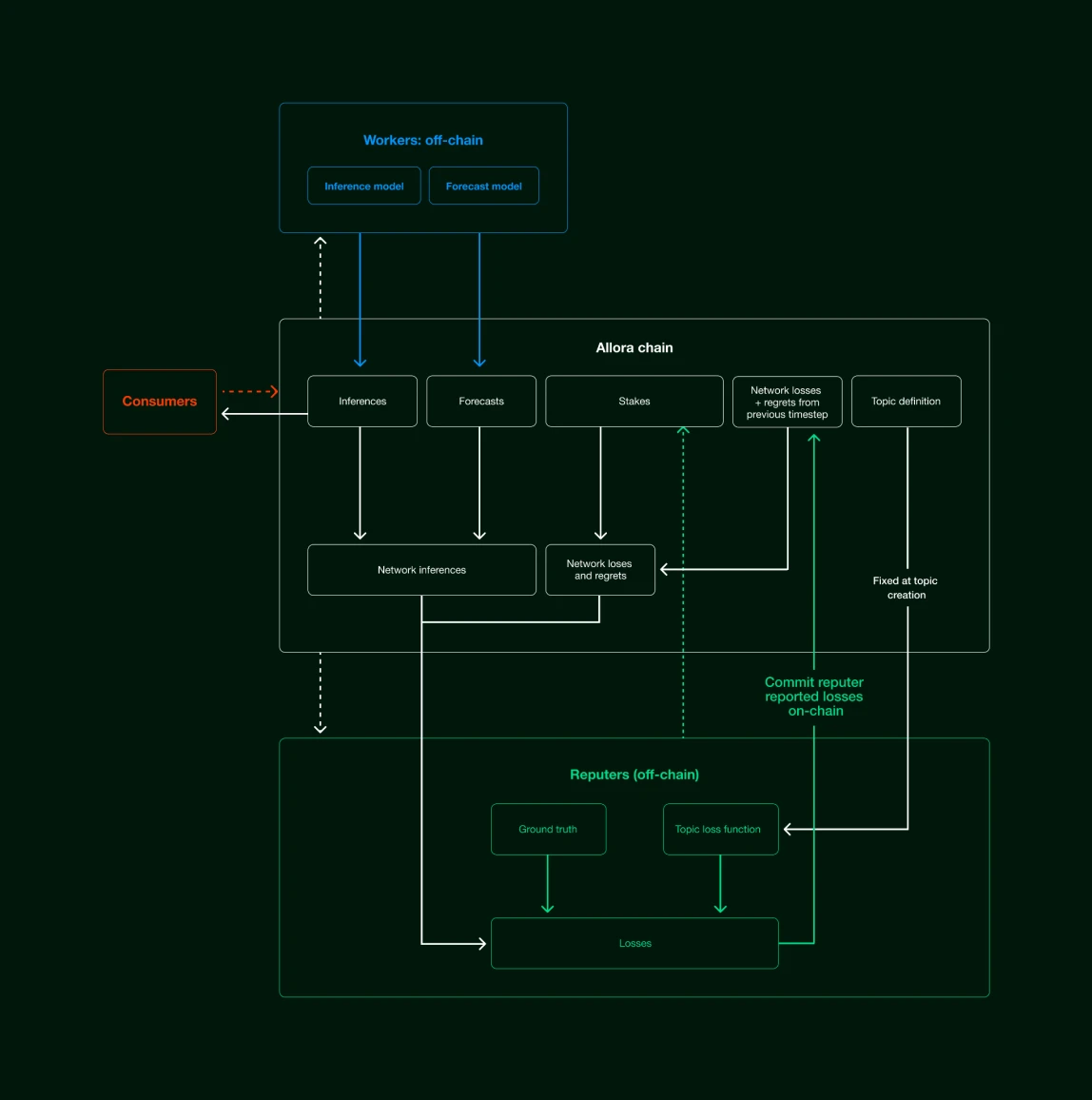

Allora architecture, Source: Allora Network

Let’s use Allora to briefly describe how this type of AI chain operates:

1. Consumers request inferences from the Allora Chain.

2. Miners run inference models and prediction models off-chain.

3. Evaluators assess the quality of the inferences provided by miners. Evaluators are usually experts in authoritative fields, so that they can assess inference quality accurately.

This is similar to RLHF (reinforcement learning from human feedback): inferences are uploaded to the chain, and on-chain evaluators rank the results, which can be used to improve the model’s parameters and thus benefits the model itself. Likewise, projects built on token economics can significantly reduce the cost of inference by distributing tokens, which plays a vital role in the development of the project.

In a traditional AI model trained with RLHF, a scoring model is generally set up, but this scoring model still requires human intervention, its cost cannot be reduced, and the pool of participants is limited. In contrast, crypto can bring in more participants and stimulate broader network effects.
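The consumer, miner, and evaluator roles described above can be sketched as a single scoring-and-reward round. This is a toy illustration under stated assumptions, not Allora’s actual mechanism: the function names are hypothetical, the evaluator is reduced to an inverse-error score against a known reference value, and rewards are split in proportion to score.

```python
from typing import Dict


def score_inferences(inferences: Dict[str, float], reference: float) -> Dict[str, float]:
    """Evaluator step: higher score for inferences closer to the reference value."""
    return {miner: 1.0 / (1.0 + abs(value - reference))
            for miner, value in inferences.items()}


def split_rewards(scores: Dict[str, float], reward_pool: float) -> Dict[str, float]:
    """Distribute a token reward pool to miners in proportion to their scores."""
    total = sum(scores.values())
    if total <= 0:
        return {miner: 0.0 for miner in scores}
    return {miner: reward_pool * score / total for miner, score in scores.items()}


# Miner step: hypothetical off-chain inferences for one consumer request.
inferences = {"miner_a": 101.0, "miner_b": 110.0}

# Evaluator step, using an assumed reference value of 100.0.
scores = score_inferences(inferences, reference=100.0)

# Token distribution step, with an assumed pool of 100 tokens.
rewards = split_rewards(scores, reward_pool=100.0)

for miner, amount in rewards.items():
    print(f"{miner}: score={scores[miner]:.4f}, reward={amount:.2f}")
```

The design point this illustrates is that ranking by evaluators directly determines token payouts, so miners are paid for quality rather than for merely submitting an answer.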

Summary

First of all, it should be emphasized that the AI development and industry chain discussions we are familiar with are based on deep learning, which does not represent the development direction of all AI. Many promising non-deep-learning technologies are still in the making. However, because GPT works so well, most of the market’s attention has been drawn to this proven technical path.

Some industry leaders also believe that current deep learning technology cannot achieve artificial general intelligence, so this technology stack may ultimately lead to a dead end. We believe, however, that the technology already has significance of its own and that GPT meets real demand, much like TikTok’s recommendation algorithm. Although that kind of machine learning cannot achieve artificial general intelligence, it is widely used in information feeds to optimize recommendations. We therefore still believe that this field is worth committing to, rationally and vigorously.

Tokens and blockchain technology, as a means of redefining and discovering value (global liquidity), also have their advantages for the AI industry. In the AI industry, issuing tokens can reshape the value of all aspects of the AI industry chain, which will encourage more people to take root in various segments of the AI industry, because the benefits will become more significant, and it is not just cash flow that determines its current value. Secondly, all projects in the AI industry chain will gain capital appreciation, and this token can feed back to the ecosystem and promote the birth of a certain philosophy.

The immutable and trustless nature of blockchain technology also has practical significance for the AI industry, because it enables applications that require trust. For example, users can allow their data to be used by a model while being assured that the model never sees the raw data, does not leak it, and returns the genuine inference result. When GPUs are in short supply, computing power can be allocated through the blockchain network. When GPUs are superseded by newer generations, idle GPUs can contribute computing power to the network so that otherwise wasted hardware is reused. This is something that only a global value network can do.

The weakness of GPU computing networks is bandwidth. HPC clusters can solve bandwidth centrally and thereby speed up training. GPU sharing platforms can call on idle computing power and reduce costs (through token subsidies), but training becomes very slow because of geographical distance, so this idle computing power is only suitable for small models that are not time-sensitive. In addition, these platforms lack supporting developer tools, so medium and large enterprises currently prefer traditional enterprise cloud platforms.

In conclusion, we still recognize the practical utility of combining AI and crypto. Token economics can reshape value and open up a broader view of value, while decentralized ledgers can solve the trust problem, mobilize value, and uncover surplus value.

References

Galaxy: A Panoramic Interpretation of the Crypto+AI Track

Full List of US AI Data Center Industry Chain

You’re Out of Time to Wait and See on AI

Disclaimer:

The above content is for reference only and should not be regarded as any advice. Please always seek professional advice before making any investment.

About Gate Ventures

Gate Ventures is the investment arm of Gate.io, focusing on investments in decentralized infrastructure, ecosystems, and applications that will reshape the world in the Web 3.0 era. Gate Ventures works with global industry leaders to empower teams and startups with innovative thinking and capabilities to redefine social and financial interaction models.

Official website: https://ventures.gate.io/

Twitter: https://x.com/gate_ventures

Medium: https://medium.com/gate_ventures