Editor’s Note: This article is reprinted from original content published by Turan Vural and Yuki Yuminaga of Fenbushi Capital on April 5, 2024. Founded in 2015, Fenbushi Capital is Asia’s leading blockchain asset management firm, with $1.6 billion in assets under management. The firm aims to play a significant role in shaping the future of blockchain technology across a variety of industries through research and investment. This article is an example of these efforts and represents the independent views of its authors, who have agreed to publish it here.

Data availability (DA) is a core technology for Ethereum scaling that allows nodes to efficiently verify that data is available to the network without hosting the data in question. This is critical for efficiently building rollups and other forms of vertical scaling, as it lets execution nodes ensure that transaction data is available during settlement. It is equally critical for sharding and other forms of horizontal scaling (planned future updates to the Ethereum network), as nodes need to prove that transaction data (or blobs) stored in a network shard is indeed available to the network.

Several DA solutions (e.g., Celestia, EigenDA, Avail) have been discussed and released recently, all of which aim to provide high-performance, secure infrastructure on which applications can publish data.

The advantage of an external DA solution over an L1 like Ethereum is that it provides a cheap and performant carrier for on-chain data. DA solutions usually consist of their own public chains designed to enable cheap and permissionless storage. Even with such modifications, hosting data natively on a blockchain is still extremely inefficient.

Given this, we find it intuitive to explore storage-optimized solutions such as Filecoin as the basis for a DA layer. Filecoin uses its blockchain to coordinate storage deals between users and storage providers, but allows the data itself to be stored off-chain.

In this post, we investigate the feasibility of a DA solution built on top of a decentralized storage network (DSN). We specifically consider Filecoin, as it is the most adopted DSN to date. We outline the opportunities that such a solution would bring and the challenges that would need to be overcome to build it.

The DA layer provides the following functions to the services that depend on it:

1. Client safety : No node can be convinced that unavailable data is available.

2. Global safety : All but a few nodes agree on the availability or unavailability of data.

3. Efficient data retrieval capabilities.

All of this needs to be done efficiently to enable scaling. A DA layer delivers the three properties above with higher performance and at lower cost. For example, any node could request a full copy of the data to verify custody, but this is inefficient. A system that provides all three properties yields a DA layer with the security required for an L2 to coordinate with its L1, along with stronger lower bounds in the presence of a malicious majority.

Data hosting

Data published to a DA solution has a useful lifespan: long enough to resolve a dispute or verify a state transition. Transaction data only needs to be available long enough to verify a correct state transition, or to give a validator enough opportunity to construct a fraud proof. As of this writing, Ethereum calldata is the most commonly used solution for projects that need data availability, such as rollups.

Efficient data verification

Data Availability Sampling (DAS) is the standard approach to the DA problem. It has the added security benefit of strengthening network actors’ ability to verify state information from their peers. However, it relies on nodes to perform sampling: responding to DAS requests is necessary to ensure that mined transactions are not rejected, but nodes have no positive or negative incentive to request samples, and a node that skips DAS faces no penalty. Celestia, for example, provides the first and only light client implementation that performs DAS, giving users stronger security assumptions and reducing data verification costs.

Efficient access

DA needs to provide efficient data access for projects that use it. A slow DA may become a bottleneck for services that rely on it, causing inefficiencies at best and system errors at worst.

Decentralized storage network

A Decentralized Storage Network (DSN, as described in the Filecoin whitepaper) is a permissionless network of storage providers that offer storage services to network users. Informally, it allows independent storage providers to coordinate storage deals with users who need storage, and provides those users with cheap and resilient data storage. This is coordinated through a blockchain that records storage deals and supports smart contract execution.

The DSN scheme is a tuple of three protocols: Put, Get, and Manage. This tuple has properties such as fault tolerance guarantees and participation incentives.

Put(data) → key

To store data under a unique key, the client performs a Put. This is done by specifying the period for which the data will be stored on the network, the number of copies of the data to be stored for redundancy, and a price negotiated with the storage provider.

Get(key) → data

The client executes Get to retrieve the data stored under the key.

Manage

Network participants invoke the Manage protocol to coordinate the storage space and services offered by providers and to repair faults. For Filecoin, this is managed through the blockchain. The blockchain records the deals between users and storage providers together with proofs that the data is stored correctly, ensuring that deals are upheld. Correct storage is demonstrated by publishing proofs that providers generate in response to network challenges. A storage error occurs when a storage provider fails to generate a proof of replication or proof of spacetime in time, as required by the Manage protocol, which results in the storage provider’s stake being slashed. If multiple providers host copies of the data on the network, a deal can self-heal by finding new storage providers to fulfill it.
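The Put/Get/Manage tuple can be pictured with a toy in-memory model. The sketch below is illustrative only: `ToyDSN`, `Provider`, and the SHA-256 key are hypothetical stand-ins, price negotiation is omitted, and nothing here reflects Filecoin’s actual deal flow, CIDs, or proof system.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Provider:
    """A hypothetical storage provider holding data keyed by content hash."""
    name: str
    store: dict = field(default_factory=dict)
    online: bool = True


class ToyDSN:
    """Toy model of the (Put, Get, Manage) tuple; not Filecoin's real protocol."""

    def __init__(self, providers):
        self.providers = providers
        self.deals = {}  # key -> {"replicas", "epochs", "holders"}

    def put(self, data: bytes, epochs: int, replicas: int) -> str:
        """Store `data` with `replicas` providers for `epochs`; return its key."""
        key = hashlib.sha256(data).hexdigest()
        chosen = [p for p in self.providers if p.online][:replicas]
        if len(chosen) < replicas:
            raise RuntimeError("not enough online providers")
        for p in chosen:
            p.store[key] = data
        self.deals[key] = {"replicas": replicas, "epochs": epochs,
                           "holders": [p.name for p in chosen]}
        return key

    def get(self, key: str) -> bytes:
        """Return the data from any online holder, checked against the key."""
        for p in self.providers:
            if p.online and p.name in self.deals[key]["holders"]:
                data = p.store[key]
                if hashlib.sha256(data).hexdigest() == key:
                    return data
        raise KeyError(key)

    def manage(self) -> None:
        """Drop holders that fail a (trivial) liveness check and re-replicate."""
        for key, deal in self.deals.items():
            alive = [p for p in self.providers
                     if p.online and p.name in deal["holders"] and key in p.store]
            if not alive:
                continue  # no surviving copy to repair from
            spare = [p for p in self.providers
                     if p.online and p.name not in deal["holders"]]
            while len(alive) < deal["replicas"] and spare:
                new = spare.pop(0)
                new.store[key] = alive[0].store[key]
                alive.append(new)
            deal["holders"] = [p.name for p in alive]


providers = [Provider("sp1"), Provider("sp2"), Provider("sp3")]
dsn = ToyDSN(providers)
key = dsn.put(b"rollup batch", epochs=100, replicas=2)
providers[0].online = False   # sp1 fails
dsn.manage()                  # the deal is repaired onto sp3
recovered = dsn.get(key)
```

In this toy, the liveness check stands in for proof challenges, and self-healing is simply copying the data to a spare provider; on Filecoin both are mediated by on-chain deals and proofs.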

DSN Opportunities

What DA projects have done so far is to transform blockchains into hot storage platforms. Since a DSN is already optimized for storage, rather than transforming blockchains into storage platforms, we can simply transform a storage platform into one that provides data availability. The collateral that storage providers post in native FIL tokens can provide cryptoeconomic security to guarantee data storage. Finally, the programmability of storage deals can provide flexibility in data availability terms.

The strongest motivation for adapting DSN functionality to the DA problem is to reduce the cost of data storage under a DA solution. As discussed below, storing data on Filecoin is much cheaper than storing it on Ethereum. At the current ETH/USD price, it costs over $3 million to write 1 GB of calldata to Ethereum, and that data is pruned after 21 days. This calldata fee can account for more than half of the transaction cost of an Ethereum-based rollup. By contrast, 1 GB of storage on Filecoin costs less than $0.0002 per month. Securing DA at this or any similar price would reduce transaction costs for users and help improve the performance and scalability of Web3.
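The order of magnitude of the calldata figure can be reproduced with a short calculation. This is a rough sketch, not the authors’ method: it assumes every byte is non-zero (16 gas per byte under EIP-2028), ignores per-transaction base gas and block size limits, and uses a hypothetical gas price and ETH price chosen only for illustration.

```python
GAS_PER_NONZERO_CALLDATA_BYTE = 16  # per EIP-2028
BYTES_PER_GB = 10**9


def calldata_cost_usd(num_bytes: int, gas_price_gwei: float, eth_usd: float) -> float:
    """Approximate cost of posting `num_bytes` of non-zero calldata."""
    gas = num_bytes * GAS_PER_NONZERO_CALLDATA_BYTE
    eth = gas * gas_price_gwei * 1e-9  # 1 gwei = 1e-9 ETH
    return eth * eth_usd


# Assumed market conditions (hypothetical): 60 gwei gas, $3,300 per ETH.
cost_per_gb = calldata_cost_usd(BYTES_PER_GB, gas_price_gwei=60, eth_usd=3300)
print(f"${cost_per_gb:,.0f} per GB of calldata")
```

Under these assumed prices the result lands slightly above $3 million per GB; the figure scales linearly with both the gas price and the ETH price.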

Economic security

In Filecoin, providing storage space requires collateral. If a provider fails to fulfill a deal or does not abide by the network’s guarantees, its collateral is slashed. Storage providers who fail to provide services risk losing their collateral and any profits they have earned.

Incentive mechanism adjustment

Many of the incentives in the Filecoin protocol align with DA goals. Filecoin provides disincentives for malicious or lazy behavior: during consensus, storage providers must proactively provide proof of storage in the form of proof of replication and proof of spacetime, continually proving the existence of storage without the assumption of an honest majority. If storage providers fail to provide proof, they will be slashed, removed from consensus, and subject to other penalties. Current DA solutions lack incentives for nodes to perform DAS and can only rely on temporary altruistic behavior to prove DA.

Programmability

The ability to customize data deals also makes DSN an attractive DA platform. Deals can have different durations, so users of a DSN-based DA pay only for the DA they need, and fault tolerance can be tuned by setting the number of replicas stored across the network. Further customization is available through smart contracts on Filecoin (Actors), which run on the FEVM. This also feeds Filecoin’s growing DApp ecosystem, from computation-first storage solutions such as Bacalhau to DeFi and liquid staking solutions such as Glif. Retriev provides incentive-aligned retrieval with permissioned referees through Filecoin Actors. Filecoin’s programmability can be used to tailor DA to the requirements of different solutions, so that platforms relying on DA do not pay for more than they need.

Challenges of DSN-based DA architecture

In our investigation, we found significant challenges that need to be overcome before building DA services on DSN. Now we discuss the feasibility of implementation, and we will focus on Filecoin.

Proof Latency

Cryptographic proofs of the integrity of deals and stored data on Filecoin take time to generate. When data is submitted to the network, it is divided into 32 GB sectors and sealed. Sealing is the basis for Proof of Replication (PoRep), which proves that a storage provider stores one or more unique copies of the data, and Proof of Spacetime (PoST), which proves that a storage provider continuously stores a unique copy throughout the storage deal. Sealing must be computationally expensive so that storage providers cannot seal data on demand and thereby undermine PoRep. Because the protocol periodically challenges storage providers for proofs of unique and continuous storage, the time required to seal must be longer than the response window, so that providers cannot forge proofs or replicas on the fly. As a result, it can take a provider about three hours to seal a sector of data.

Storage Threshold

Since sealing is computationally expensive, the size of a sealed sector must be economically viable. For storage providers, the storage price must justify the cost of sealing; likewise, the resulting cost of storage (here, for a sector of about 32 GB) must be low enough for users to be willing to store data on Filecoin. Smaller sectors can be sealed, but this drives up the storage price needed to compensate storage providers. To address this, data aggregators collect smaller pieces of data from users and submit them to Filecoin in batches close to 32 GB. Data aggregators commit to user data through Proofs of Data Segment Inclusion (PoDSI) and sub-piece CIDs (pCIDs): a PoDSI guarantees that the user’s data is contained in a sector, and the pCID is what the user uses to retrieve the data from the network.
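The aggregation step can be sketched as a simple batching routine. This is a minimal illustration under stated assumptions: `batch_into_sectors` is a hypothetical helper, it uses a naive first-come greedy policy, and it ignores the padding overhead that reduces the usable capacity of a real sector.

```python
SECTOR_BYTES = 32 * 2**30  # 32 GiB sector, ignoring padding overhead


def batch_into_sectors(blob_sizes, capacity=SECTOR_BYTES):
    """Greedily group blob sizes (in bytes) into batches that fit one sector."""
    batches, current, used = [], [], 0
    for size in blob_sizes:
        if size > capacity:
            raise ValueError("blob larger than a sector")
        if used + size > capacity:
            # Current batch is as full as this policy allows; start a new one.
            batches.append(current)
            current, used = [], 0
        current.append(size)
        used += size
    if current:
        batches.append(current)
    return batches


# A small capacity makes the behaviour easy to follow.
example = batch_into_sectors([40, 30, 50, 20, 60], capacity=100)
```

With a capacity of 100, the five blobs above are grouped as `[40, 30]`, `[50, 20]`, and `[60]`. A production aggregator would also have to decide how long to wait before sealing a partially filled sector.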

Consensus Constraints

Filecoin’s consensus mechanism, Expected Consensus, has a block time of 30 seconds and a finality time of several hours, which may improve in the near future (see FIP-0086 on fast finality for Filecoin). This is generally too slow to support the transaction throughput required by a Layer 2 that relies on DA for its transaction data. Filecoin’s block time is bounded below by storage provider hardware: the shorter the block time, the harder it is for storage providers to generate and submit storage proofs, and the more often they are penalized for missing the proof window for correctly stored data. To overcome this, InterPlanetary Consensus (IPC) subnets can be used to shorten consensus times. IPC uses a Tendermint-like consensus and DRAND for randomness: if DRAND is the bottleneck, an IPC subnet can achieve 3-second block times; if Tendermint is the bottleneck, proofs of concept such as Narwhal have achieved block times in the hundreds of milliseconds.

Retrieval speed

The final hurdle is retrieval. From the constraints above, we can infer that Filecoin is suited to cold or warm storage. DA data, however, is hot and must support high-performance applications. Incentivized retrieval is difficult in Filecoin: data needs to be unsealed before it is served to the client, which adds latency. Currently, fast retrieval is achieved through SLAs or by storing unsealed data alongside sealed sectors, neither of which can be relied on in a secure and permissionless application architecture on Filecoin. Although Retriev demonstrates that retrieval can be guaranteed through the FVM, incentive-aligned fast retrieval on Filecoin remains an area for further exploration.

Cost analysis

In this section, we consider the costs of these design factors. We show the cost of storing 32 GB as Ethereum calldata, Celestia blobdata, EigenDA blobdata, and sectors on Filecoin (using near-current market prices).

The analysis highlights the price of Ethereum calldata: $100 million for 32 GB of data. This price reflects the cost of the security behind Ethereum consensus and is subject to fluctuations in ETH and gas prices. The Dencun upgrade introduced Proto-Danksharding (EIP-4844), which added blob transactions with a target of 3 blobs per block, each about 125 KB in size, along with variable blob gas pricing that maintains the target number of blobs per block. This upgrade reduced the cost of Ethereum DA to about one fifth: $20 million for 32 GB of blob data.

Celestia and EigenDA are significant improvements: 32 GB of data costs $8,000 and $26,000 respectively. Both are subject to market price fluctuations and reflect the cost of consensus data security to some extent: Celestia uses its native TIA token, while EigenDA uses Ether.

In all of the above cases, the data stored is not permanent. Ethereum calldata is stored for 3 weeks, blobs for 18 days, and EigenDA stores blobs for a default period of 14 days. In the current Celestia implementation, archive nodes store blob data indefinitely, but light nodes can only sample for up to 30 days.

The last two tables are a direct comparison between Filecoin and current DA solutions. The cost equivalence first lists the cost of a single byte of data on a given platform, and then shows the number of Filecoin bytes that can be stored in the same amount of time at the same cost.

This suggests that Filecoin is orders of magnitude cheaper than current DA solutions, costing only a fraction of a cent to store the same amount of data for the same amount of time. Unlike Ethereum nodes and the nodes of other DA solutions, Filecoin nodes are optimized to provide storage services, and their proof system lets nodes prove storage instead of replicating the data across every node in the network. Setting aside storage provider economics (such as the energy cost of sealing data), the basic overhead of the Filecoin storage process is negligible. Compared to Ethereum, this suggests a market opportunity of up to millions of dollars per GB for systems that can provide secure and high-performance DA services on Filecoin.

Throughput
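The gap can be made concrete using only the figures quoted in this article. The sketch below is a back-of-the-envelope comparison, not a reconstruction of the authors’ tables: it treats the quoted "less than $0.0002 per GB per month" as the Filecoin price and compares each 32 GB cost against one month of Filecoin storage, even though the other solutions retain data for less than a month.

```python
# Costs quoted in this article for publishing 32 GB (USD).
COST_32GB_USD = {
    "Ethereum calldata": 100_000_000,
    "Ethereum blobs": 20_000_000,
    "EigenDA": 26_000,
    "Celestia": 8_000,
}
FILECOIN_USD_PER_GB_MONTH = 0.0002  # upper bound quoted in this article


def ratio_to_filecoin(cost_32gb_usd: float) -> float:
    """How many times more expensive than one month of 32 GB on Filecoin."""
    filecoin_32gb = 32 * FILECOIN_USD_PER_GB_MONTH
    return cost_32gb_usd / filecoin_32gb


ratios = {name: ratio_to_filecoin(cost) for name, cost in COST_32GB_USD.items()}
for name, r in ratios.items():
    print(f"{name}: ~{r:.2e}x the Filecoin price")
```

Because the Filecoin price used here is an upper bound and covers a longer retention period, these ratios understate the difference under the article’s own numbers.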

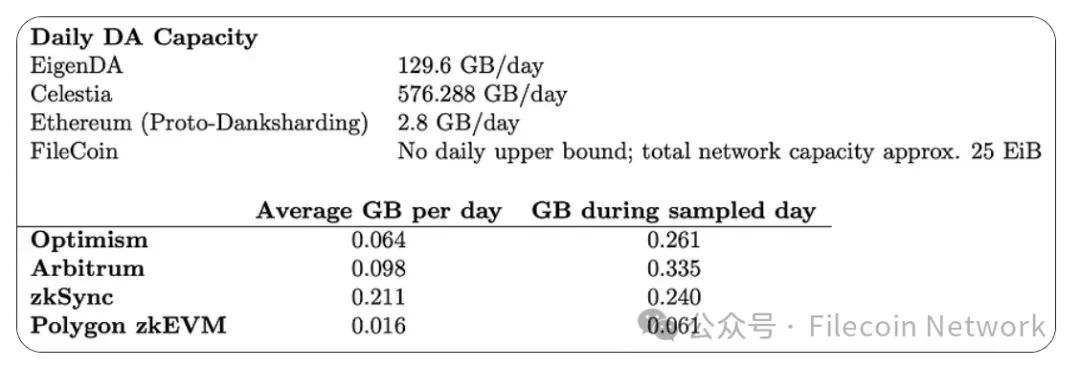

Below, we will consider the capacity of DA solutions and the demand generated by major Layer 2 rollups.

Since the Filecoin blockchain is organized in tipsets, with multiple blocks at each block height, the number of deals that can be made is not limited by consensus or block size. Filecoin’s hard data constraint is its network-wide storage capacity, not the capacity allowed by consensus.

For daily DA demand, we take data from Rollups DA and Execution by Terry Chung and Wei Dai, which includes daily averages over 30 days as well as data for a single sampled day. This lets us consider average demand without ignoring deviations from the average (for example, Optimism’s demand on August 15, 2023 was about 261,000,000 bytes, more than four times its 30-day average of 64,000,000 bytes).

From this selection, we can see that while there is an opportunity to reduce DA costs, DA demand would need to increase significantly to make effective use of Filecoin’s 32 GB sector size. Sealing a 32 GB sector with less than 32 GB of data is wasteful, but it can be done while still retaining a cost advantage.
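The mismatch between rollup demand and sector size can be checked with the Optimism figures quoted above. This sketch assumes a sector of exactly 32 GiB and a single rollup filling it alone; `days_to_fill_sector` is a hypothetical helper.

```python
SECTOR_BYTES = 32 * 2**30  # assuming a 32 GiB sector


def days_to_fill_sector(bytes_per_day: int, sector_bytes: int = SECTOR_BYTES) -> float:
    """Days a single data source needs to accumulate one full sector."""
    return sector_bytes / bytes_per_day


avg_days = days_to_fill_sector(64_000_000)    # Optimism 30-day average
peak_days = days_to_fill_sector(261_000_000)  # Optimism on August 15, 2023
print(f"average demand: {avg_days:.0f} days, peak demand: {peak_days:.0f} days")
```

At its average rate, one rollup would need roughly a year and a half to fill a sector, and more than four months even at the sampled peak, which is why aggregation across users or sealing partially filled sectors is needed.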

Architecture

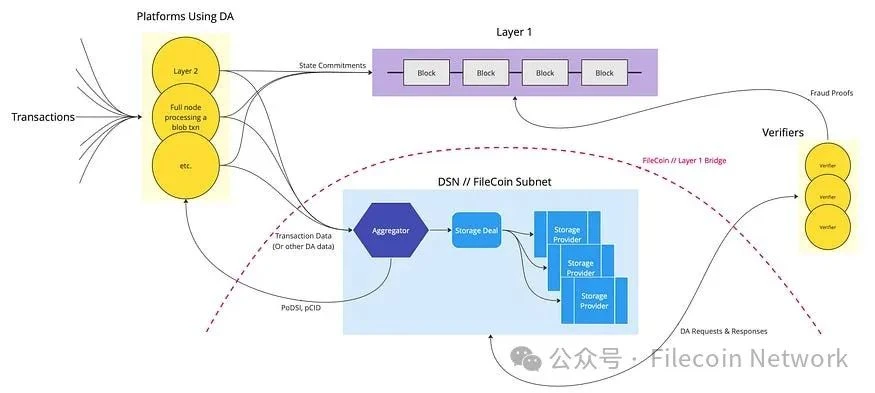

In this section, we will consider the technical architecture that would be possible if we were to build it today. We will consider this architecture in the context of an arbitrary L2 application and the L1 chain that the L2 serves. We do not consider Filecoin as an example L1 because the solution is an external DA solution, just like Celestia and EigenDA.

Components

Even at a high level, a DA solution on Filecoin would draw on many different features of the Filecoin ecosystem.

Transactions : Downstream users conduct transactions on a platform that requires DA, which may be L2.

Platforms using DA : These platforms use DA as a service. An example is an L2 that publishes transaction data to the Filecoin DA solution and commitments to an L1 (such as Ethereum).

Layer 1 : This is any L1 that contains data commitments pointing to a DA solution. This could be Ethereum, supporting an L2 that uses the Filecoin DA solution.

Aggregator : The front end of the Filecoin-based DA solution is an aggregator, a centralized component that receives transaction data from L2s and other DA users and aggregates it into 32 GB sectors suitable for sealing. Although a simple proof of concept would include a centralized aggregator, platforms using the DA solution could also run their own, for example as a sidecar to an L2 sequencer. The centralization of the aggregator is comparable to that of an L2 sequencer or EigenDA’s disperser. Once the aggregator has compiled a payload close to 32 GB, it enters into a storage deal with a storage provider to store the data. Users receive a guarantee that their data will be included in the sector in the form of a PoDSI (Proof of Data Segment Inclusion), and a pCID identifies their data once it is on the network. This pCID is included in the state commitment on L1 to reference the data supporting the transaction.

Validators : Validators request data from storage providers to ensure the integrity of state commitments and to build fraud proofs, which are submitted to L1 in the event of provable fraud.

Storage Deals : Once the aggregator has compiled a payload of close to 32 GB, the aggregator enters into a storage deal with a storage provider to store the data.

Publishing a blob (Put) : To initiate a Put, the DA client submits a blob containing the transaction data to the aggregator. This can be done off-chain, or on-chain through an on-chain aggregation oracle. To confirm receipt of the blob, the aggregator returns to the client a PoDSI, proving that the blob is included in the aggregated sector that will be submitted to the subnet, along with a pCID (sub-piece content identifier). The client and other interested parties use the pCID to reference the blob once it is available on Filecoin.
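The role a PoDSI plays in the Put flow can be illustrated with a generic Merkle inclusion proof. This is a stand-in technique, not the PoDSI construction itself: the tree layout, the duplication of the last node on odd-sized levels, and the function names are simplifying assumptions for the sketch.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root_and_proof(leaves, index):
    """Return (root, proof); proof is a list of (sibling_hash, sibling_is_left)."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:  # duplicate the last node on odd-sized levels
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify_inclusion(leaf: bytes, proof, root: bytes) -> bool:
    """Recompute the path from `leaf` to the root and compare."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


# The aggregator commits to all blobs and hands one client its proof.
blobs = [b"blob-a", b"blob-b", b"blob-c"]
root, proof = merkle_root_and_proof(blobs, index=2)
ok = verify_inclusion(b"blob-c", proof, root)
bad = verify_inclusion(b"blob-x", proof, root)
```

The client only needs its own blob, the short proof, and the committed root to check inclusion, which is the property the aggregator’s receipt is meant to provide.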

Data deals appear on-chain within minutes of the deal being made. The largest contributor to latency is sealing time, which can take up to three hours. This means that although the deal has been made and the user can be confident that the data will appear on the network, there is no way to guarantee that the data is queryable until sealing is complete. The Lotus client has a fast retrieval feature, in which an unsealed copy of the data is stored alongside the sealed copy and can be served as soon as the unsealed data has been transferred to the storage provider, as long as the retrieval deal does not depend on proof that the sealed data has appeared on the network. However, this feature is at the discretion of the storage provider and is not cryptographically guaranteed as part of the protocol. Providing fast retrieval guarantees would require changes to the consensus and penalty/incentive mechanisms to enforce it.

Retrieving a blob (Get) : Retrieval is similar to a Put operation. A retrieval deal is required, which will appear on-chain within a few minutes. Retrieval latency depends on the terms of the deal and on whether an unsealed copy of the data is stored for fast retrieval. With fast retrieval, latency depends on network conditions. Without fast retrieval, the data needs to be unsealed before being served to the client, which takes as long as sealing, about three hours. Without optimization, therefore, the maximum round-trip time is six hours, and significant improvements to data serving are required before this can become a viable DA or fraud proof system.
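The round-trip figures above follow from a simple additive model. The sketch below is a deliberately coarse assumption: it ignores the minutes spent getting deals on-chain and network transfer time, and it conservatively treats fast retrieval as still bounded by the sealing time.

```python
def round_trip_hours(seal_hours: float = 3.0,
                     unseal_hours: float = 3.0,
                     fast_retrieval: bool = False) -> float:
    """Worst-case hours from accepting a storage deal to serving the data back."""
    if fast_retrieval:
        # An unsealed copy is kept, so no unsealing step is needed.
        # Conservative assumption: data is only served once sealing is done.
        return seal_hours
    return seal_hours + unseal_hours


slow = round_trip_hours()
fast = round_trip_hours(fast_retrieval=True)
print(f"without fast retrieval: {slow} h, with fast retrieval: {fast} h")
```

Providers with faster sealing hardware would lower both figures; the parameters are exposed so that such configurations can be plugged in.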

DA Proof : DA proof can be divided into two steps: through the PoDSI provided when submitting data to the aggregator during the transaction process, and then through the continuous commitment of PoRep and PoST provided by the Filecoin consensus mechanism. As mentioned above, PoRep and PoST provide planned and provable guarantees for data custody and persistence.

This solution will make heavy use of bridges, as any client that relies on DA (whether or not it builds proofs) needs to be able to interact with Filecoin. For pCIDs included in state transitions published to L1, validators can run a preliminary check to ensure that no false pCID has been submitted. There are several ways to do this, for example through an oracle that publishes Filecoin data on L1, or by having a validator verify that a data deal or sector corresponding to the pCID exists. Similarly, verifying validity or fraud proofs published to L1 may also require a bridge to confirm that the proof is sound. Currently available bridges are Axelar and Celer.

Security Analysis

Filecoin integrity is enforced by slashing collateral. Collateral can be slashed in two cases: storage errors or consensus errors. A storage error is a storage provider’s failure to provide proofs (PoRep or PoST) of stored data, which corresponds to a lack of data availability in our model. A consensus error is malicious behavior in consensus, the protocol that manages the transaction ledger from which the FEVM is abstracted.

- Sector errors are penalties for failing to post continuous proofs of storage. Storage providers have a one-day grace period during which they are not penalized for storage errors. After 42 days in error, the sector is terminated. Fees incurred are burned.

BR(t) = ProjectedRewardFraction(t) * SectorQualityAdjustedPower

- Sector termination occurs if a sector has been in error for 42 days or if a storage provider intentionally terminates a deal. The termination fee is equal to the highest amount the sector earned before termination, capped at 90 days of revenue. Unpaid deal fees are refunded to users. Incurred fees are burned.

max(SP(t), BR(StartEpoch, 20 d) + BR(StartEpoch, 1 d) * terminationRewardFactor * min(SectorAgeInDays, 140))

- When a deal is terminated, Storage Market Actor slashing applies: the collateral that the storage provider posted behind the deal is slashed.
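The termination fee expression above can be evaluated directly. In this sketch the inputs are hypothetical FIL amounts, not network values, and `SP` and `BR` are taken as given numbers rather than computed from chain state.

```python
def termination_fee(sp: float, br_20d: float, br_1d: float,
                    termination_reward_factor: float,
                    sector_age_days: float) -> float:
    """max(SP(t), BR(StartEpoch, 20d) + BR(StartEpoch, 1d) * factor * min(age, 140))."""
    capped_age = min(sector_age_days, 140)
    return max(sp, br_20d + br_1d * termination_reward_factor * capped_age)


# Hypothetical inputs in FIL; none of these values come from the network.
fee_young = termination_fee(sp=0.5, br_20d=0.2, br_1d=0.01,
                            termination_reward_factor=0.5, sector_age_days=30)
fee_old = termination_fee(sp=0.5, br_20d=0.2, br_1d=0.01,
                          termination_reward_factor=0.5, sector_age_days=400)
```

For the young sector the fee falls back to `SP(t)`; for the old one the age cap of 140 days applies. If the reward factor is taken to be 1/2, as assumed here, the capped expression amounts to 20 + 70 = 90 days of daily reward, which is consistent with the 90-day cap stated above.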

The security provided by Filecoin is very different from that of other blockchains. While blockchain data is typically secured through consensus, Filecoin consensus only secures the transaction ledger, not the data referenced by transactions. Data stored on Filecoin has only as much security as is needed to incentivize storage providers to provide storage: it is secured through error penalties and business incentives (such as reputation with users). On other blockchains, by contrast, a data error is equivalent to a consensus violation that undermines the security of the chain or its notion of transaction validity. Filecoin is designed to tolerate faults in data storage and therefore uses consensus only to secure its ledger and deal-related activity. The cost to a storage provider of failing to fulfill its data deals is a penalty of up to 90 days’ worth of storage rewards, plus the loss of the collateral it posted to secure the deal.

Therefore, the cost of a data withholding attack by a Filecoin provider is simply the opportunity cost of the retrieval deal. Data retrieval on Filecoin relies on user-paid fees to incentivize storage providers, and failing to respond to a retrieval request carries no penalty for the provider. To reduce the risk of a single storage provider ignoring or rejecting a retrieval deal, data on Filecoin can be stored with multiple storage providers.

Since the economic security behind Filecoin data is much lower than that of blockchain-based solutions, protection against data manipulation must also be considered. The Filecoin proof system provides it. Data is referenced by CIDs, which make corruption immediately detectable: a provider cannot serve corrupted data, because it is easy to verify that the retrieved data matches the requested CID. Nor can a provider store corrupted data in place of the original. Upon receiving user data, the provider must prove that the data sector was sealed correctly in order to activate the deal, so a storage deal cannot be started with corrupted data. For the duration of the deal, PoSTs prove continued custody (note that this proves both custody of the sealed sector at the time of the proof and custody since the last PoST). Since PoST depends on the sealed sector at the time the proof is generated, a corrupted sector yields an invalid PoST and therefore a sector error. Storage providers thus can neither store nor serve corrupted data, cannot be rewarded for services on corrupted data, and cannot avoid being penalized for tampering with user data.

Security can be enhanced by increasing the collateral that storage providers commit to the Storage Market Actor, an amount currently agreed between the storage provider and the user. If we assume this collateral is high enough (e.g. equal to the stake of an Ethereum validator) to deter providers from defaulting, we can ask what still needs to be secured (although this would be extremely capital-inefficient, since the collateral would be needed for every blob or aggregated sector in a deal). A data provider could still make data unavailable for up to 41 days before the Storage Market Actor terminates the storage deal. Since data deals are short, we should assume the data could be unavailable until the last day of the deal. Absent coordination among malicious actors, this can be mitigated by replicating across multiple storage providers so that the data continues to be served.

We can also consider the cost to an attacker of overturning consensus, either to have false proofs accepted or to rewrite ledger history and remove deals from the order book without the responsible storage providers being penalized. It is worth noting that an attacker who achieved this could manipulate Filecoin’s ledger at will. Such an attack requires at least a majority of the stake in the Filecoin chain. Stake is tied to the storage provided to the network: with the Filecoin network currently at 25 EiB (1 EiB = 2⁶⁰ ≈ 1.15 × 10¹⁸ bytes), a malicious actor would need at least 12.5 EiB to produce a chain that wins the fork choice rule. This is further mitigated by slashing for consensus errors, where the penalty is the loss of all pledged collateral and block rewards, and suspension from participating in consensus.
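The scale of the majority attack can be put in terms of sectors. This is a rough sketch under simplifying assumptions: it treats raw capacity as consensus power and assumes every sector is 32 GiB.

```python
EIB = 2**60                 # bytes in one exbibyte
SECTOR_BYTES = 32 * 2**30   # assuming 32 GiB sectors

network_bytes = 25 * EIB                    # network capacity quoted in the text
attacker_bytes = network_bytes // 2         # 12.5 EiB
attacker_sectors = attacker_bytes // SECTOR_BYTES
print(f"{attacker_sectors:,} sealed sectors needed")
```

Under these assumptions the attacker would have to seal and continuously prove on the order of four hundred million sectors, each of which takes hours to seal on standard hardware.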

Aside: Withholding attacks on other DA solutions

While the above case demonstrates Filecoin’s shortcomings in protecting data from withholding attacks, it is not the only example.

- Ethereum : Generally speaking, the only way to guarantee a response to a request made to the Ethereum network is to run a full node. Full nodes are under no obligation to satisfy data retrieval requests outside of consensus. Constructions such as PeerDAS introduce a peer scoring system for node responses to data retrieval, where nodes with sufficiently low scores (essentially a DA reputation) may be isolated from the network.

- Celestia : Celestia has stronger per-byte security than the Filecoin construction and is resistant to withholding attacks, but the only way to take advantage of this security is to host a full node. Requests made to Celestia infrastructure that is not internally owned and operated can be censored without penalty.

- EigenDA : As with Celestia, any service can run an EigenDA operator node to ensure retrieval of its own data. Any data retrieval request outside the protocol is therefore subject to censorship. Note also that EigenDA has a centralized and trusted disperser that is responsible for data encoding, KZG commitments, and data distribution, similar to our aggregator.

Retrieval Security

Retrievability is necessary for DA. Ideally, market forces would incentivize economically rational storage providers to accept retrieval deals and to compete with other providers, driving down prices for users. Even assuming this is enough for providers to offer retrieval services, it is reasonable to require stronger security given the importance of DA.

Currently, retrieval cannot be guaranteed through the economic security described above. This is because it is cryptographically difficult to prove, in a trust-minimized way, that the client did not receive the data (the client would need to disprove the storage provider’s claim to have sent it). To secure retrieval through Filecoin’s economic security, protocol-native retrieval guarantees are required. With minimal changes to the protocol, this means that failed retrieval needs to be tied to a sector error or deal termination. Retriev is a proof of concept that provides data retrieval guarantees by using trusted referees to mediate retrieval disputes.

Aside: Retrieval on other DA solutions

As mentioned above, Filecoin lacks the protocol-native retrieval guarantees necessary to prevent selfish behavior by storage (or retrieval) providers. In the case of Ethereum and Celestia, the only way to guarantee that protocol data can be read is to self-host a full node or to trust the SLA of an infrastructure provider. On Filecoin, guaranteeing retrieval is not trivial even for a storage provider. The analogous setup is to become a storage provider (at significant infrastructure cost) and successfully accept, as a provider, the very storage deal one posted as a user, at which point one is paying oneself to provide storage.

Latency Analysis

Filecoin latency is determined by multiple factors, such as the network, topology, storage provider user configuration, and hardware capabilities. We provide a theoretical analysis that discusses these factors and builds on the performance that can be expected.

Due to the design of Filecoin’s proof system and the lack of retrieval incentives, Filecoin is not optimized to provide high-performance round-trip latency from the initial publication of data to the initial retrieval of data. High-performance retrieval on Filecoin is an active area of research that is constantly changing as storage providers improve capabilities and Filecoin introduces new features. We define “round trip” as the time from submitting a data transaction to the earliest data submitted to Filecoin can be downloaded.

Block time

In Filecoin’s Expected Consensus, a data transaction can be included within one 30-second block time. One hour is the typical confirmation time for sensitive on-chain data (such as coin transfers).

Data processing

Data processing times vary by storage provider and configuration. On standard storage provider hardware, the sealing process takes 3 hours. Storage providers often shorten this through special client configurations, parallelization, and investment in more powerful hardware. The same variation affects the duration of sector unsealing, which can be avoided entirely through the fast retrieval option in Filecoin clients such as Lotus. The fast retrieval setting stores an unsealed copy of the data alongside the sealed data, greatly speeding up retrieval. Based on this, we can assume a worst-case latency of 3 hours from accepting a data transaction to the data being available on-chain.
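The figures in this latency analysis can be combined into a rough round-trip estimate. The sketch below is only illustrative: the constants are the values quoted in the text (30-second block time, 1-hour confirmation, 3-hour sealing, i.e. encapsulation), while the purely additive model, the function names, and the assumption that fast retrieval adds no unsealing delay are our own simplifications and not part of the Filecoin specification.

```python
from dataclasses import dataclass

# Values quoted in the text; everything else here is an illustrative assumption.
BLOCK_TIME_S = 30              # one Filecoin block time
CONFIRMATION_S = 60 * 60       # typical confirmation time for sensitive data
SEALING_S = 3 * 60 * 60        # sealing on standard storage provider hardware


@dataclass(frozen=True)
class LatencyEstimate:
    inclusion_s: int      # time until the transaction lands in a block
    confirmation_s: int   # optional wait for confirmation
    sealing_s: int        # time the provider spends sealing the sector

    @property
    def total_s(self) -> int:
        # Assumption: the stages run one after another (pessimistic bound).
        return self.inclusion_s + self.confirmation_s + self.sealing_s


def confirmation_epochs() -> int:
    """Number of 30-second blocks that fit into the 1-hour confirmation time."""
    return CONFIRMATION_S // BLOCK_TIME_S


def estimate_round_trip(wait_for_confirmation: bool,
                        sealing_s: int = SEALING_S) -> LatencyEstimate:
    """Hypothetical upper bound on publish-to-download latency.

    Assumes fast retrieval is enabled, so no unsealing time is added.
    """
    confirmation = CONFIRMATION_S if wait_for_confirmation else 0
    return LatencyEstimate(BLOCK_TIME_S, confirmation, sealing_s)


if __name__ == "__main__":
    for wait in (False, True):
        est = estimate_round_trip(wait)
        print(f"wait_for_confirmation={wait}: {est.total_s / 3600:.2f} h")
```

Under these assumptions sealing dominates the estimate, so a provider that seals faster than the 3-hour baseline shortens the round trip almost one-for-one.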

Conclusion and Future Directions

This article explores how to build DA on an existing DSN, namely Filecoin. We consider the requirements of DA as a key element of Ethereum's scaling infrastructure. We assess the feasibility of building DA on a DSN using Filecoin as the example, and use it to explore the opportunities that a Filecoin-based solution would bring to the Ethereum ecosystem, or to any other platform that would benefit from a cost-effective DA layer.

Filecoin demonstrates that a DSN can significantly improve data storage efficiency in blockchain-based decentralized systems, saving $100 million for every 32 GB of data written at current market prices. Although the demand for DA is not yet sufficient to fill a 32 GB sector, the cost advantage remains even when sectors are sealed with empty space. Although the current storage and retrieval latency on Filecoin is not suitable for hot storage needs, specific storage provider configurations can provide reasonable performance, making data available within 3 hours.

The increased trust in Filecoin storage providers can be adjusted through variable collateral, such as in EigenDA. Filecoin extends this adjustable security to allow for a large number of replicas to be stored on the network, adding adjustable Byzantine fault tolerance. In order to robustly prevent data withholding attacks, the problem of guaranteed and high-performance data retrieval needs to be solved, but as with any other solution, the only way to truly guarantee retrievability is to self-host a node or trust the infrastructure provider.

We see opportunities for DA in the further development of PoDSI, which could (along with Filecoin's current proofs) replace DAS to guarantee data inclusion in larger sealed sectors. Depending on the situation, this could make slow data turnover tolerable, as fraud proofs can be issued within a window of 1 day to 1 week, while DA guarantees can be computed on demand. PoDSI is still a new technology under heavy development, so we don't yet know what an efficient PoDSI will look like, nor which mechanisms are needed to build systems around it. Since there are already solutions for computing on Filecoin data, it may not be far-fetched to have solutions that compute PoDSI on sealed or unsealed data.
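To make the tolerance argument concrete, the following sketch compares the 3-hour worst-case availability latency assumed earlier with the 1-day and 1-week fraud proof windows mentioned above. The helper names are hypothetical, and the comparison is simple arithmetic rather than a model of any particular rollup.

```python
from datetime import timedelta

# Figures taken from the text; the helpers below are illustrative only.
WORST_CASE_AVAILABILITY = timedelta(hours=3)
FRAUD_PROOF_WINDOWS = {
    "1 day": timedelta(days=1),
    "1 week": timedelta(weeks=1),
}


def remaining_window(window: timedelta,
                     latency: timedelta = WORST_CASE_AVAILABILITY) -> timedelta:
    """Time left to issue a fraud proof once the data has become available."""
    if latency > window:
        raise ValueError("data would become available after the window closes")
    return window - latency


def window_fraction_used(window: timedelta,
                         latency: timedelta = WORST_CASE_AVAILABILITY) -> float:
    """Share of the fraud proof window consumed by availability latency."""
    return latency / window


if __name__ == "__main__":
    for label, window in FRAUD_PROOF_WINDOWS.items():
        left = remaining_window(window)
        used = window_fraction_used(window)
        print(f"{label}: {left} left, {used:.1%} of the window used")
```

Even for the shorter window, the assumed worst-case latency consumes one eighth of the available time, which is the sense in which slow data turnover may be tolerable.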

As the DA and Filecoin space evolves, new combinations of solutions and supporting technologies may lead to new proofs of concept. As shown by Solana’s integration with the Filecoin network, DSN has potential as a scaling technology. The cost of data storage on Filecoin presents an open opportunity with a lot of room for optimization. While the challenges discussed in this article are presented in the context of supporting DA, their ultimate solutions will build a host of new tools and systems outside of DA.

The relevant chart data comes from Filecoin spec, EIP-4844, EigenDA, Celestia implementation, Celenium, Starboard, file.app, Rollups DA and Execution, as well as the current approximate market price.

This article is sourced from the internet: A Deep Dive into Data Availability on Filecoin
