Delphi Digital: An in-depth analysis of DeAI's opportunities and challenges

Original author: PonderingDurian, Delphi Digital researcher

Original translation: Pzai, Foresight News

Given that cryptocurrencies are, at their core, open-source software with built-in economic incentives, and that AI is changing how software is written, AI will have an enormous impact on the entire blockchain space.

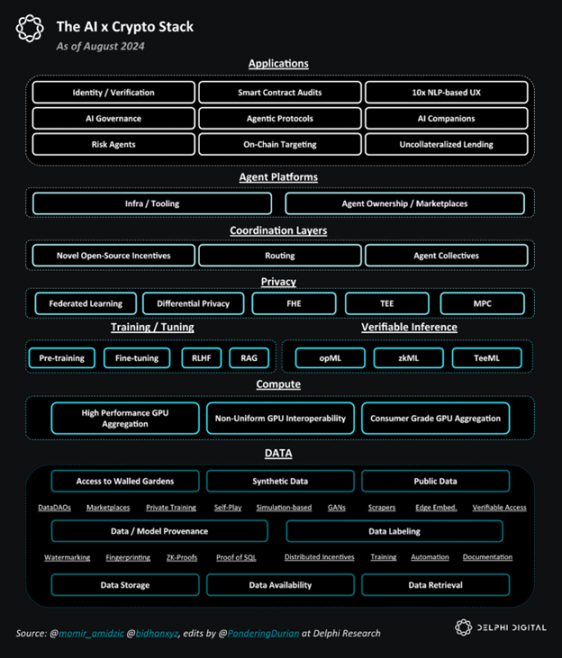

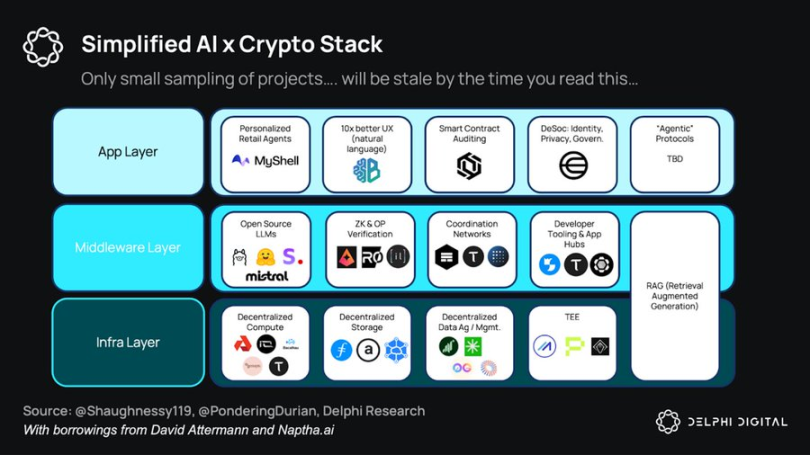

AI x Crypto overall stack

DeAI: Opportunities and Challenges

In my view, the biggest challenge facing DeAI lies in the infrastructure layer, because building foundation models requires enormous capital, and the returns to scale in data and compute are high.

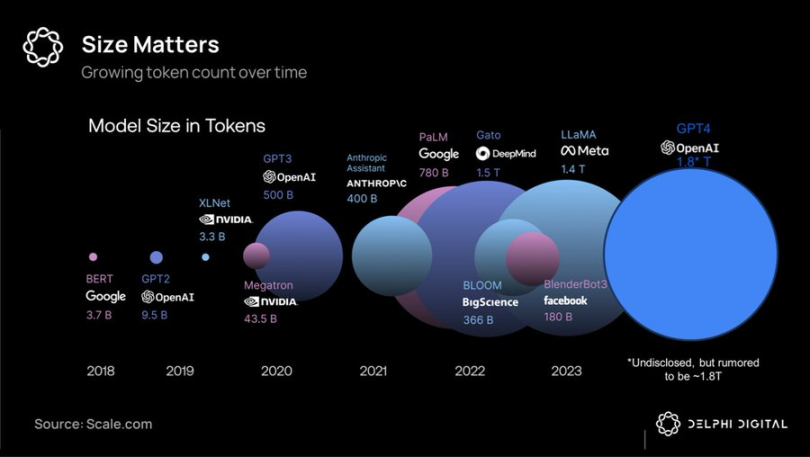

Given scaling laws, the tech giants have a natural advantage. The internet giants earned outsized monopoly profits in the Web2 era by aggregating consumer demand, and reinvested those profits in cloud infrastructure during a decade of artificially low interest rates. They are now trying to capture the AI market by dominating data and compute, the key ingredients of AI:

Comparison of token volumes used to train large models

Because large-scale training is capital-intensive and requires high bandwidth, unified superclusters remain the best option, and they give the tech giants the best-performing closed-source models. These companies plan to rent out those models at monopoly-like margins and reinvest the proceeds in each subsequent product generation.

However, the moat in AI has turned out to be shallower than Web2 network effects, and leading frontier models depreciate quickly relative to the rest of the field. This is especially true now that Meta has adopted a scorched-earth policy, investing tens of billions of dollars to develop open-source frontier models such as Llama 3.1 whose performance reaches SOTA levels.

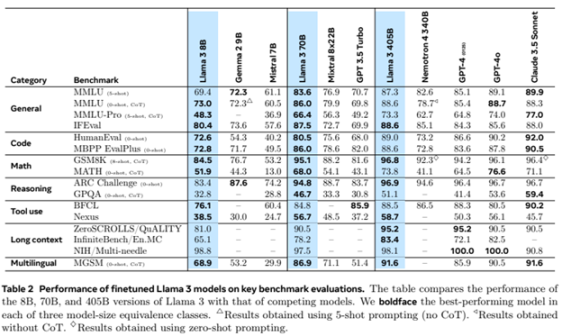

Llama 3 large model ratings

Combined with emerging research on low-latency decentralized training methods, this could commoditize (parts of) the frontier-model business model. As the price of intelligence falls, competition may shift (at least partly) from hardware superclusters, which favor the tech giants, to software innovation, which slightly favors open source and crypto.

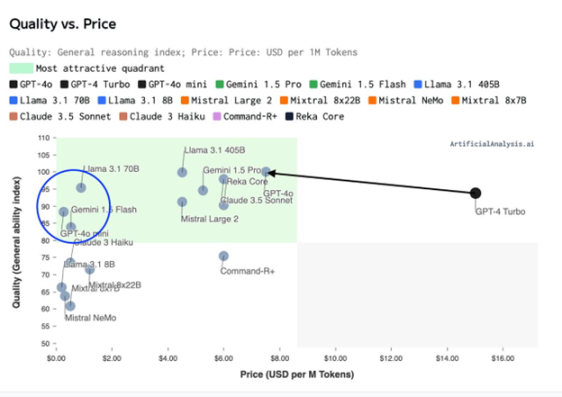

Capability Index (Quality) vs. Training Price distribution chart

Given the computational efficiency of "mixture of experts" architectures and of large-model synthesis/routing, we are probably heading not toward a world of 3-5 giant models but toward a world of millions of models with different cost/performance trade-offs: an interwoven network of intelligence (a hive).

This creates a huge coordination problem, and blockchains and crypto incentive mechanisms should be very well suited to solving it.
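To make the mixture-of-experts point concrete, here is a minimal Python sketch of sparse top-k gating, the mechanism that lets such architectures activate only a few sub-networks per input. It is a toy under stated assumptions: the four "experts" are trivial hypothetical functions, and the gate logits, which a real model would compute from the input with a learned router, are fixed by hand.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_gate(gate_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalise weights over them only."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in ranked])
    return list(zip(ranked, weights))


def moe_forward(x: float, experts: Sequence[Callable[[float], float]],
                gate_logits: Sequence[float], k: int = 2) -> float:
    # Only the k selected experts are evaluated; the rest are skipped entirely,
    # which is where the compute saving of a sparse mixture of experts comes from.
    return sum(w * experts[i](x) for i, w in top_k_gate(gate_logits, k))


# Hypothetical experts; in a real model these would be feed-forward sub-networks.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
# Hand-picked gate logits (a learned router would normally produce these from x).
print(moe_forward(3.0, experts, [0.1, 2.0, 2.0, -1.0]))  # 0.5 * 6 + 0.5 * 9 = 7.5
```

Because only k experts run per input, compute per query scales with k rather than with the total number of experts.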

Core DeAI investment areas

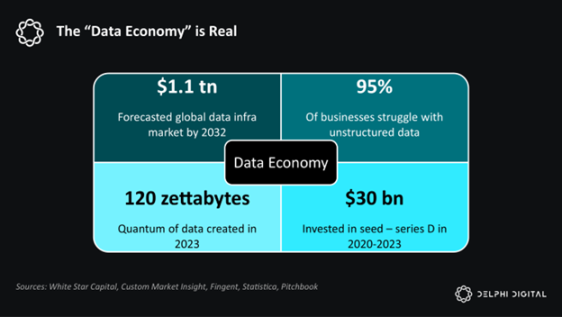

Software is eating the world. AI is eating software. And AI is, at bottom, data and compute.

Delphi is bullish on the components of this stack:

Simplifying the AI x Crypto stack

Infrastructure

Given that AI runs on data and compute, DeAI infrastructure focuses on supplying data and compute as efficiently as possible, usually with the help of crypto incentives. As noted earlier, this is the hardest part of the stack to compete in, but given the size of the end market, it may also be the most rewarding.

Compute

Distributed training protocols and GPU marketplaces have so far been constrained by latency, but they aim to coordinate the potential of heterogeneous hardware to offer lower-cost, on-demand compute to those shut out of the giants' integrated solutions. Companies such as Gensyn, Prime Intellect and Neuromesh are driving the development of distributed training, while io.net, Akash, Aethir and others provide low-cost inference closer to edge intelligence.

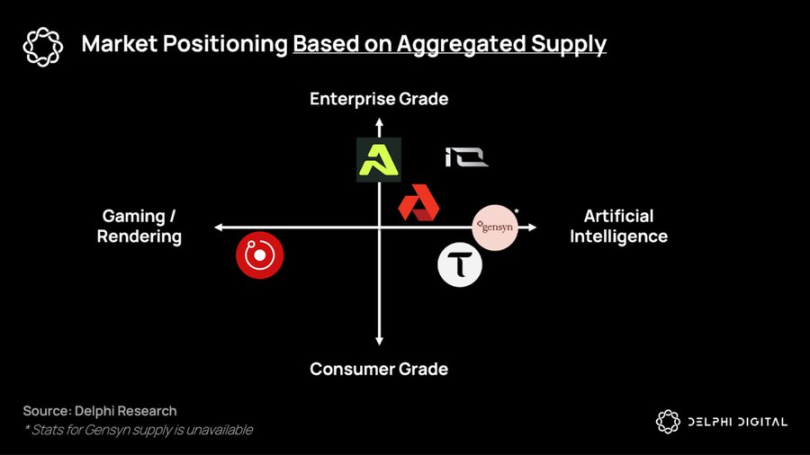

Project niche distribution by total supply

Data

In a world of ubiquitous intelligence built on smaller, more specialized models, data assets become increasingly valuable and monetizable.

So far, DePIN has been praised mainly for its ability to build hardware networks at lower cost than capital-intensive businesses such as telecom companies. But DePIN's largest potential market will be collecting new kinds of datasets that flow into on-chain intelligent systems: agent protocols (discussed later).

In a world where data and compute take the place of labor, the largest potential market of all, AI infrastructure lets non-technical people seize the means of production and contribute to the future networked economy.

Middleware

The ultimate goal of DeAI is to deliver efficient composable compute. Like DeFi's money Legos, DeAI makes up for today's lack of absolute performance through permissionless composability, encouraging an open ecosystem of software and compute primitives that keeps compounding over time and thus (hopefully) eventually surpasses the existing software and compute primitives.

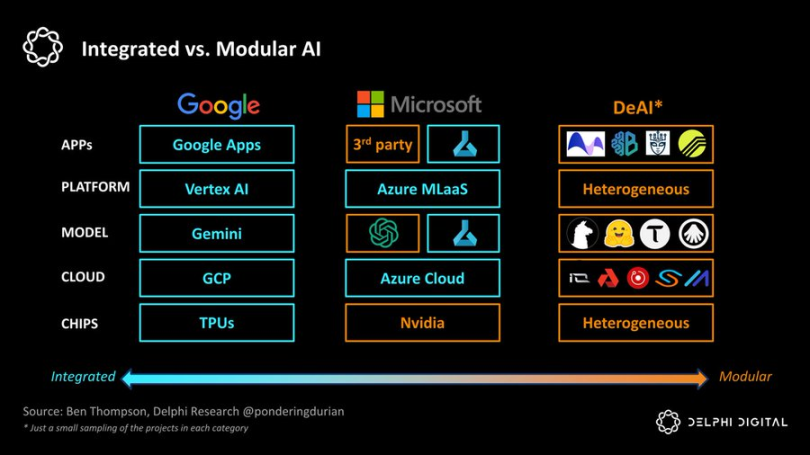

If Google is the extreme of "integration", DeAI represents the extreme of "modularization". As Clayton Christensen reminds us, in emerging industries integrated approaches tend to lead by reducing friction in the value chain, but as the field matures, modular value chains gain ground by increasing competition and cost efficiency at every layer of the stack:

Integrated vs. modular AI

We are quite bullish on several categories that are critical to realizing this modular vision:

Routing

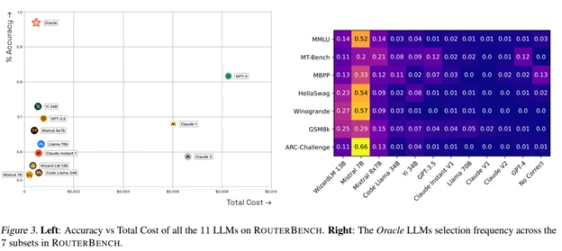

In a world of fragmented intelligence, how do we pick the right model at the right time for the best price? Demand-side aggregators have always captured value (see Aggregation Theory), and routing is critical for optimizing the Pareto curve between performance and cost in a world of networked intelligence:

Bittensor was at the forefront of the first generation of products, but a number of determined competitors have since entered the market.

Allora runs competitions between different models on different "topics" in a context-aware way that improves over time, informing future predictions based on each model's historical accuracy under specific conditions.
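Allora's exact mechanism is not specified in this article, so the following is only a hypothetical Python sketch of the general idea: several forecasters submit predictions, and each one's influence depends on its historical accuracy in the current context. The inverse-mean-absolute-error weighting, the neutral weight for forecasters with no history, and names such as `Forecaster` and `record_outcome` are all assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, List


class Forecaster:
    """Hypothetical worker that tracks its past absolute errors separately per context."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.errors: Dict[str, List[float]] = defaultdict(list)

    def weight(self, context: str, window: int = 20, eps: float = 1e-9) -> float:
        recent = self.errors[context][-window:]
        if not recent:
            return 1.0  # assumption: no track record in this context -> neutral weight
        mae = sum(recent) / len(recent)
        return 1.0 / (mae + eps)  # lower recent error -> larger influence


def aggregate(predictions: Dict[str, float], workers: Dict[str, Forecaster], context: str) -> float:
    """Accuracy-weighted average of the submitted predictions for this context."""
    weights = {name: workers[name].weight(context) for name in predictions}
    total = sum(weights.values())
    return sum(weights[name] * p for name, p in predictions.items()) / total


def record_outcome(predictions: Dict[str, float], workers: Dict[str, Forecaster],
                   context: str, actual: float) -> None:
    # Once the true value is known, log each worker's error under this context.
    for name, p in predictions.items():
        workers[name].errors[context].append(abs(p - actual))


workers = {"a": Forecaster("a"), "b": Forecaster("b")}
# A past round in a "high_vol" regime: a was off by 1.0, b by 3.0.
record_outcome({"a": 101.0, "b": 97.0}, workers, "high_vol", actual=100.0)
print(aggregate({"a": 10.0, "b": 20.0}, workers, "high_vol"))  # about 12.5, pulled toward a
print(aggregate({"a": 10.0, "b": 20.0}, workers, "low_vol"))   # 15.0: no history, equal weights
```

Keying the error history by context is what makes the weighting "context-aware": a forecaster that is reliable in volatile markets need not be trusted equally in calm ones.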

Morpheus aims to be the "demand-side router" for Web3 use cases: essentially an "Apple Intelligence" with an open-source local agent that understands the user's relevant context and can efficiently route queries through the emerging building blocks of DeFi's or Web3's "composable compute" infrastructure.

Agent interoperability protocols such as Theoriq and Autonolas aim to take modular routing to its extreme, allowing a composable, compounding ecosystem of flexible agents or components to grow into a fully mature on-chain service.

In short, in a world where intelligence is rapidly fragmenting, supply-side and demand-side aggregators will be extremely powerful. If Google is a $2 trillion company that indexes the world's information, then the winner of demand-side routing, whether that is Apple, Google or a Web3 solution, will be the company that indexes agent intelligence, and it will operate at a much larger scale.
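The routing trade-off described in this section can be illustrated with a small Pareto-frontier router. This Python sketch assumes a hypothetical catalog of models, each reduced to a single price and a single quality score; a production router would also weigh latency, context and per-query difficulty.

```python
from typing import List, NamedTuple, Optional


class Model(NamedTuple):
    name: str
    cost: float     # hypothetical price per request
    quality: float  # hypothetical benchmark score in [0, 1]


def dominates(a: Model, b: Model) -> bool:
    # a dominates b if it is no worse on both axes and strictly better on at least one.
    return (a.cost <= b.cost and a.quality >= b.quality
            and (a.cost < b.cost or a.quality > b.quality))


def pareto_frontier(models: List[Model]) -> List[Model]:
    """Models not dominated by any other model, sorted from cheapest to most expensive."""
    frontier = [m for m in models if not any(dominates(o, m) for o in models)]
    return sorted(frontier, key=lambda m: m.cost)


def route(models: List[Model], min_quality: float, budget: float) -> Optional[Model]:
    """Cheapest frontier model that meets the quality floor within budget, or None."""
    ok = [m for m in pareto_frontier(models) if m.quality >= min_quality and m.cost <= budget]
    return min(ok, key=lambda m: m.cost) if ok else None


catalog = [
    Model("large", 10.0, 0.90),
    Model("medium", 1.0, 0.80),
    Model("small", 0.1, 0.60),
    Model("overpriced", 2.0, 0.70),  # dominated by "medium": pricier and worse
]
print([m.name for m in pareto_frontier(catalog)])          # ['small', 'medium', 'large']
print(route(catalog, min_quality=0.75, budget=5.0).name)  # medium
```

Discarding dominated models first keeps every choice on the performance/cost Pareto curve; the quality floor and budget then select one point on that curve per request.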

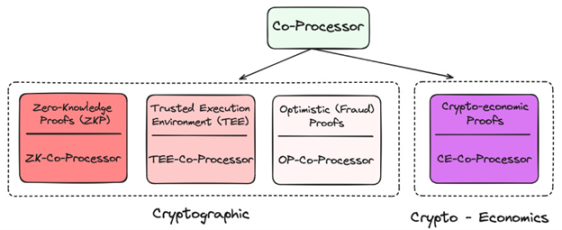

Coprocessors

Because of their decentralized nature, blockchains are highly constrained in both data and compute. How do you bring the compute- and data-intensive AI applications that users need onto the blockchain? Through coprocessors!

Coprocessor application layer in crypto

All of them offer different techniques for verifying that the underlying data or model being used is a valid oracle, which greatly extends what the chain can do while minimizing new trust assumptions. To date, projects have used zkML, opML, TeeML and crypto-economic methods, each with its own trade-offs:

Coprocessor comparison

At a higher level, coprocessors are critical for making smart contracts intelligent: they provide "data warehouse"-like solutions that can be queried for a more personalized on-chain experience, or they verify that a given inference was completed correctly.
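To give a feel for what verifying that an inference was completed correctly can mean, here is a toy Python sketch in the spirit of the optimistic (opML-style) approach: a model's hash is assumed to be registered on-chain, and a challenger re-executes a claimed inference to check it. Real optimistic systems use challenge windows and interactive dispute games over many execution steps, and zkML or TEE-based designs work quite differently; the linear "model" and all function names here are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict


def commit(weights: Dict[str, int]) -> str:
    """SHA-256 over canonical JSON; stands in for a model commitment stored on-chain."""
    return hashlib.sha256(json.dumps(weights, sort_keys=True).encode()).hexdigest()


def run_model(weights: Dict[str, int], x: int) -> int:
    # Hypothetical deterministic "model" (a linear map) so re-execution is reproducible.
    return weights["w"] * x + weights["b"]


@dataclass
class Claim:
    model_hash: str  # which model the prover says it used
    x: int           # input
    y: int           # claimed output


def is_fraudulent(claim: Claim, weights: Dict[str, int], registered_hash: str) -> bool:
    """A challenger's check: confirm the model, re-run it, compare with the claim."""
    if commit(weights) != registered_hash:
        raise ValueError("challenger's weights do not match the registered model")
    if claim.model_hash != registered_hash:
        return True  # the output came from an unregistered model
    return run_model(weights, claim.x) != claim.y


weights = {"w": 2, "b": 1}
registered = commit(weights)  # imagine a smart contract stores this hash
print(is_fraudulent(Claim(registered, 3, 7), weights, registered))  # False (2 * 3 + 1 = 7)
print(is_fraudulent(Claim(registered, 3, 8), weights, registered))  # True
```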

TEE (Trusted Execution Environment) networks such as Super, Phala and Marlin have recently grown increasingly popular because they are practical and can host large-scale applications.

Overall, coprocessors are critical for bridging high-certainty but low-performance blockchains with high-performance but probabilistic agents. Without coprocessors, AI would not be possible on this generation of blockchains.

Developer Incentives

One of the biggest problems with open-source AI development is the lack of incentives to make it sustainable. AI development is highly capital-intensive, and the opportunity cost of both compute and AI knowledge work is very high. Without the right incentives to reward open-source contributions, the field will inevitably lose out to the supercomputers of hyper-capitalism.

From Sentient to Pluralis and from Sahara AI to Mira, these projects aim to launch networks that let decentralized networks of individuals contribute to networked intelligence while giving them appropriate incentives.

With compensation built into the business model, the compounding of open-source solutions will accelerate, giving developers and AI researchers a global alternative to big tech companies and a better chance of being well paid for the value they create.

Although this is very hard to achieve and competition is heating up, the potential market here is enormous.

GNN Models

While large language models identify patterns in large bodies of text and learn to predict the next word, graph neural networks (GNNs) process, analyze and learn from graph-structured data. Because on-chain data consists mostly of complex interactions between users and smart contracts, in other words a graph, GNNs seem a reasonable choice for supporting on-chain AI use cases.
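As an illustration of how a GNN learns from graph-structured data, here is a minimal Python sketch of one message-passing layer with mean aggregation (a GraphSAGE-style update) over a hypothetical wallet/contract interaction graph. The node features, the identity weight matrices and the choice to treat interactions as undirected are assumptions for demonstration; a trained model would learn the weights and stack several such layers.

```python
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def gnn_layer(features: Dict[str, Vector], edges: List[Tuple[str, str]],
              W_self: Matrix, W_neigh: Matrix) -> Dict[str, Vector]:
    """One layer: h_v' = ReLU(W_self h_v + W_neigh * mean of neighbour features)."""
    neighbours: Dict[str, List[str]] = {v: [] for v in features}
    for u, v in edges:  # interactions treated as undirected for simplicity
        neighbours[u].append(v)
        neighbours[v].append(u)
    dim = len(next(iter(features.values())))
    out = {}
    for v, h in features.items():
        nbrs = neighbours[v]
        agg = ([sum(features[u][i] for u in nbrs) / len(nbrs) for i in range(dim)]
               if nbrs else [0.0] * dim)
        out[v] = relu([a + b for a, b in zip(matvec(W_self, h), matvec(W_neigh, agg))])
    return out


# Hypothetical graph: two wallets that both interacted with one DEX contract.
features = {"alice": [1.0, 0.0], "dex": [0.0, 1.0], "bob": [1.0, 1.0]}
edges = [("alice", "dex"), ("bob", "dex")]
identity = [[1.0, 0.0], [0.0, 1.0]]
print(gnn_layer(features, edges, identity, identity))
# {'alice': [1.0, 1.0], 'dex': [1.0, 1.5], 'bob': [1.0, 2.0]}
```

Each layer mixes a node's own features with the average of its neighbours', so after a few layers a wallet's representation reflects the contracts and counterparties it interacts with.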

Projects such as Pond and RPS are trying to build foundation models for Web3, which could be applied to trading, DeFi and even social use cases, such as:

- Price prediction: on-chain behavioral models for price forecasting, automated trading strategies and sentiment analysis
- AI finance: integration with existing DeFi applications, advanced yield strategies and liquidity utilization, better risk management/governance
- On-chain marketing: more targeted airdrops/positioning, recommendation engines based on on-chain behavior

These models will make heavy use of data warehouse solutions such as Space and Time, Subsquid, Covalent and Hyperline, which I am also very bullish on.

GNNs may prove that large blockchain models and Web3 data warehouses are essential supporting tools, together providing OLAP (Online Analytical Processing) capabilities for Web3.

Applications

In my view, on-chain agents may be the key to solving crypto's notorious user-experience problem. More importantly, we have invested billions of dollars in Web3 infrastructure over the past decade, yet demand-side usage remains dismal.

Don't worry, agents are coming...

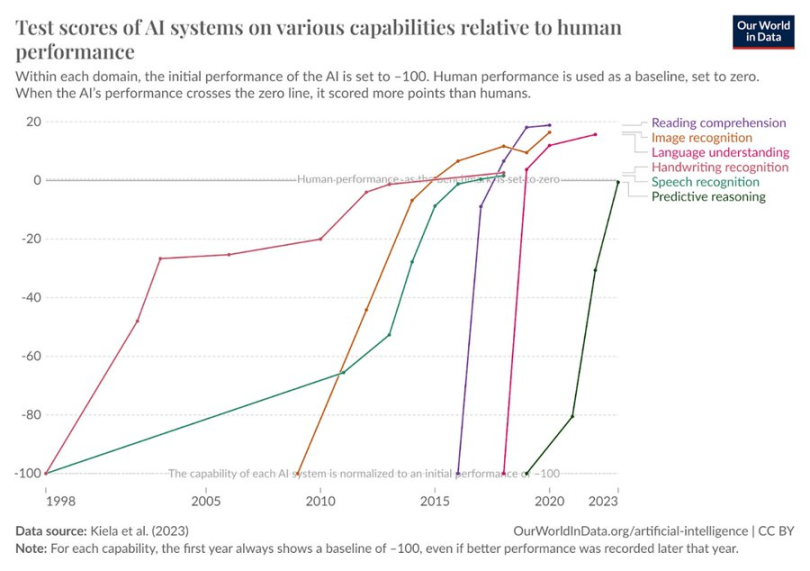

AI test scores have risen across various dimensions of human performance

It seems logical that these agents will use open, permissionless infrastructure for payments and composable compute to achieve increasingly complex end goals. In the coming networked intelligent economy, economic flows may no longer be B -> B -> C but user -> agent -> compute network -> agent -> user. The end product of this flow is the agent protocol: application- or service-oriented businesses with limited overhead that run mostly on on-chain resources. Meeting the needs of end users (or of each other) in a composable network costs far less than it does for traditional businesses. Just as the application layer captured most of the value in Web2, I subscribe to the "fat agent protocol" thesis for DeAI: over time, value capture should shift toward the upper layers of the stack.

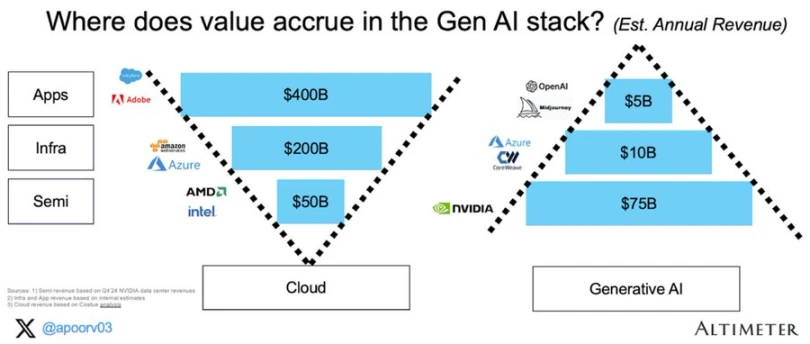

Value accrual in generative AI

The next Google, Facebook and BlackRock will likely be agent protocols, and the components to make them a reality are already being built.

The DeAI Endgame

AI will transform our economy. Today, the market expects this value capture to be confined to a handful of large companies on the west coast of North America. DeAI represents a different vision: an open, composable intelligent network that rewards and compensates even the smallest contributions and features more collective ownership and governance.

Although some of DeAI's claims are overhyped and many projects trade at prices well above their current real traction, the scale of the opportunity matters. For the patient and far-sighted, DeAI's ultimate vision of truly composable compute may justify blockchains themselves.

This article is sourced from the internet: Delphi Digital: An in-depth analysis of DeAI's opportunities and challenges
