Original author: Paul Timofeev

Original translation: TechFlow

Key Takeaways

- With the rise of machine learning and deep learning for generative AI development, compute resources are in ever greater demand, since both require large, compute-intensive workloads. However, as large corporations and governments stockpile these resources, startups and independent developers now face a GPU shortage in the market, making the resources prohibitively expensive and/or inaccessible.

- Compute DePINs create a decentralized marketplace for compute resources such as GPUs by allowing anyone in the world to offer their idle supply in exchange for monetary rewards. The aim is to help underserved GPU consumers tap into new supply channels and obtain the development resources their workloads need at lower cost and with less overhead.

- Compute DePINs still face many economic and technical challenges in competing with traditional centralized service providers; some of these will resolve on their own over time, while others will require new solutions and optimizations.

Compute is the new oil

Since the Industrial Revolution, technology has propelled humanity forward at an unprecedented pace, affecting or completely transforming nearly every aspect of daily life. Computers eventually emerged as the culmination of the collective efforts of researchers, academics, and computer engineers. Originally designed to solve large-scale arithmetic tasks for advanced military operations, computers have become the backbone of modern life. As their impact on humanity continues to grow at an unprecedented rate, demand for these machines and the resources that power them keeps growing too, outstripping available supply. This has created market dynamics in which most developers and businesses cannot access essential resources, leaving the development of machine learning and generative AI, among the most transformative technologies of our time, in the hands of a small number of well-funded players. At the same time, the large supply of idle compute resources presents a lucrative opportunity to help ease the imbalance between compute supply and demand, which heightens the need for coordination mechanisms between the two sides. For this reason, we believe that decentralized systems powered by blockchain technology and digital assets are essential for the broader, more democratic, and more responsible development of generative AI products and services.

Compute resources

Compute can be defined as any activity, application, or workload in which a computer produces a well-defined output from a given input. Ultimately, it refers to the computational and processing power of computers, which is the core utility of these machines; it powers many parts of the modern world and generated a full $1.1 trillion in revenue in the past year alone.

Compute resources refer to the various hardware and software components that make computation and processing possible. As the number of applications and functions they enable continues to grow, these components are becoming increasingly important and increasingly present in people's daily lives. This has led to a race among national powers and businesses to accumulate as many of these resources as possible as a means of survival. This is reflected in the market performance of the companies that provide these resources (e.g., Nvidia, whose market capitalization has risen by more than 3,000% over the past five years).

GPU

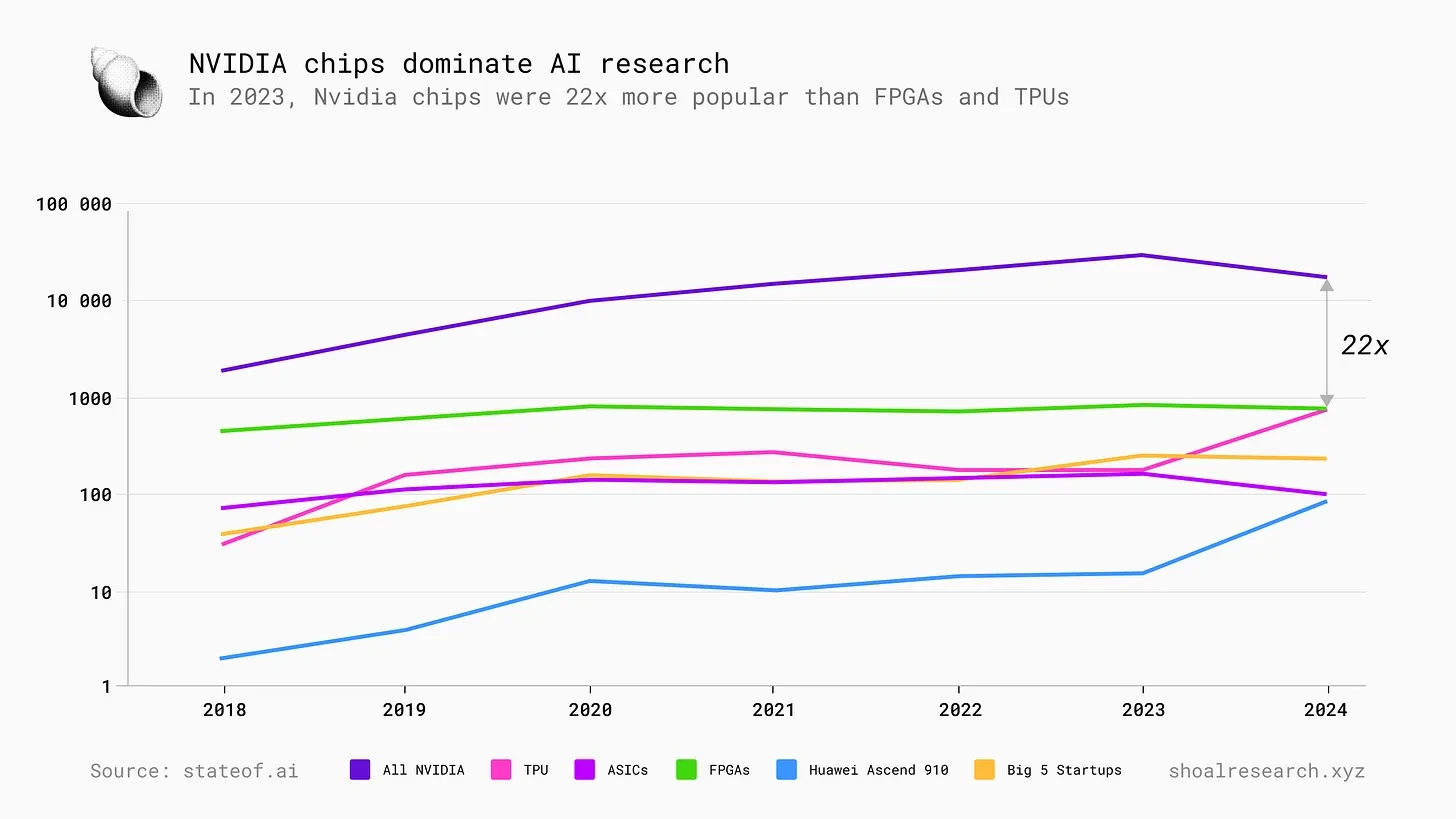

GPUs are among the most important resources in modern high-performance computing. The core function of a GPU is to serve as a specialized circuit that accelerates computer graphics workloads through parallel processing. Originally serving the gaming and PC industries, GPUs have evolved to serve many of the emerging technologies shaping our future (e.g., consoles and PCs, mobile devices, cloud computing, IoT). Demand for these resources has been pushed especially high by the rise of machine learning and AI: by performing computations in parallel, GPUs accelerate ML and AI operations, which increases the processing power and capabilities of the resulting technology.

The rise of AI

At its core, AI means enabling computers and machines to mimic human intelligence and problem-solving abilities. AI models, structured as neural networks, are made up of many different pieces of data. A model needs processing power to identify and learn the relationships between these pieces of data, and then refers back to those relationships when generating outputs from given inputs.

Contrary to popular belief, AI development and production is not new; in the late 1950s, Frank Rosenblatt built the Mark 1 Perceptron, the first neural-network-based computer that "learned" through trial and error. In addition, much of the academic research that laid the groundwork for AI as we know it today was published in the late 1990s and early 2000s, and the industry has continued to grow since.

Beyond R&D, "narrow" AI models are already at work in a variety of powerful applications in use today. Examples include social media algorithms, virtual assistants such as Apple's Siri and Amazon's Alexa, customized product recommendations, and more. Notably, the rise of deep learning has transformed the development of generative AI. Deep learning algorithms use larger, or "deeper," neural networks than traditional machine learning applications, as a more scalable and more versatile alternative. Generative AI models "encode a simplified representation of their training data and draw on it to produce new outputs that are similar, but not identical."

Deep learning allows developers to scale generative AI models to images, speech, and other complex data types. Milestone applications such as ChatGPT, which saw the fastest user growth of modern times, are still only early iterations of what is possible with generative AI and deep learning.

With this in mind, it is no surprise that generative AI development involves multiple compute-intensive workloads that require significant amounts of processing and computational power.

According to the "trifecta of deep learning application requirements," the development of AI applications is constrained by several key workloads:

- Training – Models must process and analyze large datasets to learn how to respond to given inputs.

- Tuning – Models go through a series of iterative processes in which various hyperparameters are adjusted and optimized to improve performance and quality.

- Simulation – Some models, such as reinforcement learning algorithms, go through a series of simulations for testing before deployment.

Compute crunch: demand outstrips supply

Over the past few decades, many technological advances have driven an unprecedented surge in demand for compute and processing power. As a result, demand for compute resources such as GPUs today far exceeds available supply, creating a bottleneck in AI development that will keep growing without effective solutions.

Broader supply constraints are further exacerbated by the large number of companies buying GPUs beyond their actual needs, both as a competitive advantage and as a means of survival in the modern global economy. Compute providers often use contract structures that require long-term capital commitments, leaving customers with more supply than their needs call for.

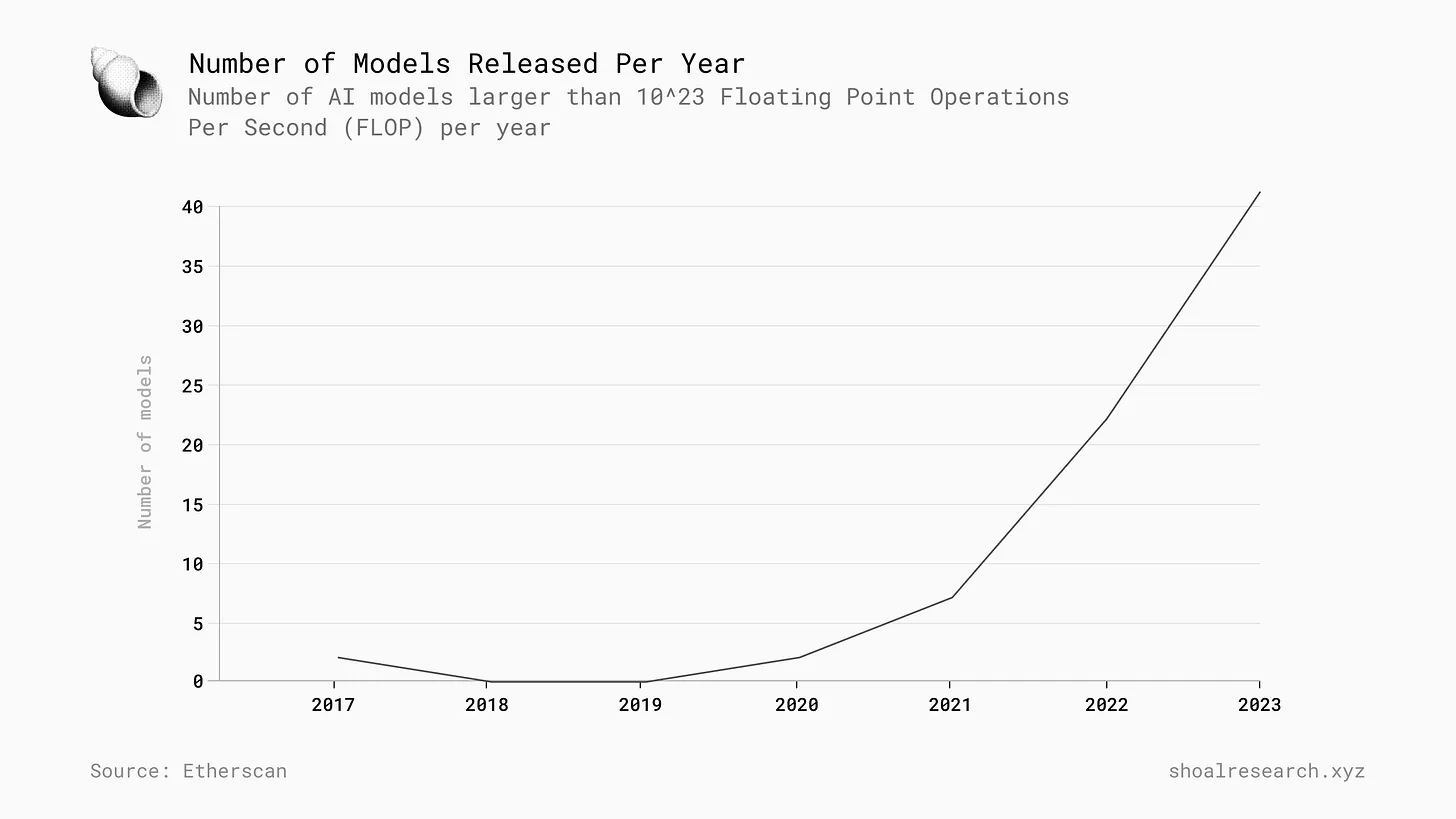

Research from Epoch shows that the total number of compute-intensive AI models being released is growing rapidly, which suggests that the resource requirements driving these technologies will continue to climb quickly.

As the complexity of AI models continues to increase, so will application developers' requirements for compute and processing power. In turn, the performance of GPUs and their subsequent availability will play an increasingly important role. This has already begun to play out, with demand for high-end GPUs such as those produced by Nvidia leading some to hail GPUs as the "rare earth metals" or "gold" of the AI industry.

The rapid commercialization of AI has the potential to hand control to a handful of tech giants, much as in today's social media industry, which has raised concerns about the ethical foundations of these models. A notable example is the recent controversy surrounding Google Gemini. Although its bizarre responses to various prompts posed no real danger at the time, the incident demonstrated the inherent risks of a handful of companies dominating and controlling AI development.

Today's tech startups face growing challenges in acquiring compute resources to power their AI models. These applications perform many compute-intensive operations before a model is deployed. For smaller businesses, amassing large numbers of GPUs is largely unsustainable, and while traditional cloud computing services such as AWS or Google Cloud offer a seamless and convenient developer experience, their limited capacity ultimately leads to high costs that make them unaffordable for many developers. After all, not everyone can raise $7 trillion to cover their hardware costs.

So what's the cause?

Nvidia once estimated that more than 40,000 companies worldwide use GPUs for AI and accelerated computing, alongside a developer community of more than 4 million people. Looking ahead, the global AI market is expected to grow from $515 billion in 2023 to $2.74 trillion in 2032, a compound annual growth rate of 20.4%. Over the same period, the GPU market is expected to reach $400 billion by 2032, growing at roughly 25% per year.
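As a quick sanity check on the growth figures quoted above, the short Python sketch below recomputes the compound annual growth rate implied by the two AI market endpoints. The function names are illustrative, and it assumes the 2023 to 2032 span is treated as nine compounding periods.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# AI market figures from the text, in billions of USD.
# Assumption: 2023 -> 2032 is counted as 9 compounding periods.
implied = cagr(515, 2740, 9)
print(f"Implied AI market CAGR: {implied:.1%}")
print(f"$515B compounded at 20.4% for 9 years: ${project(515, 0.204, 9):,.0f}B")
```

The implied rate lands very close to the quoted 20.4%, so the two endpoints and the growth rate are consistent with each other.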

However, in the wake of the AI revolution, the growing imbalance between the supply of and demand for compute resources could create a rather dystopian future in which a handful of well-funded giants dominate the development of transformative technologies. We therefore believe that all roads lead to decentralized alternatives that help close the gap between the needs of AI developers and the resources available to them.

The role of DePINs

What are DePINs?

DePIN is a term coined by the Messari research team that stands for Decentralized Physical Infrastructure Network. Specifically, decentralized means that there is no single entity extracting rent and restricting access. Physical infrastructure refers to the "real-life" physical resources being used. A network refers to a group of participants working in coordination to achieve a predetermined goal or set of goals. Today, the total market capitalization of DePINs is roughly $28.3 billion.

At the heart of DePINs is a global network of nodes that connects physical infrastructure resources to a blockchain to create a decentralized marketplace linking buyers and suppliers of resources, where anyone can become a supplier and be paid for their services and the value they contribute to the network. In this setup, the central intermediary that restricts access to the network through various legal and regulatory means and service fees is replaced by a decentralized protocol made up of smart contracts and code, governed by its token holders.

The value of DePINs is that they offer a decentralized, accessible, low-cost, and scalable alternative to traditional resource networks and service providers. They allow decentralized marketplaces to serve specific end goals; the cost of goods and services is determined by market dynamics and anyone can participate at any time, which naturally results in lower unit costs as the number of suppliers grows and profit margins are minimized.

Using a blockchain allows DePINs to build crypto-economic incentive systems that ensure network participants are properly compensated for their services, turning core value providers into stakeholders. It is important to note, however, that the network effects achieved by turning small individual networks into larger, more productive systems are key to realizing many of the benefits of DePINs. Moreover, while token rewards have proven to be a powerful tool for bootstrapping networks, building sustainable incentives that support user retention and long-term adoption remains a significant challenge across the broader DePIN space.

How do DePINs work?

To better understand the value of DePINs in enabling a decentralized compute marketplace, it helps to recognize the different structural components involved and how they work together to form a decentralized resource network. Let's look at the structure and participants of a DePIN.

Protocol

A decentralized protocol, a set of smart contracts built on top of an underlying base-layer blockchain network, is used to facilitate trustless interactions between network participants. Ideally, the protocol should be governed by a diverse set of stakeholders who are committed to actively contributing to the long-term success of the network. These stakeholders then use their share of the protocol token to vote on proposed changes and developments to the DePIN. Given that successfully coordinating a distributed network is a major challenge in itself, the core team usually retains the power to implement these changes initially and later hands that power over to a decentralized autonomous organization (DAO).
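To make the idea of stakeholders voting with their share of the protocol token more concrete, here is a minimal Python sketch of a token-weighted tally. It is an illustration only: the quorum and threshold values, the "yes"/"no" option labels, and the function names are all assumptions, and real DePIN governance contracts differ in their details.

```python
from collections import defaultdict
from typing import Dict


def tally(balances: Dict[str, float], votes: Dict[str, str]) -> Dict[str, float]:
    """Sum the token balances behind each option; non-voters are ignored."""
    totals: Dict[str, float] = defaultdict(float)
    for holder, choice in votes.items():
        totals[choice] += balances.get(holder, 0.0)
    return dict(totals)


def passes(totals: Dict[str, float], total_supply: float,
           quorum: float = 0.2, threshold: float = 0.5) -> bool:
    """A proposal passes if turnout meets the quorum and 'yes' holds a majority.

    `quorum` and `threshold` are hypothetical parameters chosen for illustration.
    """
    turnout = sum(totals.values())
    if turnout < quorum * total_supply:
        return False
    return totals.get("yes", 0.0) > threshold * turnout


balances = {"alice": 600.0, "bob": 300.0, "carol": 100.0}
votes = {"alice": "yes", "bob": "no"}  # carol abstains
result = tally(balances, votes)
print(result, "passes:", passes(result, total_supply=1000.0))
```

Weighting by balance means large holders dominate the outcome, which is why the distribution of tokens matters for how decentralized governance really is.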

Network participants

The end users of a resource network are its most valuable participants, and they can be categorized by function.

- Supplier: An individual or organization that provides resources to the network in exchange for monetary rewards paid in the DePIN's native token. Suppliers "connect" to the network through the blockchain-native protocol, which may enforce either a whitelisted on-chain process or a permissionless one. By receiving tokens, suppliers gain a stake in the network, similar to shareholders in the context of equity ownership, which lets them vote on various proposals and developments for the network, such as proposals they believe will help drive demand and network value and thereby raise the token price over time. Of course, suppliers who receive tokens can also treat DePINs as a form of passive income and sell the tokens once they receive them.

- Consumer: An individual or organization actively seeking the resources a DePIN provides, such as an AI startup looking for GPUs; consumers represent the demand side of the economic equation. Consumers are drawn to a DePIN when it offers real advantages over traditional alternatives (such as lower costs and lower overhead requirements), which represents organic demand for the network. DePINs typically require consumers to pay for resources in their native token in order to generate value and maintain a steady cash flow.

Resources

DePINs can serve different markets and adopt different business models for allocating resources. Blockworks offers a useful framework: custom hardware DePINs, which provide dedicated proprietary hardware for suppliers to distribute; and commodity hardware DePINs, which allow existing idle resources to be distributed, including but not limited to compute, storage, and bandwidth.

Economic model

In an ideally run DePIN, value comes from the revenue consumers pay for supplier resources. Ongoing demand for the network means ongoing demand for the native token, which aligns with the economic incentives of suppliers and token holders. Generating sustainable organic demand in the early stages is a challenge for most startups, so DePINs offer inflationary token incentives to attract early suppliers and bootstrap network supply as a way of generating demand and, in turn, more organic supply. This is similar to how venture capital firms subsidized passenger fares in Uber's early days in order to bootstrap an initial customer base, which attracted more drivers and strengthened its network effects.

DePINs need to manage token incentives as strategically as possible, since they play a key role in the overall success of the network. When demand and network revenue rise, token issuance should be reduced. Conversely, when demand and revenue fall, token issuance should be used again to stimulate supply.
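The issuance rule described above (emit less when revenue grows, more when it shrinks) can be sketched as a simple counter-cyclical controller. This is a hypothetical model rather than any specific network's tokenomics: the `sensitivity`, `floor`, and `cap` parameters and the linear response are assumptions made for illustration.

```python
def next_emission(current_emission: float, revenue: float, prev_revenue: float,
                  sensitivity: float = 0.5, floor: float = 0.0,
                  cap: float = 1_000_000.0) -> float:
    """Adjust per-period token emission in the opposite direction of revenue growth.

    All parameters are illustrative assumptions:
    - sensitivity: how strongly emission reacts to a change in revenue
    - floor / cap: hard bounds on emission per period
    """
    if prev_revenue <= 0:
        # No baseline to compare against; leave emission unchanged.
        return current_emission
    growth = (revenue - prev_revenue) / prev_revenue
    adjusted = current_emission * (1 - sensitivity * growth)
    return max(floor, min(cap, adjusted))


# Revenue up 20% -> emission is cut; revenue down 20% -> emission is raised.
print(next_emission(1000.0, revenue=120.0, prev_revenue=100.0))
print(next_emission(1000.0, revenue=80.0, prev_revenue=100.0))
```

A production design would likely smooth revenue over several periods so that a single noisy reading does not swing issuance.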

To better illustrate what a successful DePIN looks like, consider the "DePIN flywheel," a positive feedback loop that drives DePINs. Here is a summary:

- The DePIN distributes inflationary token rewards to incentivize providers to supply resources to the network and establish a baseline level of supply available for consumption.

- Assuming the number of suppliers starts to grow, a competitive dynamic begins to form in the network, improving the overall quality of the goods and services the network provides until it offers services superior to existing market solutions, giving it a competitive edge. This implies decentralized systems outperforming traditional centralized service providers, which is no easy feat.

- Organic demand for the DePIN begins to build, providing legitimate cash flow to suppliers. This presents a compelling opportunity for investors and suppliers to keep driving demand for the network, and with it the token price.

- The rise in token price increases revenue for suppliers, attracts more suppliers, and sets the flywheel spinning again.

This framework offers a compelling growth strategy, although it is important to note that it is largely theoretical and assumes that the resources the network provides remain competitively attractive over time.

Compute DePINs

The decentralized compute marketplace is part of a broader movement known as the "sharing economy," a peer-to-peer economic system in which consumers share goods and services directly with other consumers through online platforms. Pioneered by companies such as eBay and dominated today by the likes of Airbnb and Uber, this model is ultimately ripe for disruption as the next generation of transformative technologies spreads across global markets. Valued at $150 billion in 2023 and expected to reach nearly $800 billion by 2031, the sharing economy reflects a broader trend in consumer behavior that we believe DePINs will both benefit from and play a key role in.

Fundamentals

Compute DePINs are peer-to-peer networks that facilitate the allocation of compute resources by connecting suppliers and buyers through decentralized marketplaces. A key differentiator of these networks is their focus on commodity hardware resources, which many people already own today. As discussed earlier, the advent of deep learning and generative AI has driven a surge in demand for processing power because of their resource-intensive workloads, creating bottlenecks in access to critical resources for AI development. Put simply, decentralized compute marketplaces aim to ease these bottlenecks by creating a new stream of supply, one that spans the globe and that anyone can join.

In a compute DePIN, any individual or organization can lend out their idle resources at any time and receive appropriate compensation. Likewise, any individual or organization can obtain the resources they need from a global, permissionless network at lower cost and with more flexibility than existing market offerings. We can therefore describe the participants in a compute DePIN through a simple economic framework:

- Supply side: An individual or organization that owns compute resources and is willing to lend or sell them in exchange for compensation.

- Demand side: An individual or organization that needs compute resources and is willing to pay a price for them.

Key benefits of compute DePINs

Compute DePINs offer a number of advantages that make them an attractive alternative to centralized service providers and marketplaces. First, enabling permissionless, cross-border market participation unlocks a new stream of supply, increasing the amount of critical resources available for compute-intensive workloads. Compute DePINs focus on hardware resources that most people already own; anyone with a gaming PC has a GPU that can be rented out. This broadens the range of developers and teams who can take part in building the next generation of goods and services, benefiting more people around the world.

Looking further, the blockchain infrastructure underpinning DePINs provides an efficient and scalable settlement rail for the micropayments that peer-to-peer transactions require. Crypto-native financial assets (tokens) provide a shared unit of value that demand-side participants use to pay suppliers, aligning economic incentives through a distribution mechanism suited to today's increasingly globalized economy. Referring back to the DePIN flywheel described earlier, strategically managing economic incentives goes a long way toward strengthening a DePIN's network effects (on both the supply and demand sides), which in turn increases competition among suppliers. This dynamic reduces unit costs while improving service quality, creating a sustainable competitive advantage for the DePIN, one that suppliers can benefit from as token holders and core value providers.

DePINs resemble cloud computing service providers in that they aim to deliver a flexible user experience in which resources can be accessed and paid for on demand. Grand View Research estimates that the global cloud computing market will grow at a compound annual growth rate of 21.2% to exceed $2.4 trillion by 2030, which demonstrates the viability of such business models given the expected future growth in demand for compute resources. Modern cloud computing platforms use central servers to handle all communication between client devices and servers, creating a single point of failure in their operations. Building on top of a blockchain, however, allows DePINs to offer greater censorship resistance and resilience than traditional service providers. An attack on a single organization or entity (e.g., a centralized cloud service provider) can compromise the entire underlying resource network, whereas DePINs are designed to withstand such events through their distributed nature. First, the blockchain itself is a globally distributed network of dedicated nodes designed to resist centralized network authority. In addition, compute DePINs allow permissionless network participation, bypassing legal and regulatory barriers. Depending on how its tokens are distributed, a DePIN can adopt a fair voting process for voting on proposed changes and developments to the protocol, eliminating the possibility that a single entity suddenly shuts down the entire network.

Current state of compute DePINs

Render Network

Render Network is a compute DePIN that connects buyers and sellers of GPUs through a decentralized compute marketplace, with transactions conducted in its native token. Render's GPU marketplace involves two key parties: creators seeking access to processing power, and node operators who rent idle GPUs to creators in exchange for compensation in native Render tokens. Node operators are ranked by a reputation system, and creators can select GPUs from a multi-tier pricing system. The Proof-of-Render (POR) consensus algorithm coordinates operations, with node operators committing their compute resources (GPUs) to process tasks, namely graphics rendering jobs. Once a task is completed, the POR algorithm updates the node operator's status, including a change in reputation score based on the quality of the work. Render's blockchain infrastructure facilitates payment for jobs, providing a transparent and efficient settlement rail for suppliers and buyers to transact in the network token.
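The reputation and tier mechanics described above can be pictured with a small Python sketch. It is not Render's actual Proof-of-Render logic, which the article does not specify: the exponential-moving-average update, the 0 to 1 quality scale, the integer tiers, and every name below are assumptions used only to show how a score might move after each job and feed into node selection.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class NodeOperator:
    node_id: str
    tier: int          # hypothetical pricing tier the node is listed in
    reputation: float  # hypothetical score in [0, 1]


def update_reputation(node: NodeOperator, job_quality: float, weight: float = 0.1) -> None:
    """Blend the latest job quality into the score (exponential moving average)."""
    quality = max(0.0, min(1.0, job_quality))  # clamp to the assumed [0, 1] scale
    node.reputation = (1 - weight) * node.reputation + weight * quality


def pick_node(nodes: List[NodeOperator], tier: int) -> Optional[NodeOperator]:
    """Return the highest-reputation node in the requested tier, if any."""
    candidates = [n for n in nodes if n.tier == tier]
    return max(candidates, key=lambda n: n.reputation) if candidates else None


nodes = [NodeOperator("node-a", 2, 0.50), NodeOperator("node-b", 2, 0.90)]
update_reputation(nodes[0], job_quality=1.0)  # a well-executed job nudges the score up
print(nodes[0].reputation, pick_node(nodes, tier=2).node_id)
```

A moving average keeps one bad job from wiping out a long track record while still letting sustained poor quality pull a node down the ranking.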

Render Network was originally conceived by Jules Urbach in 2009. The network went live on Ethereum (RNDR) in September 2020 and migrated to Solana (RENDER) roughly three years later to improve network performance and reduce operating costs.

As of this writing, Render Network has processed up to 33 million tasks (measured in frames rendered) and has grown to 5,600 total nodes since its inception. Roughly 60k RENDER has been burned, a process that takes place when work credits are distributed to node operators.

Io Net

Io Net is launching a decentralized GPU network on top of Solana as a coordination layer between the large supply of idle compute resources and the individuals and organizations that need the processing power those resources provide. Io Net's unique selling point is that, rather than competing directly with other DePINs on the market, it aggregates GPUs from a variety of sources (including data centers, miners, and other DePINs such as Render Network and Filecoin) and uses the Internet of GPUs (IoG), a proprietary DePIN, to coordinate operations and align the incentives of market participants. Io Net customers can customize their workload clusters on IO Cloud by choosing processor type, location, communication speed, compliance, and service duration. Conversely, anyone with a supported GPU model (12 GB RAM, 256 GB SSD) can participate as an IO Worker by lending their idle compute resources to the network. Service payments are currently made in fiat and USDC, and the network will soon support payments in the native $IO token as well. The price of resources is determined by supply and demand as well as by various GPU specifications and configuration algorithms. Io Net's ultimate goal is to become the GPU marketplace of choice by offering lower costs and higher quality of service than modern cloud service providers.
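A rough way to picture the two sides described above is an eligibility check for Workers plus a filter that assembles a cluster from matching machines. The Python sketch below is hypothetical and is not the IO Cloud API: the field names, the placeholder GPU model strings, and the reading of "12 GB RAM, 256 GB SSD" as minimum host requirements are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Set

# Assumption: the figures quoted in the text are treated as minimum host specs.
MIN_RAM_GB = 12
MIN_SSD_GB = 256


@dataclass(frozen=True)
class Worker:
    worker_id: str
    gpu_model: str   # placeholder model names, not a real supported-device list
    location: str
    ram_gb: int
    ssd_gb: int


def is_eligible(worker: Worker, supported_models: Set[str]) -> bool:
    """A worker qualifies if its GPU is supported and the host meets the minimums."""
    return (worker.gpu_model in supported_models
            and worker.ram_gb >= MIN_RAM_GB
            and worker.ssd_gb >= MIN_SSD_GB)


def select_cluster(workers: List[Worker], supported_models: Set[str],
                   gpu_model: str, location: str, size: int) -> List[Worker]:
    """Pick the first `size` eligible workers matching the request, or [] if too few."""
    matches = [w for w in workers
               if is_eligible(w, supported_models)
               and w.gpu_model == gpu_model
               and w.location == location]
    return matches[:size] if len(matches) >= size else []


workers = [
    Worker("w1", "gpu-x", "us", 24, 512),
    Worker("w2", "gpu-x", "us", 8, 512),   # too little RAM, so filtered out
    Worker("w3", "gpu-x", "us", 16, 256),
]
print([w.worker_id for w in select_cluster(workers, {"gpu-x"}, "gpu-x", "us", 2)])
```

A real scheduler would also weigh the other options the text mentions, such as communication speed, compliance, and service duration.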

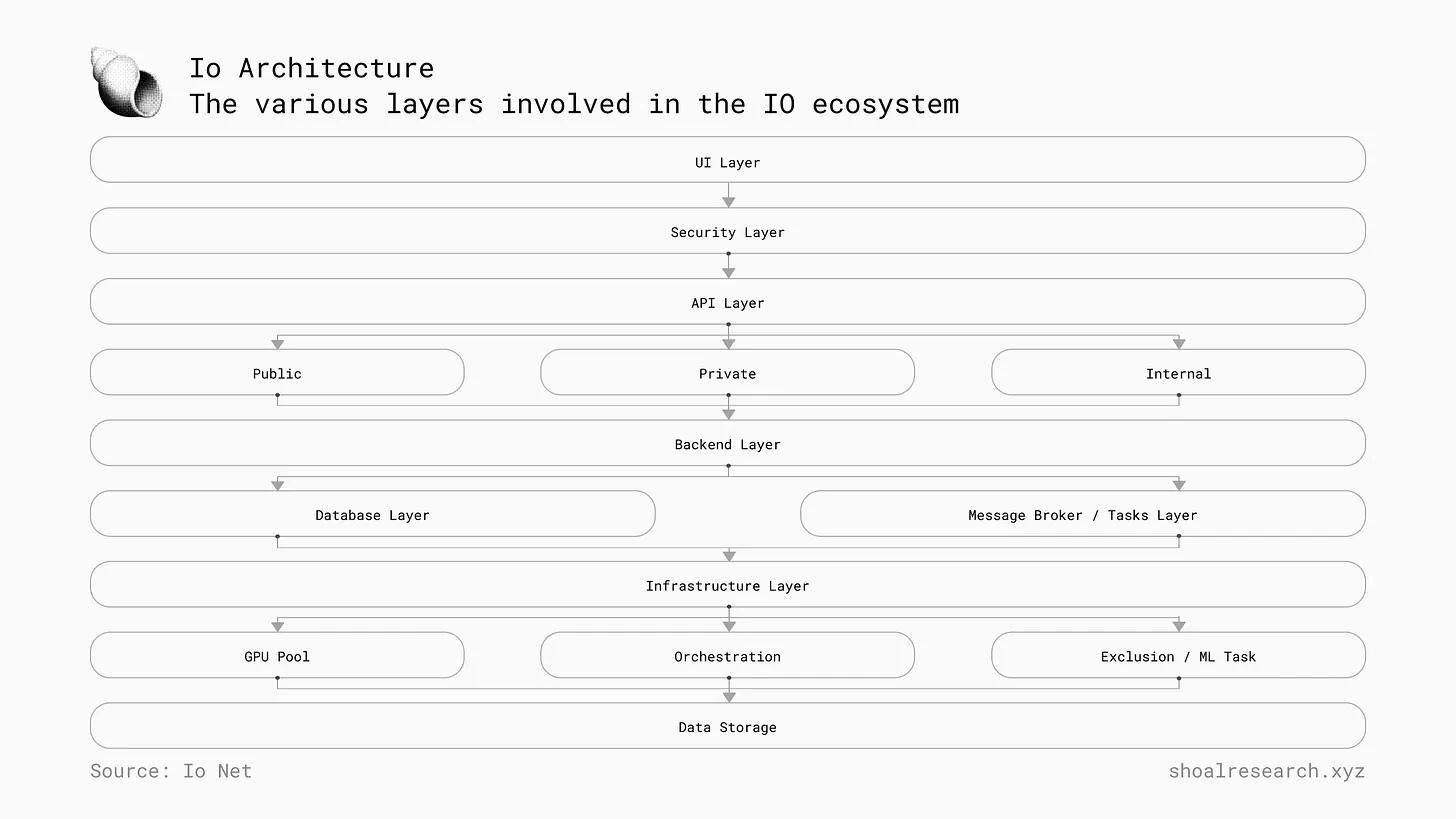

The multi-layer IO architecture can be mapped out as follows:

- UI layer – Consists of the public website, the customer area, and the Workers area.

- Security layer – Consists of firewalls for network protection, authentication services for user verification, and logging services for tracking activity.

- API layer – Acts as the communication layer and consists of a public API (for the website), a private API (for Workers), and an internal API (for cluster management, analytics, and monitoring reports).

- Backend layer – Manages Workers, cluster/GPU operations, customer interactions, billing and usage monitoring, analytics, and autoscaling.

- Database layer – Serves as the system's data store, using primary storage (for structured data) and a cache (for frequently accessed temporary data).

- Message broker and task layer – Facilitates asynchronous communication and task management.

- Infrastructure layer – Contains the GPU pools and orchestration tools, and manages task deployment.

Current statistics/roadmap

As of this writing:

- Total network revenue – $1.08M

- Total compute hours – 837.6K hours

- Total cluster-ready GPUs – 20.4K

- Total cluster-ready CPUs – 5.6K

- Total on-chain transactions – 1.67M

- Total inferences – 335.7K

- Total clusters created – 15.1K

(Data from the Io Net explorer)

Aethir

Aethir is a cloud computing DePIN that facilitates the sharing of high-performance compute resources across compute-intensive domains and applications. It uses resource pooling to achieve global GPU allocation at significantly reduced cost, and achieves decentralized ownership through distributed resource ownership. Aethir is built for high-performance workloads and suits sectors such as gaming and AI model training and inference. By unifying GPU clusters into a single network, Aethir is designed to increase cluster size and thereby improve the overall performance and reliability of the services offered on its network.

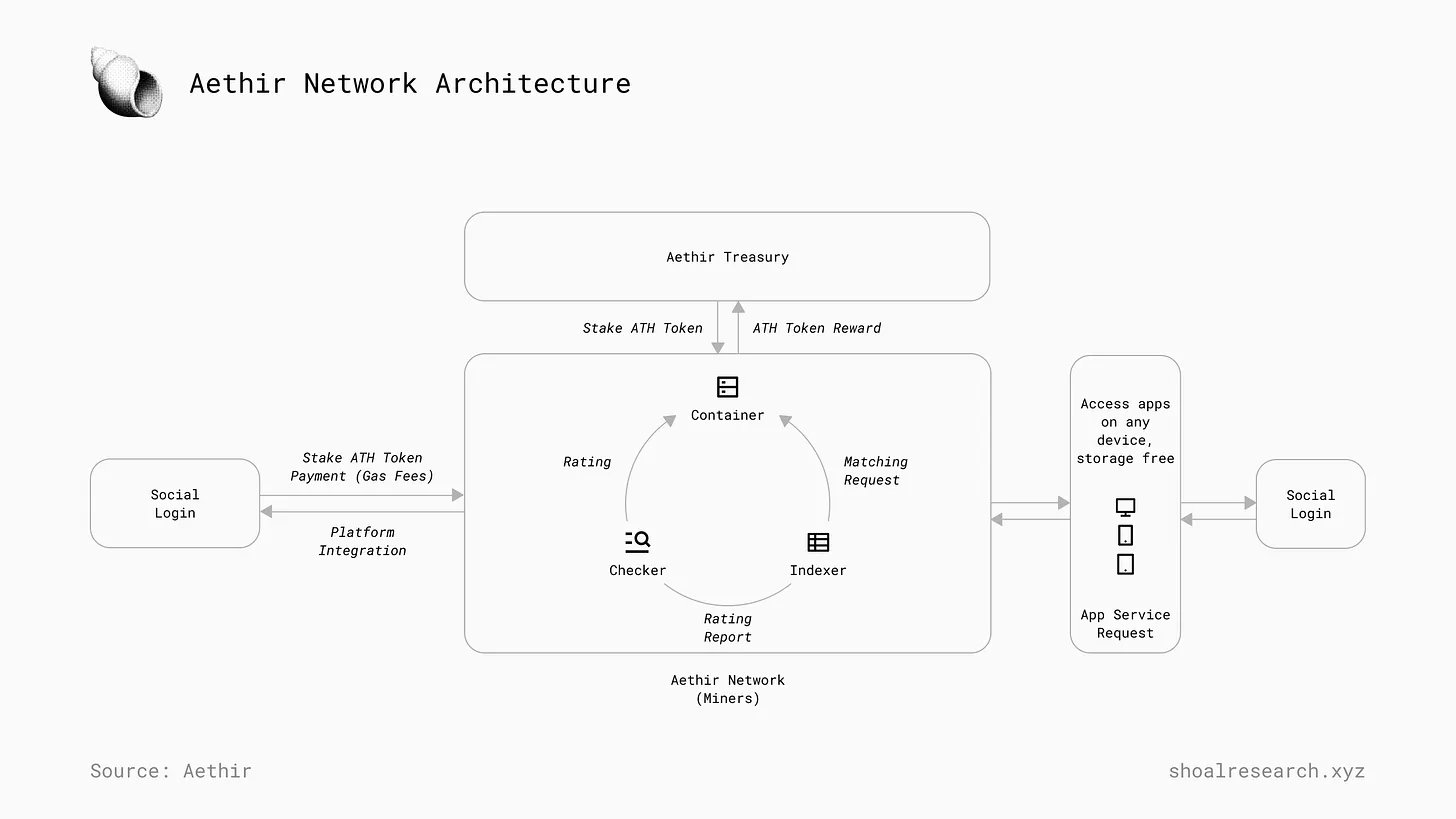

Aethir Network is a decentralized economy made up of miners, developers, users, token holders, and the Aethir DAO. The three key roles that keep the network running successfully are containers, indexers, and checkers. Containers are the core nodes of the network, carrying out the essential operations that keep the network live, such as validating transactions and rendering digital content in real time. Checkers act as quality assurance, continuously monitoring the performance and service quality of containers to ensure reliable and efficient operation for GPU consumers. Indexers act as matchmakers between users and the best available containers. Underpinning this structure is the Arbitrum Layer 2 blockchain, which provides a decentralized settlement layer for paying for goods and services on Aethir Network in native $ATH tokens.

Proof of Rendering

Nodes in the Aethir network have two core functions: Proof of Rendering Capacity, in which a group of worker nodes is randomly selected every 15 minutes to validate transactions; and Proof of Rendering Work, which closely monitors network performance to make sure users are served optimally, adjusting resources according to demand and geography. Miner rewards are distributed to participants who run nodes on the Aethir network, are calculated from the value of the compute resources they lend, and are paid out in the native $ATH token.
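The "random group every 15 minutes" idea can be illustrated with a small epoch-based sampler. The Python sketch below is not Aethir's selection algorithm: deriving the seed from a hash of the epoch number is an assumption made so the example is reproducible, and a production network would need a randomness source that participants cannot predict or bias.

```python
import hashlib
import random
from typing import List

EPOCH_SECONDS = 15 * 60  # one selection round every 15 minutes, as in the text


def epoch_index(unix_time: int) -> int:
    """Number of whole 15-minute epochs elapsed since the Unix epoch."""
    return unix_time // EPOCH_SECONDS


def select_validators(node_ids: List[str], unix_time: int, k: int) -> List[str]:
    """Pick k nodes for the current epoch; every caller gets the same group.

    Assumption: the seed is a hash of the epoch number. This is predictable
    and therefore for illustration only.
    """
    digest = hashlib.sha256(str(epoch_index(unix_time)).encode()).digest()
    rng = random.Random(int.from_bytes(digest, "big"))
    # Sort first so the result does not depend on the caller's list order.
    return rng.sample(sorted(node_ids), min(k, len(node_ids)))


nodes = [f"node-{i}" for i in range(10)]
print(select_validators(nodes, unix_time=1_700_000_000, k=3))
```

Because the seed depends only on the epoch, any observer can recompute the group for a given 15-minute window and verify who was supposed to validate.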

Nosana

Nosana is a decentralized GPU network built on Solana. It allows anyone to contribute idle compute resources and be rewarded for doing so in $NOS tokens. The DePIN facilitates cost-effective allocation of GPUs that can be used to run complex AI workloads without the overhead of traditional cloud solutions. Anyone can run a Nosana node by lending out their idle GPUs, earning token rewards proportional to the GPU power they provide to the network.
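Rewards "proportional to the GPU power provided" amount to a pro-rata split of a reward pool. The Python sketch below shows that arithmetic under stated assumptions: the pool size, the unit used for GPU power, and the function name are hypothetical, and Nosana's actual reward formula may include factors not described here.

```python
from typing import Dict


def split_rewards(pool: float, gpu_power: Dict[str, float]) -> Dict[str, float]:
    """Divide a reward pool among nodes in proportion to their contributed GPU power."""
    total = sum(gpu_power.values())
    if total <= 0:
        # Nothing was contributed, so nothing is paid out.
        return {node: 0.0 for node in gpu_power}
    return {node: pool * power / total for node, power in gpu_power.items()}


# Hypothetical example: node-a contributes three times the power of node-b.
print(split_rewards(100.0, {"node-a": 3.0, "node-b": 1.0}))
```

With these inputs node-a receives three quarters of the pool and node-b one quarter.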

The network connects the two parties involved in allocating compute resources: users seeking access to compute resources, and node operators who provide them. Important protocol decisions and upgrades are voted on by NOS token holders and governed by the Nosana DAO.

Nosana has an extensive roadmap for the future. Galactica (v1.0, H1/H2 2024) will launch mainnet, release the CLI and SDK, and focus on growing the network through container nodes for consumer GPUs. Triangulum (v1.X, H2 2024) will integrate major machine learning protocols and connectors such as PyTorch, HuggingFace, and TensorFlow. Whirlpool (v1.X, H1 2025) will expand support to a range of GPUs from AMD, Intel, and Apple Silicon. Sombrero (v1.X, H2 2025) will add support for medium and large enterprises, fiat payments, billing, and team features.

Akash

Akash Network is an open-source proof-of-stake network built on the Cosmos SDK that lets anyone join and contribute without permission, creating a decentralized cloud computing marketplace. $AKT tokens are used to secure the network, facilitate payment for resources, and coordinate economic behavior among network participants. Akash Network consists of several key components:

-

The blockchain layer uses Tendermint Core and the Cosmos SDK to provide consensus.

-

The application layer manages deployments and resource allocation.

-

The provider layer manages resources, bidding, and user application deployment.

-

The user layer lets users interact with the Akash Network, manage resources, and monitor application status using the CLI, console, and dashboard.

The network initially focused on storage and CPU leasing services. As demand for AI training and inference workloads grew, it expanded its services to include GPU leasing and allocation, responding to these needs through the AkashML platform. AkashML uses a reverse auction system in which customers (called tenants) submit their desired GPU prices and compute suppliers (called providers) compete to supply the requested GPUs.
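The reverse auction can be sketched in a few lines. This is a simplified model under stated assumptions: the tenant posts one maximum price, each provider submits one bid, and the lowest eligible bid wins. The `Bid` type, the provider names, and the price unit are hypothetical and do not reflect Akash's actual on-chain order and bid formats.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class Bid:
    provider: str   # hypothetical provider identifier
    price: float    # asking price per GPU-hour, in an arbitrary unit

def select_winner(max_price: float, bids: List[Bid]) -> Optional[Bid]:
    """Return the cheapest bid at or below the tenant's maximum price.

    In a reverse auction the sellers compete downward, so the lowest
    eligible price wins. Returns None when no bid is eligible.
    """
    eligible = [bid for bid in bids if bid.price <= max_price]
    if not eligible:
        return None
    return min(eligible, key=lambda bid: bid.price)

bids = [Bid("p1", 1.40), Bid("p2", 0.95), Bid("p3", 1.10)]
winner = select_winner(max_price=1.20, bids=bids)
print(winner)
```

Here `p1` is excluded for exceeding the tenant's maximum, and `p2` wins with the lowest remaining price.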

At the time of writing, the Akash blockchain has completed more than 12.9 million transactions, more than $535,000 has been spent on access to compute resources, and more than 189,000 unique deployments have been leased.

Honorable Mentions

The compute DePIN space is still evolving, and many teams are competing to bring innovative and efficient solutions to market. Other examples worth further research include Hyperbolic, which is building a collaborative open-access platform for pooling resources for AI development, and Exabits, which is building a distributed compute network supported by compute miners.

Key considerations and future outlook

Now that we understand the basic principles of compute DePINs and have reviewed several complementary case studies currently underway, it is important to consider the impact of these decentralized networks, including both their advantages and disadvantages.

Challenges

Building distributed networks at scale often requires trade-offs in performance, security, and resilience. For example, training an AI model on a globally distributed network of commodity hardware may be far less cost-effective and time-efficient than training it with a centralized service provider. As noted earlier, AI models and workloads are becoming increasingly complex, requiring high-performance GPUs rather than commodity GPUs.

This is why large companies are hoarding high-performance GPUs in large quantities, and it is an inherent challenge for compute DePINs that aim to solve the GPU shortage by establishing a permissionless marketplace where anyone can lend out idle GPUs (see this tweet for more on the challenges facing decentralized AI protocols). Protocols can address this in two key ways: first, by setting baseline requirements for GPU providers who want to contribute to the network, and second, by pooling the compute resources supplied to the network to achieve a greater whole. Still, this model is inherently difficult to establish compared with centralized service providers, who can allocate more funds to deal directly with hardware suppliers such as Nvidia. This is something DePINs should consider as they move forward. If a decentralized protocol has a large enough treasury, the DAO can vote to allocate part of the funds to purchase high-performance GPUs that can be managed in a decentralized manner and lent out at a higher price than commodity GPUs.

Another challenge specific to compute DePINs is managing appropriate resource utilization. In their early stages, most compute DePINs will face a structural lack of demand, just as many startups do today. In general, the challenge for DePINs is to build enough supply early on to reach minimum viable product quality. Without supply, the network cannot generate sustainable demand or serve its customers during periods of peak demand. On the other hand, excess supply is also a problem. Above a certain threshold, more supply only helps when network utilization is at or near full capacity. Otherwise, DePINs risk overpaying for supply, leaving resources underutilized, and suppliers will earn less revenue unless the protocol increases token issuance to keep them engaged.

A telecommunications network is useless without broad geographic coverage. A taxi network is useless if passengers have to wait a long time for a ride. A DePIN is useless if it has to pay people to supply resources over a long period. While centralized service providers can forecast resource demand and manage supply efficiently, compute DePINs have no central authority to manage resource utilization. It is therefore especially important for DePINs to plan resource utilization as strategically as possible.

A bigger problem may be that the decentralized GPU marketplace no longer faces a GPU shortage. In a recent interview, Mark Zuckerberg said he believes energy, rather than compute resources, will become the new bottleneck, because companies will now compete to build data centers at scale instead of hoarding compute resources as they do today. This of course implies a potential reduction in GPU costs, but it also raises the question of how AI startups will compete with large companies on performance and on the quality of the goods and services they provide if building proprietary data centers raises the overall bar for AI model performance.

The case for compute DePINs

To reiterate, the gap is widening between the complexity of AI models, with their attendant processing and compute requirements, and the available high-performance GPUs and other compute resources.

Compute DePINs are poised to be innovative disruptors in compute markets that are dominated today by major hardware manufacturers and cloud computing service providers, based on the following key capabilities:

1) Deliver goods and services at lower cost.

2) Provide stronger censorship resistance and network resilience.

3) Benefit from potential regulatory guidelines that may require AI models to be as open as possible and readily accessible to everyone for fine-tuning and training.

The percentage of households in the United States with a computer and internet access has grown exponentially, approaching 100%. The percentage has also increased significantly in many other parts of the world. This suggests a growing number of potential compute resource providers (GPU owners) who would be willing to lend out their idle supply given sufficient monetary incentives and a seamless transaction process. This is of course a very rough estimate, but it suggests that the foundation for building a sustainable sharing economy of compute resources may already exist.

Beyond AI, future demand for compute will come from many other industries, such as quantum computing. The quantum computing market is expected to grow from $928.8 million in 2023 to $6,528.8 million in 2030, a CAGR of 32.1%. Production in this industry will require different kinds of resources, but it will be interesting to see whether any quantum computing DePINs launch and what they look like.
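The quoted growth rate can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short sketch below applies it to the two market-size figures from the text, assuming seven compounding years between 2023 and 2030.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over `years` years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

# Market size in millions of USD, taken from the figures quoted above.
rate = cagr(928.8, 6528.8, 2030 - 2023)
print(f"{rate:.1%}")
```

The result rounds to roughly 32.1%, which is consistent with the figure cited.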

“A strong ecosystem of open models running on consumer hardware is an important hedge to protect against a future where value captured by AI is hyper-concentrated and most human thought becomes read and mediated by a few central servers controlled by a few people. Such models are also much lower in terms of doom risk than both corporate megalomania and militaries.” - Vitalik Buterin

Large enterprises may not be, and likely will not be, the target audience for DePINs. Compute DePINs bring back individual developers, scrappy builders, and startups with minimal funding and resources. They allow idle supply to be turned into innovative ideas and solutions, enabled by more abundant compute. AI will undoubtedly change the lives of billions of people. Rather than worrying about AI replacing everyone's jobs, we should promote the idea that AI can empower individual and independent entrepreneurs, startups, and the public at large.
