IOSG Ventures: จากซิลิกอนสู่ปัญญาประดิษฐ์ คำอธิบายโดยละเอียดของเทคโนโลยีการฝึกอบรมและการใช้เหตุผลของ AI

ผู้เขียนต้นฉบับ: IOSG Ventures

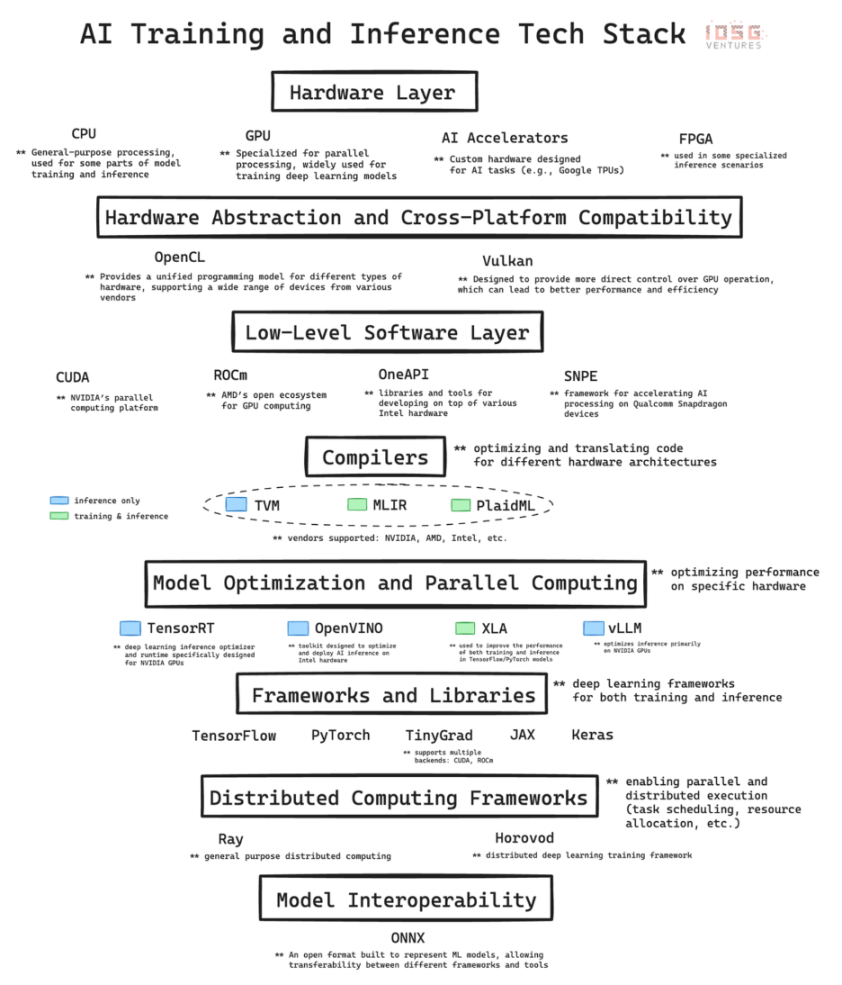

The rapid development of artificial intelligence is based on a complex infrastructure. The AI technology stack is a layered architecture consisting of hardware and software, which is the backbone of the current AI revolution. Here, we will analyze the main layers of the technology stack in depth and explain the contribution of each layer to AI development and implementation. Finally, we will reflect on the importance of mastering these basics, especially when evaluating opportunities in the intersection of cryptocurrency and AI, such as DePIN (decentralized physical infrastructure) projects, such as GPU networks.

1. Hardware layer: Silicon foundation

At the lowest level is the hardware, which provides the physical computing power for artificial intelligence.

-

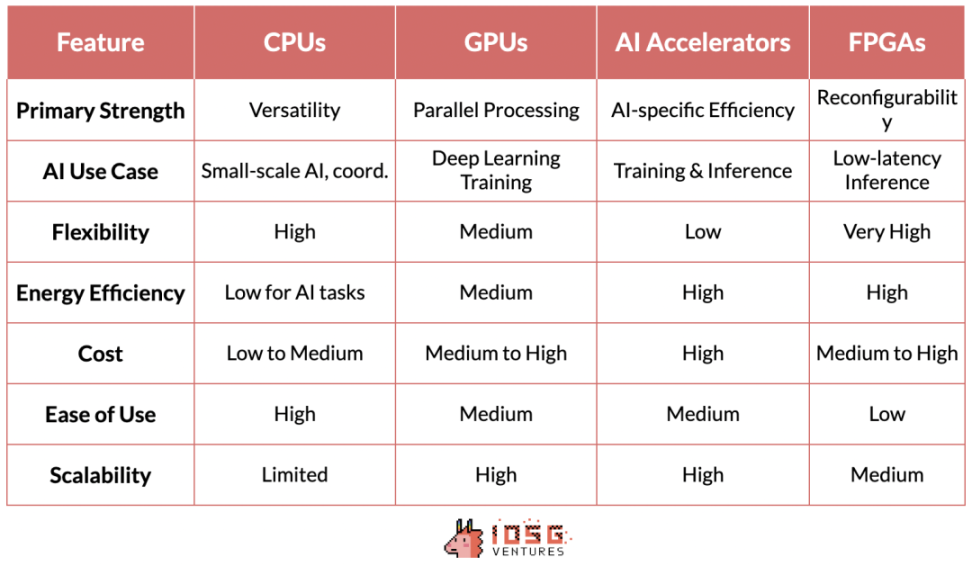

CPU (Central Processing Unit): is the basic processor for computing. They excel at handling sequential tasks and are important for general computing, including data preprocessing, small-scale artificial intelligence tasks, and coordinating other components.

-

GPU (Graphics Processing Unit): Originally designed for graphics rendering, it has become an important component of artificial intelligence due to its ability to perform a large number of simple calculations simultaneously. This parallel processing capability makes GPUs ideal for training deep learning models, and without the development of GPUs, modern GPT models would not be possible.

-

AI accelerators: Chips designed specifically for AI workloads that are optimized for common AI operations, delivering high performance and energy efficiency for both training and inference tasks.

-

FPGA (Field-Programmable Array Logic): Provides flexibility with its reprogrammable nature. They can be optimized for specific AI tasks, especially in inference scenarios that require low latency.

2. Bottom-level software: middleware

This layer in the AI technology stack is crucial because it builds a bridge between high-level AI frameworks and the underlying hardware. Technologies such as CUDA, ROCm, OneAPI, and SNPE strengthen the connection between high-level frameworks and specific hardware architectures, achieving performance optimization.

As NVIDIAs proprietary software layer, CUDA is the cornerstone of the companys rise in the AI hardware market. NVIDIAs leadership is not only due to its hardware advantages, but also reflects the strong network effects of its software and ecosystem integration.

CUDA has such a great influence because it is deeply integrated into the AI technology stack and provides a set of optimized libraries that have become the de facto standard in the field. This software ecosystem has built a strong network effect: AI researchers and developers who are proficient in CUDA spread its use to academia and industry during training.

The resulting virtuous cycle strengthens NVIDIA鈥檚 market leadership as the CUDA-based ecosystem of tools and libraries becomes increasingly indispensable to AI practitioners.

This symbiosis of hardware and software not only solidifies NVIDIAs position at the forefront of AI computing, it also gives the company significant pricing power, which is rare in the typically commoditized hardware market.

CUDAs dominance and the relative obscurity of its competitors can be attributed to a number of factors that have created significant barriers to entry. NVIDIAs first-mover advantage in GPU-accelerated computing enabled CUDA to build a strong ecosystem before competitors gained a foothold. Although competitors such as AMD and Intel have excellent hardware, their software layers lack the necessary libraries and tools and cannot be seamlessly integrated with existing technology stacks, which is why there is a huge gap between NVIDIA/CUDA and other competitors.

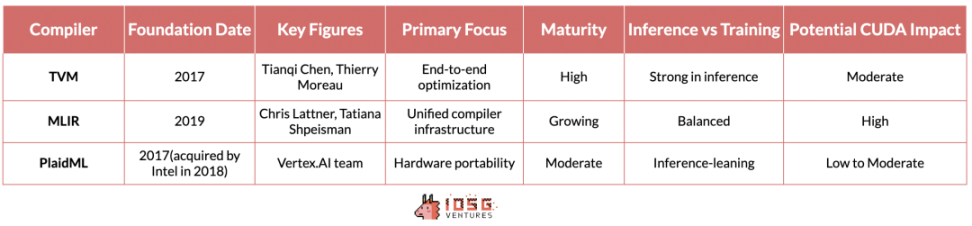

3. Compiler: Translator

TVM (Tensor Virtual Machine), MLIR (Multi-Layer Intermediate Representation), and PlaidML provide different solutions to the challenge of optimizing AI workloads across multiple hardware architectures.

TVM originated from research at the University of Washington and has quickly gained attention for its ability to optimize deep learning models for a variety of devices, from high-performance GPUs to resource-constrained edge devices. Its advantage lies in the end-to-end optimization process, which is particularly effective in inference scenarios. It completely abstracts the differences in underlying vendors and hardware, allowing inference workloads to run seamlessly on different hardware, whether it is NVIDIA devices or AMD, Intel, etc.

Beyond inference, however, the picture becomes more complicated. The ultimate goal of hardware-replaceable computation for AI training remains unsolved. However, there are several initiatives worth mentioning in this regard.

MLIR, Googles project, takes a more fundamental approach. By providing a unified intermediate representation for multiple levels of abstraction, it aims to simplify the entire compiler infrastructure for both inference and training use cases.

PlaidML, now led by Intel, is positioning itself as the dark horse in this race. It is focusing on portability across multiple hardware architectures (including those beyond traditional AI accelerators), envisioning a future where AI workloads run seamlessly on a variety of computing platforms.

If any of these compilers can be well integrated into the technology stack, without affecting model performance and without requiring developers to make any additional modifications, this is likely to threaten CUDAs moat. However, MLIR and PlaidML are not mature enough and are not well integrated into the AI technology stack, so they do not currently pose a clear threat to CUDAs leadership.

4. Distributed computing: coordinator

Ray and Horovod represent two different approaches to distributed computing in the AI field, each addressing the key need for scalable processing in large-scale AI applications.

Ray, developed by UC Berkeleys RISELab, is a general-purpose distributed computing framework. It excels in flexibility, allowing the distribution of various types of workloads beyond machine learning. The actor-based model in Ray greatly simplifies the parallelization process of Python code, making it particularly suitable for reinforcement learning and other artificial intelligence tasks that require complex and diverse workflows.

Horovod, originally designed by Uber, focuses on the distributed implementation of deep learning. It provides a concise and efficient solution for scaling deep learning training processes across multiple GPUs and server nodes. The highlight of Horovod is its user-friendliness and optimization for data-parallel training of neural networks, which enables it to perfectly integrate with mainstream deep learning frameworks such as TensorFlow and PyTorch, allowing developers to easily scale their existing training codes without making extensive code modifications.

5. Conclusion: From the perspective of cryptocurrency

Integration with existing AI stacks is critical to the DePin project, which aims to build a distributed computing system. This integration ensures compatibility with current AI workflows and tools, lowering the barrier to adoption.

In the cryptocurrency space, the current GPU network, which is essentially a decentralized GPU rental platform, marks the initial steps towards more sophisticated distributed AI infrastructure. These platforms are more like Airbnb-style ตลาดs rather than operating as distributed clouds. Although they are useful for some applications, these platforms are not yet sufficient to support true distributed training, which is a key requirement for advancing large-scale AI development.

Current distributed computing standards like Ray and Horovod are not designed for globally distributed networks. For a truly working decentralized network, we need to develop another framework on this layer. Some skeptics even believe that since Transformer models require intensive communication and optimization of global functions during learning, they are incompatible with distributed training methods. On the other hand, optimists are trying to come up with new distributed computing frameworks that work well with globally distributed hardware. Yotta is one of the startups trying to solve this problem.

NeuroMesh goes a step further. It redesigns the machine learning process in a particularly innovative way. By using predictive coding networks (PCNs) to find convergence to local error minimization, rather than directly finding the optimal solution to the global loss function, NeuroMesh solves a fundamental bottleneck in distributed AI training.

This approach not only enables unprecedented parallelization, but also makes it possible to train models on consumer-grade GPU hardware (such as RTX 4090), thereby democratizing AI training. Specifically, the 4090 GPU has similar computing power to the H100, but they are not fully utilized during model training due to insufficient bandwidth. Because PCN reduces the importance of bandwidth, it makes it possible to utilize these low-end GPUs, which may bring significant cost savings and efficiency improvements.

GenSyn, another ambitious crypto AI startup, aims to build a set of compilers. Gensyns compilers allow any type of computing hardware to be seamlessly used for AI workloads. For example, just like TVM does for inference, GenSyn is trying to build similar tools for model training.

If successful, it could significantly expand the capabilities of decentralized AI computing networks to handle more complex and diverse AI tasks by efficiently utilizing a variety of hardware. This ambitious vision, while challenging due to the complexity and high technical risk of optimizing across diverse hardware architectures, could weaken the moat of CUDA and NVIDIA if they can execute on this vision and overcome obstacles such as maintaining heterogeneous system performance.

About reasoning: Hyperbolics approach, combining verifiable reasoning with a decentralized network of heterogeneous computing resources, embodies a relatively pragmatic strategy. By leveraging compiler standards such as TVM, Hyperbolic can take advantage of a wide range of hardware configurations while maintaining performance and reliability. It can aggregate chips from multiple vendors (from NVIDIA to AMD, Intel, etc.), including consumer-grade hardware and high-performance hardware.

These developments at the intersection of crypto-AI portend a future in which AI computing could become more distributed, efficient, and accessible. The success of these projects will depend not only on their technical merits, but also on their ability to seamlessly integrate with existing AI workflows and address practical concerns of AI practitioners and businesses.

This article is sourced from the internet: IOSG Ventures: From silicon to intelligence, a detailed explanation of the AI training and reasoning technology stack

Related: SignalPlus Volatility Column (20240719): A Basin of Cold Water

The bankrupt Bitcoin exchange Mt.Gox reported multiple unauthorized attempts to log into creditor accounts in the past 24 hours, causing creditors to worry about the safety of their remaining assets and possible selling pressure on the currency price. The market may be worried that the incident will have an adverse impact on the short-term currency price. Coupled with the sharp drop in ETF traffic in the past two days, the gradually spreading risk aversion has spawned a sell-off of assets, causing the BTC price to pull back below $64,000, testing the key support level again. Source: TradingView; Farside Investors In terms of options, the overall implied volatility level has been relatively stable since yesterdays sharp decline, but it is still at a recent high. There are three changes worth noting.…