Original author: Jane Doe, Chen Li

Original source: Youbi Capital

1 The intersection of AI and Crypto

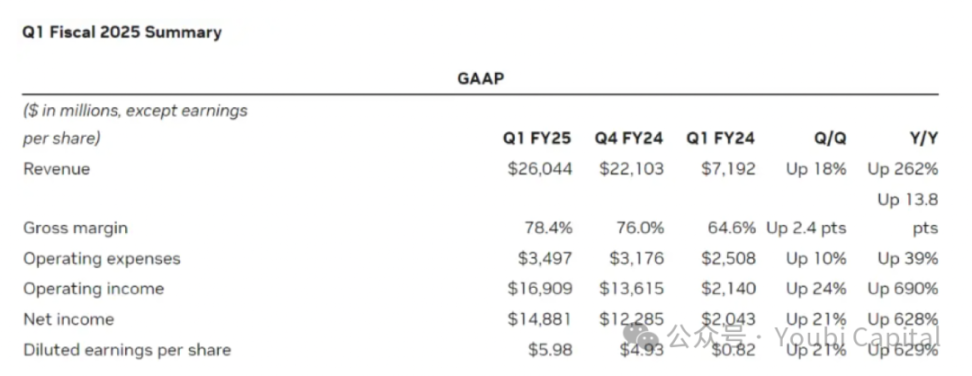

On May 23, chip giant Nvidia released its financial report for the first quarter of fiscal year 2025. The report shows first-quarter revenue of US$26 billion, of which data center revenue rose 427% year over year to a staggering US$22.6 billion. Behind Nvidia's financial performance, strong enough to lift the U.S. stock market on its own, is the explosion in demand for computing power as global technology companies compete in the AI track. The more ambitious the top technology companies become in AI, the faster their demand for computing power grows. According to TrendForce's forecast, in 2024 the four major U.S. cloud service providers, Microsoft, Google, AWS and Meta, are expected to account for 20.2%, 16.6%, 16% and 10.8% of global demand for high-end AI servers respectively, more than 60% in total.

Image source: https://investor.nvidia.com/financial-info/financial-reports/default.aspx

"Chip shortage" has been a buzzword for several years running. On the one hand, training and inference for large language models (LLMs) require enormous computing power, and both the cost and the demand grow exponentially as models iterate. On the other hand, large companies like Meta buy chips in huge quantities, so global computing resources tilt toward these technology giants and small businesses find it increasingly difficult to obtain the computing power they need. The difficulties small businesses face come not only from insufficient chip supply caused by surging demand, but also from a structural mismatch in supply. A large number of GPUs on the supply side still sit idle: some data centers have utilization rates of only 12%-18%, and a large amount of computing power in crypto mining is also idle because of falling profits. Although not all of this computing power suits professional scenarios such as AI training, consumer-grade hardware can still play a significant role in other fields such as AI inference, cloud game rendering, and cloud phones. The opportunity to integrate and use these resources is huge.

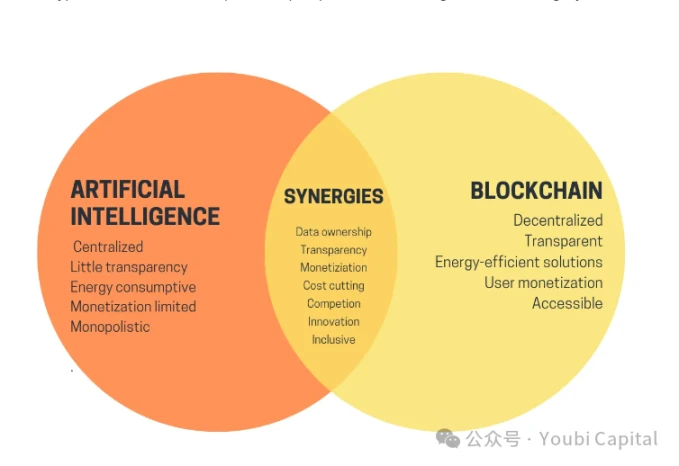

Turning from AI to crypto: after three quiet years in the crypto market, another bull market has finally arrived. Bitcoin prices have hit new highs, and memecoins have emerged one after another. Although AI and crypto have both been buzzwords in recent years, artificial intelligence and blockchain, two important technologies, have run like parallel lines without finding an intersection. At the beginning of this year, Vitalik published an article titled "The promise and challenges of crypto + AI applications", discussing future scenarios that combine AI and crypto. The article lays out many visions, including using cryptographic technologies such as blockchain and MPC to decentralize AI training and inference, which could open the black box of machine learning and make AI models more trustless. There is still a long way to go to realize these visions. But one of the use cases Vitalik mentions, using crypto's economic incentives to empower AI, is an important direction that can be realized in the short term. The decentralized computing power network is one of the most suitable scenarios for AI + crypto at this stage.

2 Decentralized computing network

Many projects are already developing decentralized computing power networks. Their underlying logic is similar and can be summarized as follows: tokens incentivize computing power holders to join the network and provide computing services, and these scattered resources are aggregated into a decentralized computing power network of meaningful scale. This improves the utilization of idle computing power and meets customers' computing needs at lower cost, a win-win for buyers and sellers.

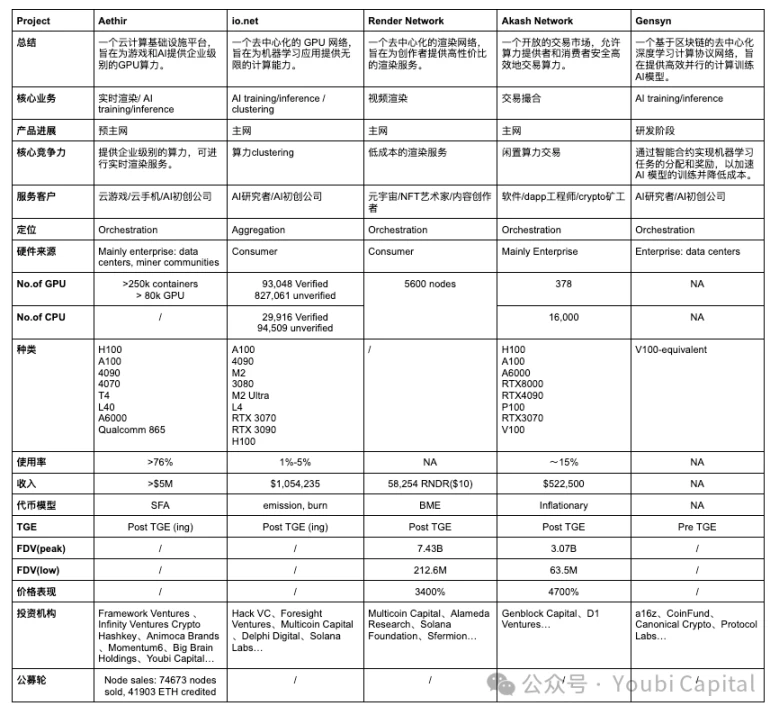

To give readers an overall understanding of this track in a short time, this article deconstructs specific projects and the track as a whole from two perspectives, micro and macro, aiming to provide an analytical lens for understanding each project's core competitive advantages and the overall development of the decentralized computing power track. The author will introduce and analyze five projects, Aethir, io.net, Render Network, Akash Network and Gensyn, and then summarize and evaluate the state of the projects and the development of the track.

From the perspective of the analytical framework, if we focus on a specific decentralized computing network, we can break it down into four core components:

- Hardware network: Integrates scattered computing resources and shares and load-balances them through nodes distributed around the world. It is the base layer of the decentralized computing network.

- Two-sided market: Matches computing power providers with buyers through sound pricing and discovery mechanisms, and provides a secure trading platform so that transactions between supply and demand are transparent, fair, and credible.

- Consensus mechanism: Ensures that nodes in the network run correctly and complete their work. It monitors two things: 1) liveness, that is, whether a node is online and ready to accept tasks at any time; 2) node work proof, that is, whether the node completed the task it received effectively and correctly, without its computing power being diverted to other purposes that occupy processes and threads.

- Token incentives: The token model incentivizes more participants to provide and use services, and uses tokens to capture the resulting network effect so that the community shares in the benefits.
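To make the market and consensus components more concrete, here is a minimal sketch of how a network might match an order to a node and run the two checks described above. It is an illustration under stated assumptions, not the mechanism of any project discussed here: the `Node` fields, the 30-second heartbeat timeout, the cheapest-first matching rule, and verification by re-execution are all hypothetical choices.

```python
from dataclasses import dataclass

HEARTBEAT_TIMEOUT_S = 30.0  # hypothetical threshold, not taken from any project


@dataclass
class Node:
    node_id: str
    gpu_model: str
    price_per_hour: float  # asking price, in arbitrary units
    last_heartbeat: float  # timestamp of the latest liveness ping
    busy: bool = False


def is_online(node, now):
    """Check 1: the node has pinged recently enough to accept tasks."""
    return now - node.last_heartbeat <= HEARTBEAT_TIMEOUT_S


def match_order(nodes, gpu_model, max_price, now):
    """Two-sided market: pick the cheapest online, idle node that fits the order."""
    candidates = [
        n for n in nodes
        if n.gpu_model == gpu_model
        and n.price_per_hour <= max_price
        and not n.busy
        and is_online(n, now)
    ]
    return min(candidates, key=lambda n: n.price_per_hour, default=None)


def verify_work(claimed_result, recomputed_result):
    """Check 2 (node work proof), reduced here to re-execution by a checker."""
    return claimed_result == recomputed_result


nodes = [
    Node("a", "A100", 2.0, last_heartbeat=100.0),
    Node("b", "A100", 1.5, last_heartbeat=10.0),  # cheapest, but heartbeat is stale
    Node("c", "A100", 1.8, last_heartbeat=95.0),
]
chosen = match_order(nodes, "A100", max_price=2.5, now=110.0)
print(chosen.node_id)  # c
```

Node `b` offers the lowest price but is skipped because it fails the liveness check, which is why the two components have to work together.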

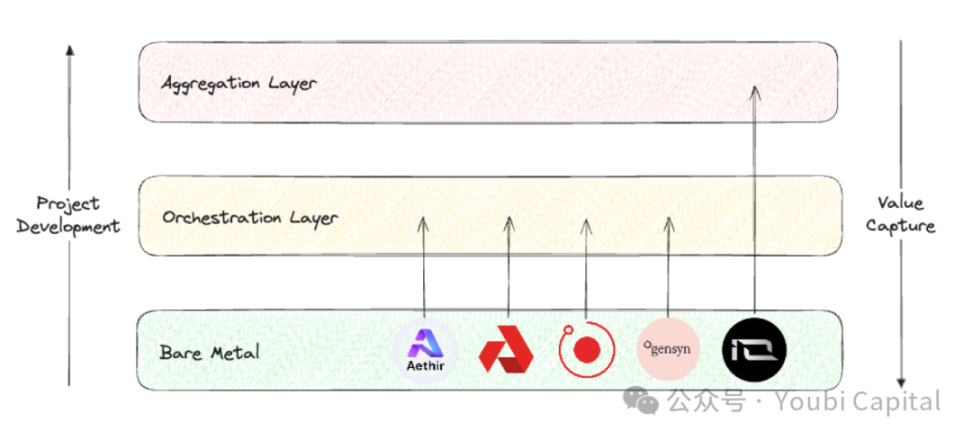

Taking a bird's-eye view of the entire decentralized computing power track, the Blockworks Research report provides a good analytical framework. We can divide the projects in this track into three layers.

- Bare metal layer: The base layer of the decentralized computing stack. Its main task is to collect raw computing resources and make them available through API calls.

- Orchestration layer: The middle layer of the decentralized computing stack. Its main tasks are coordination and abstraction: it handles scheduling, scaling, operation, load balancing and fault tolerance of computing power. It abstracts away the complexity of managing the underlying hardware and gives end users a higher-level interface tailored to specific customer groups.

- Aggregation layer: The top layer of the decentralized computing stack. Its main task is integration: it provides a unified interface so that users can run multiple kinds of computing tasks in one place, such as AI training, rendering, and zkML. It acts as the orchestration and distribution layer across multiple decentralized computing services.

Image source: Youbi Capital
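The three layers can be sketched as a small stack in which each layer only talks to the one below it. This is a toy illustration, not the architecture of any project in this article: the class names, the `run` and `submit` methods, and the round-robin scheduling are assumptions made for the example.

```python
class LocalMachine:
    """Bare metal layer: one raw machine exposed behind a callable API."""

    def __init__(self, name):
        self.name = name

    def run(self, command):
        return f"{self.name} ran {command}"


class Orchestrator:
    """Orchestration layer: schedules jobs of one workload type over machines."""

    def __init__(self, workload, machines):
        self.workload = workload
        self.machines = list(machines)
        self._next = 0

    def submit(self, job):
        # Round-robin load balancing hides which machine does the work.
        machine = self.machines[self._next % len(self.machines)]
        self._next += 1
        return machine.run(f"{self.workload}:{job}")


class Aggregator:
    """Aggregation layer: one entry point that routes to many orchestrators."""

    def __init__(self, orchestrators):
        self.by_workload = {o.workload: o for o in orchestrators}

    def submit(self, workload, job):
        if workload not in self.by_workload:
            raise ValueError(f"no orchestrator for workload {workload!r}")
        return self.by_workload[workload].submit(job)


stack = Aggregator([
    Orchestrator("render", [LocalMachine("gpu-0"), LocalMachine("gpu-1")]),
    Orchestrator("ai-training", [LocalMachine("gpu-2")]),
])
print(stack.submit("render", "frame-1"))      # gpu-0 ran render:frame-1
print(stack.submit("ai-training", "epoch-1")) # gpu-2 ran ai-training:epoch-1
```

The user of `Aggregator` never sees machines or schedulers, which is the sense in which the top layer sits closest to the end user.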

Based on these two analytical frameworks, we compare the five selected projects side by side on four dimensions: core business, market positioning, hardware facilities and financial performance.

2.1 Core Business

In terms of underlying logic, decentralized computing networks are highly homogeneous: all of them use tokens to incentivize holders of idle computing power to provide computing services. On top of this shared logic, the differences in the projects' core businesses can be understood from three aspects:

- Sources of idle computing power

  - There are two main sources of idle computing power in the market: 1) idle computing power held by data centers, miners and other companies; 2) idle computing power held by individual (retail) users. Data centers usually run professional-grade hardware, while individuals usually buy consumer-grade chips.

  - Aethir, Akash Network and Gensyn collect computing power mainly from enterprises. The benefits are: 1) enterprises and data centers usually have higher-quality hardware and professional maintenance teams, so the performance and reliability of their computing resources are higher; 2) their computing resources are often more homogeneous, and centralized management and monitoring make scheduling and maintenance more efficient. In return, this approach demands more of the project team, which needs commercial relationships with the enterprises that control the computing power. Scalability and decentralization are also affected to some extent.

  - Render Network and io.net mainly encourage individuals to contribute their idle computing power. The benefits are: 1) the explicit cost of individuals' idle computing power is low, so it can supply cheaper computing resources; 2) the network is more scalable and decentralized, which improves the elasticity and robustness of the system. The disadvantage is that retail resources are widely distributed and non-uniform, which complicates management and scheduling and makes operation and maintenance harder. It is also harder to kickstart an initial network effect on retail computing power alone. Finally, retail devices may carry more security risks, which brings the risk of data leakage and abuse of computing power.

- Computing power consumers

  - On the consumer side, the target customers of Aethir, io.net, and Gensyn are mainly enterprises. For business (B-side) customers, AI and real-time game rendering are high-performance computing workloads. They place extremely high demands on computing resources and usually require high-end GPUs or professional-grade hardware. B-side customers also have high requirements for the stability and reliability of computing resources, so the network must offer high-quality service level agreements to keep their projects running normally and must provide timely technical support. Migration costs for B-side customers are also very high. If a decentralized network lacks a mature SDK that lets the project team deploy quickly (for example, Akash Network requires users to develop against remote ports themselves), it is difficult for customers to migrate. Without an extremely significant price advantage, customers' willingness to migrate is very low.

  - Render Network and Akash Network mainly serve individual (C-end) users. To serve them, projects need to design simple, easy-to-use interfaces and tools that give consumers a good experience. Consumers are also very price-sensitive, so projects need to offer competitive pricing.

- Hardware type

  - Common computing hardware includes CPUs, FPGAs, GPUs, ASICs and SoCs. They differ significantly in design goals, performance characteristics and application areas. In summary, CPUs are better at general-purpose computing; FPGAs offer high parallelism and programmability; GPUs perform well in parallel computing; ASICs are the most efficient at specific tasks; and SoCs integrate multiple functions on one chip, which suits highly integrated applications. Which hardware to choose depends on the needs of the specific application, the performance requirements and the cost. The decentralized computing power projects discussed here mostly collect GPU computing power, a choice determined by their business types and by the characteristics of GPUs, which have unique advantages in AI training, parallel computing, multimedia rendering and similar workloads.

  - Although most of these projects integrate GPUs, different applications have different hardware requirements, so the hardware differs in its optimized cores and parameters, including parallelism versus serial dependencies, memory, latency, and so on. For example, rendering workloads are actually better suited to consumer-grade GPUs than to more powerful data center GPUs, because rendering relies heavily on ray tracing, and consumer-grade chips such as the 4090 have enhanced RT cores optimized specifically for ray tracing. AI training and inference require professional-grade GPUs. Therefore, Render Network can collect consumer-grade GPUs such as RTX 3090s and 4090s from individuals, while io.net needs more professional-grade GPUs such as H100s and A100s to meet the needs of AI startups.

2.2 Market Positioning

In terms of project positioning, the bare metal, orchestration and aggregation layers differ in the core problems they solve, their optimization focus, and their ability to capture value.

- The bare metal layer focuses on collecting and utilizing physical resources. The orchestration layer focuses on scheduling and optimizing computing power, tuning the design of the physical hardware to the needs of its customer groups. The aggregation layer is general purpose and focuses on integrating and abstracting different resources. From a value chain perspective, each project should start at the bare metal layer and work its way upward.

- From a value capture perspective, the ability to capture value increases layer by layer, from bare metal to orchestration to aggregation. The aggregation layer can capture the most value because an aggregation platform enjoys the strongest network effect and reaches the most users directly. It is effectively the traffic entrance of the decentralized network and so occupies the highest value capture position in the entire computing resource management stack.

- Correspondingly, an aggregation platform is the hardest to build. The project has to solve many problems at once, including technical complexity, heterogeneous resource management, system reliability and scalability, achieving network effects, security and privacy protection, and complex operation and maintenance. These challenges work against a project's cold start, and success depends on the maturity and timing of the track. It is not realistic to build an aggregation layer before the orchestration layer has matured and won a meaningful market share.

- At present, Aethir, Render Network, Akash Network and Gensyn all belong to the orchestration layer and are designed to serve specific use cases and customer groups. Aethir's current main business is real-time rendering for cloud games, and it provides some development and deployment environments and tools for B-side customers; Render Network's main business is video rendering; Akash Network's mission is to provide a trading platform similar to Taobao; and Gensyn focuses on AI training. io.net positions itself as an aggregation layer, but what it has implemented so far is still some distance from a complete aggregation layer. Although it has brought in hardware from Render Network and Filecoin, the abstraction and integration of those hardware resources is not yet complete.

2.3 Hardware facilities

- At present, not all projects have published detailed network data. Relatively speaking, the io.net explorer has the best UI: it shows the number, type, price, distribution, network usage, node income and other parameters of GPUs and CPUs. However, at the end of April the io.net front end was attacked. Because io.net did not enforce authentication on its PUT/POST endpoints, hackers tampered with the front-end data. The incident is also a warning about privacy and the reliability of network data at other projects.

- In terms of GPU count and models, io.net, as an aggregation layer, should have collected the most hardware. Aethir follows closely behind, and the hardware situation of the other projects is less transparent. The GPU models show that io.net has both professional-grade GPUs such as the A100 and consumer-grade GPUs such as the 4090, a wide variety that fits its aggregation positioning and lets it choose the most suitable GPU for a given task. However, GPUs of different models and brands may require different drivers and configurations, and the software needs extensive optimization, which increases the complexity of management and maintenance. At present, task allocation on io.net is based mainly on user selection.

- Aethir has released its own mining machine. In May, Aethir Edge, developed with support from Qualcomm, was officially launched. It breaks away from the model of a single centralized GPU cluster deployed far from users and pushes computing power to the edge. Aethir Edge will be combined with H100 cluster computing power to serve AI scenarios: it can deploy trained models and provide users with inference services at the best cost. This solution is closer to users, responds faster, and is more cost-effective.

- From the perspective of supply and demand, taking Akash Network as an example, its statistics show about 16k CPUs and 378 GPUs in total. Based on rental demand on the network, CPU and GPU utilization rates are 11.1% and 19.3% respectively. Only the professional-grade H100 GPU has a relatively high rental rate, and other models are mostly idle. Other networks face roughly the same situation as Akash: overall demand is not high, and apart from popular chips such as the A100 and H100, most computing power sits idle.

- In terms of price, these networks are cheaper than the giants of the cloud computing market, but their cost advantage over other traditional service providers is not prominent.
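To put the Akash Network utilization figures above in absolute terms, the arithmetic can be worked through as follows. The totals and rates are the ones quoted in the text; "about 16k" is treated as exactly 16,000 for illustration, so the CPU result is only approximate.

```python
total_cpus = 16_000       # "about 16k" in the text, rounded for illustration
total_gpus = 378
cpu_utilization = 0.111   # 11.1%
gpu_utilization = 0.193   # 19.3%


def leased(total, utilization):
    """Number of units currently rented out, rounded to whole units."""
    return round(total * utilization)


leased_cpus = leased(total_cpus, cpu_utilization)
leased_gpus = leased(total_gpus, gpu_utilization)
idle_gpus = total_gpus - leased_gpus

print(leased_cpus)  # 1776
print(leased_gpus)  # 73
print(idle_gpus)    # 305
```

In other words, on these figures only around 73 GPUs on the network were earning rental income, while roughly 305 sat idle.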

2.4 Financial Performance

- However the token model is designed, healthy tokenomics must meet the following basic conditions: 1) user demand for the network must be reflected in the token price, meaning the token can capture value; 2) all participants, whether developers, nodes, or users, must receive long-term and fair incentives; 3) governance must be decentralized, avoiding excessive holdings by insiders; 4) inflation and deflation mechanisms and token release schedules must be reasonable, avoiding large price swings that undermine the robustness and sustainability of the network.

- If we broadly divide token models into BME (burn and mint equilibrium) and SFA (stake for access), the two differ in where deflationary pressure on the token comes from. The BME model burns tokens after users purchase services, so deflationary pressure is determined by demand. SFA requires service providers or nodes to stake tokens to qualify to provide services, so deflationary pressure comes from supply. The advantage of BME is that it suits non-standardized goods better. However, if demand for the network is insufficient, the token may face sustained inflationary pressure. The token models of the projects differ in detail, but in general Aethir leans toward SFA, while io.net, Render Network and Akash Network lean toward BME, and Gensyn's model is still unknown.

- From the revenue perspective, demand for the network is directly reflected in overall network revenue (miners' revenue is not discussed here, because miners receive subsidies from the project in addition to rewards for completing tasks). Based on public data, io.net has the highest figure. Aethir has not yet announced its revenue, but according to public information it has signed orders with many B-side customers.

- In terms of token prices, Render Network and Akash Network are the only two that have conducted ICOs. Aethir and io.net have also recently issued tokens, but their price performance still needs to be observed, so we do not discuss them in detail here. Gensyn's plan is still unclear. Judging from the two projects with ICOs and from other tokens in the same track that fall outside the scope of this article, decentralized computing power networks have shown very impressive price performance overall, which to some extent reflects the huge market potential and the community's high expectations.
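The difference between the two token models described above can be sketched with two small functions. This is a simplified illustration with made-up numbers, not the tokenomics of any project in this article: it assumes a fixed mint per epoch for BME and a fixed stake per node for SFA, both hypothetical parameters.

```python
def bme_net_supply_change(minted_per_epoch, service_spend_tokens):
    """BME: rewards are minted, and tokens spent on services are burned.

    A positive result means net inflation for the epoch; a negative
    result means net deflation.
    """
    return minted_per_epoch - service_spend_tokens


def sfa_circulating_supply(total_supply, active_nodes, stake_per_node):
    """SFA: every active node locks a stake, shrinking circulating supply."""
    return total_supply - active_nodes * stake_per_node


# BME: deflationary pressure follows demand (hypothetical numbers).
print(bme_net_supply_change(1_000, 400))    # 600: weak demand, net inflation
print(bme_net_supply_change(1_000, 1_500))  # -500: strong demand, net deflation

# SFA: deflationary pressure follows supply-side participation.
print(sfa_circulating_supply(1_000_000, 200, 500))  # 900000
print(sfa_circulating_supply(1_000_000, 800, 500))  # 600000
```

The first BME case shows the risk noted above: when demand for the network is weak, minting outpaces burning and the token keeps inflating.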

2.5 Summary

- The decentralized computing network track has developed rapidly overall, and many projects can already serve customers with real products and generate some income. The track has moved beyond pure narrative into a stage where it can provide preliminary services.

- Weak demand is a common problem for decentralized computing networks, and long-term customer demand has not been well verified or explored. However, the weak demand side has not weighed much on token prices, and the projects that have already issued tokens have performed well.

- AI is the main narrative of decentralized computing networks, but it is not the only business. Besides AI training and inference, computing power can also be used for real-time rendering of cloud games, cloud phone services, and more.

- Hardware heterogeneity in these networks is relatively high, and both the quality and the scale of the computing power need further improvement.

- For C-end users, the cost advantage is not very obvious. B-end users, beyond cost savings, also need to consider the stability and reliability of the service, technical support, compliance and legal support, and Web3 projects generally do not do well in these areas.

3 Closing thoughts

The explosive growth of AI has created huge demand for computing power. Since 2012, the computing power used in AI training tasks has grown exponentially, currently doubling every 3.5 months (compared with a doubling every 18 months under Moore's Law). Over that period, demand for computing power has grown more than 300,000-fold, far exceeding the 12-fold growth implied by Moore's Law. According to forecasts, the GPU market is expected to grow at a compound annual growth rate of 32% to more than $200 billion over the next five years. AMD's estimate is even higher: the company expects the GPU chip market to reach $400 billion by 2027.

Image source: https://www.stateof.ai/
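The growth figures quoted above can be checked against each other with a little arithmetic. The sketch below only uses the numbers in the text (3.5-month and 18-month doubling times, 300,000-fold growth, 32% CAGR over five years); the derived values are consistency checks, not additional data.

```python
import math


def months_for_growth(factor, doubling_months):
    """Months needed to grow by `factor` at a given doubling time."""
    return math.log2(factor) * doubling_months


def growth_over(months, doubling_months):
    """Growth multiple reached after `months` at a given doubling time."""
    return 2 ** (months / doubling_months)


elapsed = months_for_growth(300_000, 3.5)  # about 63.7 months, a bit over 5 years
moore = growth_over(elapsed, 18)           # about 11.6, i.e. roughly 12-fold
cagr_multiple = 1.32 ** 5                  # about 4.0 over five years

print(round(elapsed, 1), round(moore, 1), round(cagr_multiple, 2))
```

A 300,000-fold increase at a 3.5-month doubling time takes a little over five years, and an 18-month doubling time over the same span gives roughly the 12-fold figure attributed to Moore's Law. Likewise, a 32% compound annual growth rate multiplies the market about fourfold in five years.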

The explosive growth of artificial intelligence and other compute-intensive workloads (such as AR/VR rendering) has exposed structural inefficiencies in traditional cloud computing and the leading computing markets. In theory, decentralized computing networks can offer more flexible, lower-cost and more efficient solutions by drawing on distributed idle computing resources, thereby meeting the market's huge demand for computing power. The combination of crypto and AI therefore has huge market potential, but it also faces fierce competition from traditional companies, high entry barriers and a complex market environment. Overall, across all crypto tracks, decentralized computing networks are one of the most promising verticals that can meet real needs.

Image source: https://vitalik.eth.limo/general/2024/01/30/cryptoai.html

The future is bright, but the road is tortuous. Realizing the vision above still requires solving many problems. In summary: at this stage, a project that only provides traditional cloud services has a very thin profit margin. On the demand side, large enterprises generally build their own computing power, and pure C-end developers mostly choose cloud services. Whether the small and medium-sized enterprises that actually use decentralized computing power networks will generate stable demand still needs further exploration and verification. On the other hand, AI is a vast market with a very high ceiling and plenty of room for imagination. To reach that broader market, decentralized computing power providers will need to evolve toward model and AI services, explore more crypto + AI use cases, and expand the value their projects can create. For now, though, moving further into AI still faces many problems and challenges:

- The price advantage is not outstanding: The earlier data comparison shows that the cost advantage of decentralized computing networks has not materialized. One possible reason is that for professional chips in high demand, such as the H100 and A100, market mechanisms ensure that this hardware will not be cheap. In addition, although a decentralized network can collect idle computing resources, the loss of economies of scale that comes with decentralization, high network and bandwidth costs, and extremely complex management, operation and maintenance further increase the cost of computing.

- The particularity of AI training: Training AI in a decentralized manner currently faces huge technical bottlenecks, which show up clearly in the GPU workflow. When training a large language model, each GPU first receives preprocessed data batches and performs forward propagation and back propagation to produce gradients. Next, the gradients are aggregated across GPUs and each GPU updates its model parameters so that all GPUs stay synchronized. This process repeats until all batches have been trained or a predetermined number of rounds is reached. It involves a large amount of data transmission and synchronization. Which parallelism and synchronization strategies to use, how to optimize network bandwidth and latency, and how to reduce communication costs have not yet been well answered. At this stage, it is not very realistic to train AI on a decentralized computing power network.

- Data security and privacy: During the training of large language models, every step of data processing and transmission, such as data distribution, model training, and parameter and gradient aggregation, may affect data security and privacy. Data privacy also matters more than model privacy. If the data privacy problem cannot be solved, the demand side cannot truly scale.
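The training workflow described above (forward and back propagation on each GPU, gradient aggregation, synchronized parameter update) can be sketched in a few lines. This is a toy simulation of data-parallel training on a one-parameter model, with workers simulated in a loop instead of running on real GPUs; the dataset, learning rate, and the 7-billion-parameter, 2-bytes-per-parameter, 8-worker example are all hypothetical. The communication estimate uses the standard ring all-reduce volume of 2(N-1)/N times the gradient size per worker per step.

```python
def local_gradient(w, batch):
    """Forward and backward pass for the model y = w * x with squared error."""
    grad = 0.0
    for x, y in batch:
        pred = w * x                 # forward propagation
        grad += 2 * (pred - y) * x   # back propagation: d/dw of (pred - y)^2
    return grad / len(batch)


def all_reduce_mean(grads):
    """Stand-in for gradient aggregation across workers."""
    return sum(grads) / len(grads)


def train(shards, w=0.0, lr=0.01, rounds=200):
    """Data-parallel SGD: each shard plays the role of one GPU's batch."""
    for _ in range(rounds):
        grads = [local_gradient(w, shard) for shard in shards]
        g = all_reduce_mean(grads)   # synchronization point on every step
        w -= lr * g                  # identical update applied by every worker
    return w


def ring_all_reduce_bytes(param_count, bytes_per_param, workers):
    """Bytes each worker sends per step in a ring all-reduce."""
    return 2 * (workers - 1) / workers * param_count * bytes_per_param


# Two simulated workers, data generated from y = 3x.
shards = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0), (4.0, 12.0)]]
print(round(train(shards), 6))  # 3.0

# Hypothetical 7B-parameter model, 2 bytes per parameter, 8 workers.
print(ring_all_reduce_bytes(7_000_000_000, 2, 8) / 1e9)  # 24.5 (GB per step)
```

Every training step ends in a synchronization whose traffic scales with model size, which is manageable over data center interconnects but becomes the bottleneck over the public internet links a decentralized network would rely on.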

Realistically, a decentralized computing network needs to balance discovering current demand against positioning for the future market. That means finding the right product positioning and target customers, for example targeting non-AI or Web3-native projects first, starting with relatively marginal needs, and building an early user base. At the same time, projects should keep exploring scenarios that combine AI and crypto, push the technical frontier, and upgrade and transform their services.

References

https://vitalik.eth.limo/general/2024/01/30/cryptoai.html

https://foresightnews.pro/article/detail/34368

https://research.web3caff.com/zh/archives/17351?ref=1554

This article is sourced from the internet: Born at the edge: How does the decentralized computing power network empower Crypto and AI?
